wav2vec2-xls-r-300m_phoneme-mfa_korean

This model is a fine-tuned version of facebook/wav2vec2-xls-r-300m on a phonetically balanced native Korean read-speech corpus.

- Model managed by: excalibur12

Training and Evaluation Data

Training Data

- Data Name: Phonetically Balanced Native Korean Read-speech Corpus

- Num. of Samples: 54,000 (540 speakers)

- Audio Length: 108 Hours

Evaluation Data

- Data Name: Phonetically Balanced Native Korean Read-speech Corpus

- Num. of Samples: 6,000 (60 speakers)

- Audio Length: 12 Hours
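
As a quick sanity check on the figures above, the sketch below derives the utterances per speaker and the mean utterance duration for each split. It uses only the sample counts, speaker counts, and audio lengths listed in this card; the names `SPLITS` and `summarize` are illustrative and not part of any released code.

```python
# Figures copied from the Training Data and Evaluation Data lists.
SPLITS = {
    "train": {"samples": 54_000, "speakers": 540, "hours": 108},
    "eval": {"samples": 6_000, "speakers": 60, "hours": 12},
}


def summarize(split: str) -> dict:
    """Derive per-speaker and per-utterance averages for one split."""
    s = SPLITS[split]
    return {
        "utterances_per_speaker": s["samples"] / s["speakers"],
        "mean_seconds_per_utterance": s["hours"] * 3600 / s["samples"],
    }


for name in SPLITS:
    print(name, summarize(name))
```

Under these figures both splits average 100 utterances per speaker at roughly 7.2 seconds each, and the evaluation split is one tenth of the combined data.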

Training Hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.2

- num_epochs: 20 (early stopping with a patience of 5 epochs)

- mixed_precision_training: Native AMP
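
To make the interaction between these values concrete, here is a minimal sketch of the effective batch size and of a linear schedule with warmup, written in plain Python. It assumes a single training device (which is what 8 × 2 = 16 implies), the 54,000 training samples listed earlier, and the common "linear warmup, then linear decay to zero" shape. The function names are illustrative, and the actual training run may differ in details such as step rounding.

```python
import math

# Values taken from the hyperparameter list above.
LEARNING_RATE = 1e-4
TRAIN_BATCH_SIZE = 8
GRAD_ACCUM_STEPS = 2
WARMUP_RATIO = 0.2
NUM_EPOCHS = 20
NUM_TRAIN_SAMPLES = 54_000  # from the Training Data section

# Assumption: one device, consistent with total_train_batch_size = 16.
NUM_DEVICES = 1


def effective_batch_size() -> int:
    """Samples consumed per optimizer update."""
    return TRAIN_BATCH_SIZE * GRAD_ACCUM_STEPS * NUM_DEVICES


def total_optimizer_steps() -> int:
    """Optimizer updates over the full 20 epochs (no early stop)."""
    steps_per_epoch = math.ceil(NUM_TRAIN_SAMPLES / effective_batch_size())
    return steps_per_epoch * NUM_EPOCHS


def warmup_steps() -> int:
    """Number of updates spent ramping the learning rate up."""
    return math.ceil(total_optimizer_steps() * WARMUP_RATIO)


def linear_lr(step: int) -> float:
    """Learning rate at a given optimizer step under the assumed schedule."""
    total = total_optimizer_steps()
    warmup = warmup_steps()
    if step < warmup:
        # Linear ramp from 0 up to the peak learning rate.
        return LEARNING_RATE * step / warmup
    # Linear decay from the peak down to 0 at the final step.
    return LEARNING_RATE * max(0.0, (total - step) / (total - warmup))


print(effective_batch_size(), total_optimizer_steps(), warmup_steps())
```

Because early stopping can end training before epoch 20, the decay phase of such a schedule would be cut short in that case and the final learning rate would be above zero.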

Evaluation Results

- Phone Error Rate: 3.88%

- Monophthong-wise Error Rates: (To be posted)

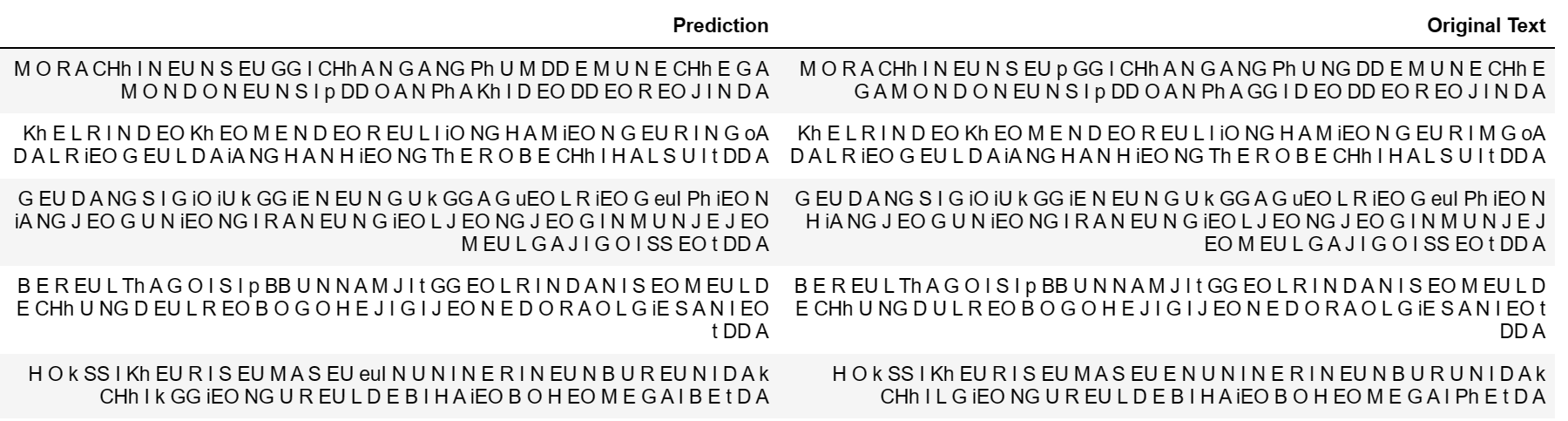
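
For readers unfamiliar with the metric, the sketch below shows one common way to compute a phone error rate: the Levenshtein distance between reference and predicted phone sequences, summed over the corpus and divided by the total number of reference phones. This card does not state exactly how the reported figure was computed, so the corpus-level aggregation and the function names here are assumptions, and the phone sequences in the usage line are made up for illustration.

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance (substitutions, deletions, insertions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference phone
                curr[j - 1] + 1,     # insertion of a spurious phone
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def phone_error_rate(
    refs: Sequence[Sequence[str]], hyps: Sequence[Sequence[str]]
) -> float:
    """Corpus-level PER: total edit errors / total reference phones."""
    if len(refs) != len(hyps):
        raise ValueError("refs and hyps must have the same length")
    total_phones = sum(len(r) for r in refs)
    if total_phones == 0:
        raise ValueError("reference contains no phones")
    total_errors = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    return total_errors / total_phones


# Made-up example: one deleted phone out of five reference phones.
print(phone_error_rate([["k", "a", "m", "s", "a"]], [["k", "a", "m", "a"]]))
```

Averaging per-utterance error rates instead of pooling errors over the corpus would weight short utterances more heavily and can give a different number.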

Output Examples

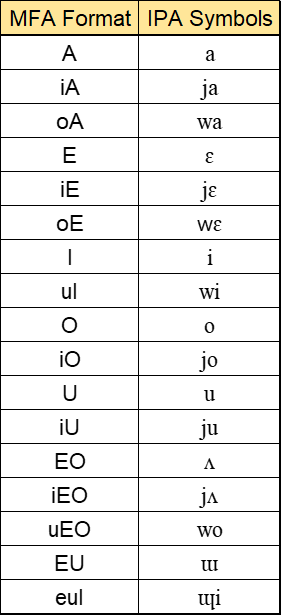

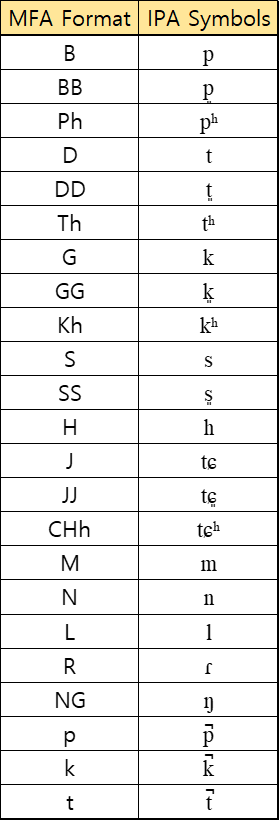

MFA-IPA Phoneset Tables

Vowels

Consonants

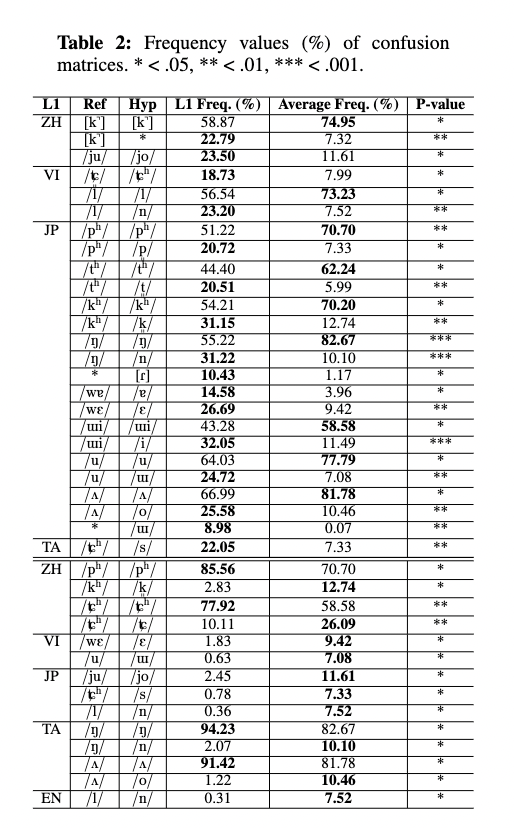

Experimental Results

Official implementation of the paper (ICPhS 2023)

Major error patterns of L2 Korean speech from five different L1s: Chinese (ZH), Vietnamese (VI), Japanese (JP), Thai (TH), English (EN)

Framework versions

- Transformers 4.21.3

- PyTorch 1.12.1

- Datasets 2.4.0

- Tokenizers 0.12.1
