Update README.md


README.md

CHANGED

|

@@ -8,7 +8,6 @@ base_model:

|

|

| 8 |

- arcee-ai/Virtuoso-Small

|

| 9 |

- CultriX/SeQwence-14B-EvolMerge

|

| 10 |

- CultriX/Qwen2.5-14B-Wernicke

|

| 11 |

-

- huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2

|

| 12 |

- sometimesanotion/lamarck-14b-prose-model_stock

|

| 13 |

- sometimesanotion/lamarck-14b-if-model_stock

|

| 14 |

- sometimesanotion/lamarck-14b-reason-model_stock

|

|

@@ -24,7 +23,7 @@ Lamarck-14B version 0.3 is strongly based on [arcee-ai/Virtuoso-Small](https://h

|

|

| 24 |

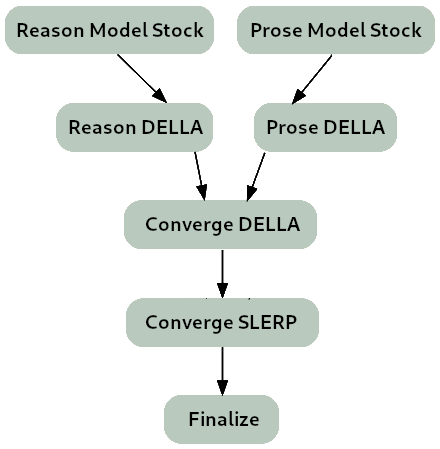

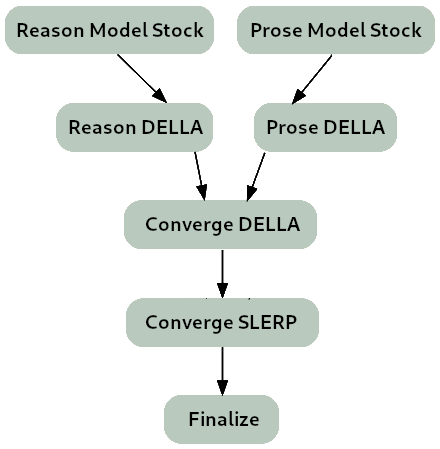

- Two model_stocks used to begin specialized branches for reasoning and prose quality.

|

| 25 |

- For refinement on Virtuoso as a base model, DELLA and SLERP include the model_stocks while re-emphasizing selected ancestors.

|

| 26 |

- For integration, a SLERP merge of Virtuoso with the converged branches.

|

| 27 |

-

- For finalization

|

| 28 |

|

| 29 |

### Ancestor Models:

|

| 30 |

|

|

@@ -36,6 +35,4 @@ Lamarck-14B version 0.3 is strongly based on [arcee-ai/Virtuoso-Small](https://h

|

|

| 36 |

|

| 37 |

- **[CultriX/Qwen2.5-14B-Wernicke](http://huggingface.co/CultriX/Qwen2.5-14B-Wernicke)** - A top performer for Arc and GPQA, Wernicke is re-emphasized in small but highly-ranked portions of the model.

|

| 38 |

|

| 39 |

-

- **[huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2](http://huggingface.co/huihui-ai/Qwen2.5-14B-Instruct-abliterated-v2)** - Merged with higher weight and density , both for re-instructing and abliterating effect

|

| 40 |

-

|

| 41 |

|

|

|

|

| 8 |

- arcee-ai/Virtuoso-Small

|

| 9 |

- CultriX/SeQwence-14B-EvolMerge

|

| 10 |

- CultriX/Qwen2.5-14B-Wernicke

|

|

|

|

| 11 |

- sometimesanotion/lamarck-14b-prose-model_stock

|

| 12 |

- sometimesanotion/lamarck-14b-if-model_stock

|

| 13 |

- sometimesanotion/lamarck-14b-reason-model_stock

|

|

|

|

| 23 |

- Two model_stocks used to begin specialized branches for reasoning and prose quality.

|

| 24 |

- For refinement on Virtuoso as a base model, DELLA and SLERP include the model_stocks while re-emphasizing selected ancestors.

|

| 25 |

- For integration, a SLERP merge of Virtuoso with the converged branches.

|

| 26 |

+

- For finalization, a TIES merge.

|

| 27 |

|

| 28 |

### Ancestor Models:

|

| 29 |

|

|

|

|

| 35 |

|

| 36 |

- **[CultriX/Qwen2.5-14B-Wernicke](http://huggingface.co/CultriX/Qwen2.5-14B-Wernicke)** - A top performer for Arc and GPQA, Wernicke is re-emphasized in small but highly-ranked portions of the model.

|

| 37 |

|

|

|

|

|

|

|

| 38 |

|
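This commit finishes the "For finalization" step as a TIES merge. The huihui-ai line it removes had described a model merged "with higher weight and density". To show roughly how those two knobs act in a TIES merge, here is a minimal sketch on plain Python lists. It follows the three TIES-Merging steps: trim each task vector to its top `density` fraction by magnitude, elect a per-coordinate sign, and take a disjoint mean over the models that agree with that sign. This is not the Lamarck recipe or mergekit's code. The function names, the per-model `densities`/`weights` lists, the weighted-mean variant (which reduces to the plain mean when all weights are equal), and the scaling factor `lam` are all assumptions made for illustration.

```python
from typing import List, Optional, Sequence

Vector = List[float]


def trim_to_density(tv: Sequence[float], density: float) -> Vector:
    """Keep the largest-magnitude `density` fraction of entries; zero the rest."""
    k = int(round(len(tv) * density))
    ranked = sorted(range(len(tv)), key=lambda i: abs(tv[i]), reverse=True)
    keep = set(ranked[:k])
    return [float(x) if i in keep else 0.0 for i, x in enumerate(tv)]


def ties_merge(
    base: Sequence[float],
    finetuned: Sequence[Sequence[float]],
    densities: Sequence[float],
    weights: Optional[Sequence[float]] = None,  # assumed positive
    lam: float = 1.0,  # hypothetical scale applied to the merged task vector
) -> Vector:
    if weights is None:
        weights = [1.0] * len(finetuned)
    # Step 1: task vectors (fine-tuned minus base), each trimmed to its own density.
    trimmed = [
        trim_to_density([f - b for f, b in zip(ft, base)], d)
        for ft, d in zip(finetuned, densities)
    ]
    merged: Vector = []
    for j, b in enumerate(base):
        # Step 2: elect the sign of the weighted sum at this coordinate.
        total = sum(w * tv[j] for w, tv in zip(weights, trimmed))
        if total == 0:
            merged.append(float(b))  # no net update survives here
            continue
        # Step 3: weighted mean over nonzero entries that agree with the elected sign.
        agree = [
            (w, tv[j])
            for w, tv in zip(weights, trimmed)
            if tv[j] != 0 and (tv[j] > 0) == (total > 0)
        ]
        wsum = sum(w for w, _ in agree)
        delta = sum(w * x for w, x in agree) / wsum
        merged.append(b + lam * delta)
    return merged


base = [0.0, 0.0, 0.0, 0.0]
model_a = [1.0, -2.0, 0.1, 3.0]
model_b = [2.0, 1.0, -0.2, 0.5]
print(ties_merge(base, [model_a, model_b], densities=[0.5, 0.5]))
print(ties_merge(base, [model_a, model_b], densities=[0.5, 0.5], weights=[1.0, 3.0]))
```

With equal weights, coordinate 1 elects a negative sign, so model B's `+1.0` is discarded. Tripling B's weight flips the election at that coordinate, so B's update wins instead. This is one way a higher weight can let a single ancestor dominate the coordinates it shares with others.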