
Lamarck-14B is the product of a multi-stage merge that emphasizes arcee-ai/Virtuoso-Small in the early and finishing layers, places strong emphasis on reasoning in the middle layers, and ends balanced somewhat toward Virtuoso again.
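The layer-wise emphasis described above can be pictured as a curve giving Virtuoso's share of each layer. The sketch below is purely illustrative: the anchor values are hypothetical (not Lamarck's actual settings), `layer_schedule` is a made-up helper, and the 48-layer count is an assumption based on the Qwen2.5-14B architecture. It linearly interpolates a few evenly spaced anchors across all layers, similar in spirit to the gradient lists mergekit accepts for parameters such as SLERP's `t`.

```python
def layer_schedule(anchors, num_layers):
    """Linearly interpolate evenly spaced anchor values across num_layers layers."""
    if num_layers < 1 or not anchors:
        raise ValueError("need at least one layer and one anchor")
    if len(anchors) == 1 or num_layers == 1:
        return [float(anchors[0])] * num_layers
    segments = len(anchors) - 1
    weights = []
    for i in range(num_layers):
        pos = i * segments / (num_layers - 1)  # position along the anchor list
        lo = min(int(pos), segments - 1)       # index of the left anchor
        frac = pos - lo                        # distance past the left anchor
        weights.append(anchors[lo] * (1 - frac) + anchors[lo + 1] * frac)
    return weights


if __name__ == "__main__":
    # Hypothetical Virtuoso share: high at the start, lower mid-stack,
    # then leaning back toward Virtuoso in the final layers.
    virtuoso_share = layer_schedule([1.0, 0.3, 0.6], num_layers=48)
    for layer in (0, 1, 24, 47):
        print(layer, round(virtuoso_share[layer], 3))
```

A real merge would likely use more anchors (or per-module filters) to pin the first layers almost entirely to Virtuoso; three anchors keep the shape easy to read.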

For GGUFs, mradermacher/Lamarck-14B-v0.3-i1-GGUF has you covered. Thank you @mradermacher!

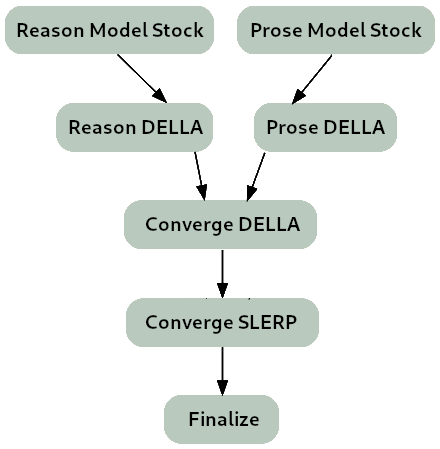

The merge strategy of Lamarck 0.3 can be summarized as:

- Two model_stocks commence specialized branches for reasoning and prose quality.

- To refine both model_stocks, DELLA merges re-emphasize selected ancestors.

- For smooth instruction following, a SLERP merge combines Virtuoso with a DELLA merge of the two branches, in which reasoning and prose quality are balanced.

- For finalization and normalization, a TIES merge is applied.
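The SLERP and TIES steps can be illustrated on flattened weight vectors. This is a minimal pure-Python sketch that assumes plain lists of floats as stand-ins for tensors: `slerp` is standard spherical linear interpolation, and `ties_merge` follows the trim / elect-sign / disjoint-mean recipe of TIES-Merging. The function names and the `density` and `scale` parameters are hypothetical; mergekit's own implementations work per tensor and add options (per-model weights, normalization) not shown here. DELLA is left out; roughly speaking, it replaces the magnitude trim with magnitude-aware random dropping and rescaling before a similar sign election.

```python
import math


def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two weight vectors (t=0 -> v0, t=1 -> v1)."""
    n0 = math.sqrt(sum(x * x for x in v0))
    n1 = math.sqrt(sum(x * x for x in v1))
    cos_omega = sum(a * b for a, b in zip(v0, v1)) / max(n0 * n1, eps)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))  # angle between the vectors
    sin_omega = math.sin(omega)
    if sin_omega < 1e-6:
        # Nearly (anti)parallel vectors: fall back to plain linear interpolation
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    s0 = math.sin((1 - t) * omega) / sin_omega
    s1 = math.sin(t * omega) / sin_omega
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


def ties_merge(base, models, density=0.5, scale=1.0):
    """TIES-style merge of fine-tuned vectors that share a common base vector."""
    # Task vectors: each model's offset from the base
    deltas = [[m - b for m, b in zip(model, base)] for model in models]

    # 1. Trim: keep roughly the top `density` fraction of each task vector by
    #    magnitude (values tied with the threshold are all kept)
    trimmed = []
    for d in deltas:
        keep = max(1, round(density * len(d)))
        threshold = sorted((abs(x) for x in d), reverse=True)[keep - 1]
        trimmed.append([x if abs(x) >= threshold else 0.0 for x in d])

    merged = []
    for j, b in enumerate(base):
        column = [d[j] for d in trimmed]
        # 2. Elect a sign per parameter from the summed trimmed values
        sign = 1.0 if sum(column) >= 0 else -1.0
        # 3. Disjoint mean: average only nonzero values that agree with that sign
        agreeing = [x for x in column if x != 0.0 and x * sign > 0]
        delta = sum(agreeing) / len(agreeing) if agreeing else 0.0
        merged.append(b + scale * delta)
    return merged


if __name__ == "__main__":
    print([round(x, 4) for x in slerp(0.5, [1.0, 0.0], [0.0, 1.0])])  # [0.7071, 0.7071]
    base = [0.0, 0.0, 0.0, 0.0]
    models = [[1.0, -2.0, 0.1, 0.0], [2.0, 1.0, -0.1, 0.0]]
    print(ties_merge(base, models))  # [1.5, -2.0, 0.0, 0.0]
```

SLERP follows the arc between the two vectors rather than the straight line, so the midpoint of two unit vectors keeps unit norm; TIES discards small and conflicting updates so that the surviving ones do not cancel each other out.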

Thanks go to:

- @arcee-ai's team for the ever-capable mergekit, and the exceptional Virtuoso Small model.

- @CultriX for the helpful examples of memory-efficient sliced merges and evolutionary merging. Their contribution of tinyevals on version 0.1 of Lamarck did much to validate the hypotheses of the DELLA->SLERP gradient process used here.

- The authors behind the capable models that appear in the model_stock.

Models Merged

Top influences: These ancestors are base models present in the model_stocks, and they are also heavily re-emphasized in the DELLA and SLERP merges.

arcee-ai/Virtuoso-Small - A brand-new model from Arcee, refined from the notable cross-architecture Llama-to-Qwen distillation arcee-ai/SuperNova-Medius. The first two layers are nearly exclusively from Virtuoso. It has proven to be a well-rounded performer and contributes a noticeable boost to the model's prose quality.

CultriX/SeQwence-14B-EvolMerge - A top contender on reasoning benchmarks.

Reason: While Virtuoso is the strongest influence on the starting and ending layers, the reasoning mo…

CultriX/Qwen2.5-14B-Wernicke - A top performer on ARC and GPQA, Wernicke is re-emphasized in small but highly ranked portions of the model.

VAGOsolutions/SauerkrautLM-v2-14b-DPO - This model's influence is understated, but it aids BBH and coding capability.

Prose: While the prose module is applied gently, its impact on Lamarck 0.3's prose quality is noticeable, and a DELLA merge re-emphasizes the contributions of two models in particular:

Model stock: Two model_stock merges, specialized for specific aspects of performance, are used to mildly influence a large range of the model.

sometimesanotion/lamarck-14b-prose-model_stock - This brings in a little influence from EVA-UNIT-01/EVA-Qwen2.5-14B-v0.2, oxyapi/oxy-1-small, and allura-org/TQ2.5-14B-Sugarquill-v1.
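For readers unfamiliar with model_stock, the sketch below shows the core interpolation as described in the Model Stock paper, using plain Python lists as stand-ins for tensors. The function names are hypothetical, mergekit's `model_stock` method works per tensor and may differ in details such as how the angle is averaged, and clamping negative similarity to zero is an assumption of this sketch. The merged weights move from the base toward the average of the fine-tuned models in proportion to how well their offsets from the base agree.

```python
import math


def _cosine(a, b, eps=1e-8):
    """Cosine similarity of two vectors, clamped to [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return max(-1.0, min(1.0, dot / max(na * nb, eps)))


def model_stock(base, models):
    """Return (merged weights, t) interpolating between the model average and the base."""
    k = len(models)
    if k < 2:
        raise ValueError("model_stock needs at least two fine-tuned models")
    # Task vectors: each fine-tuned model's offset from the shared base
    offsets = [[m - b for m, b in zip(model, base)] for model in models]
    # Average pairwise cosine similarity between the offsets
    pairs = [(i, j) for i in range(k) for j in range(i + 1, k)]
    cos_theta = sum(_cosine(offsets[i], offsets[j]) for i, j in pairs) / len(pairs)
    # Assumption of this sketch: negative agreement is treated as none (stay at base)
    cos_theta = max(0.0, cos_theta)
    # Interpolation ratio t = k*cos / (1 + (k-1)*cos)
    t = k * cos_theta / (1 + (k - 1) * cos_theta)
    w_avg = [sum(col) / k for col in zip(*models)]
    return [t * a + (1 - t) * b for a, b in zip(w_avg, base)], t


if __name__ == "__main__":
    merged, t = model_stock([0.0, 0.0], [[1.0, 0.0], [1.0, 1.0]])
    print(round(t, 4), [round(x, 4) for x in merged])
```

When the offsets are orthogonal, `t` falls to 0 and the result stays at the base; when they coincide, `t` reaches 1 and the result equals their average. This is consistent with using model_stock for a mild, broad influence rather than a strong pull toward any one model.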

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

| Metric | Value |
|---|---|
| Avg. | 36.58 |
| IFEval (0-shot) | 50.32 |
| BBH (3-shot) | 51.27 |
| MATH Lvl 5 (4-shot) | 32.40 |
| GPQA (0-shot) | 18.46 |
| MuSR (0-shot) | 18.00 |
| MMLU-PRO (5-shot) | 49.01 |
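The Avg. row matches the unweighted mean of the six benchmark scores, which is easy to confirm:

```python
# Open LLM Leaderboard scores as listed in the table
scores = {
    "IFEval (0-shot)": 50.32,
    "BBH (3-shot)": 51.27,
    "MATH Lvl 5 (4-shot)": 32.40,
    "GPQA (0-shot)": 18.46,
    "MuSR (0-shot)": 18.00,
    "MMLU-PRO (5-shot)": 49.01,
}

# Unweighted mean of the six benchmark scores
avg = sum(scores.values()) / len(scores)
print(f"Avg. = {avg:.2f}")  # Avg. = 36.58
```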
