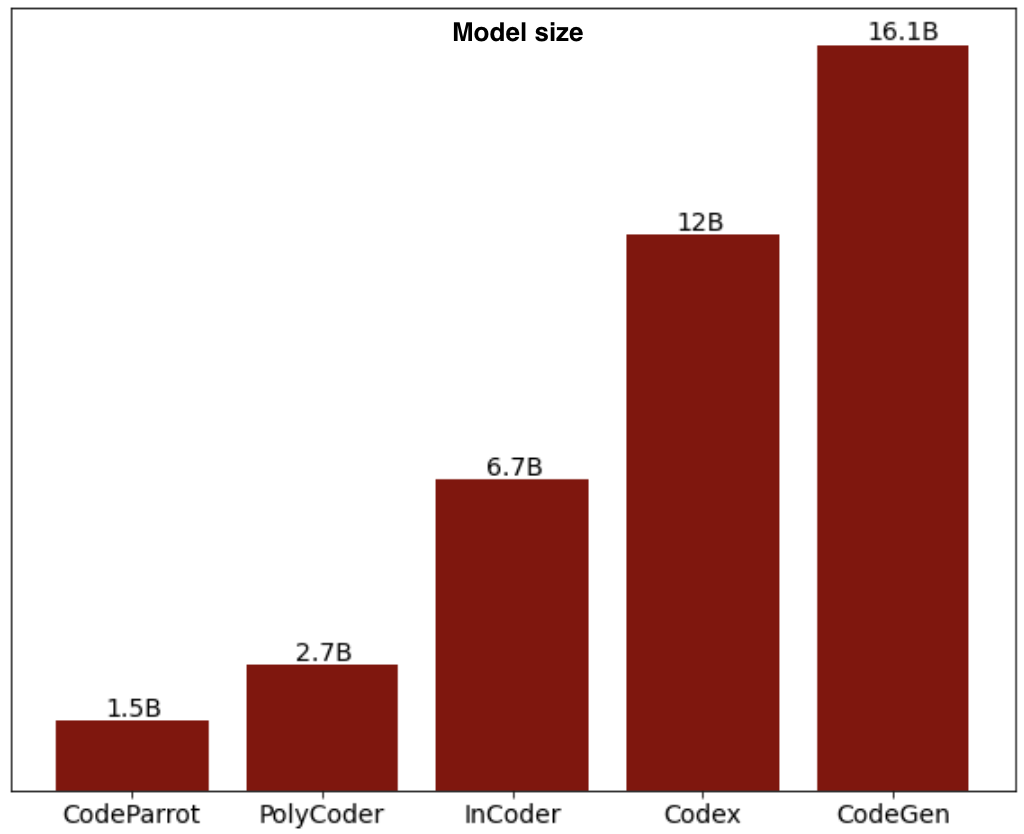

Code generation models use a variety of architectures, but most are auto-regressive, left-to-right decoders in the style of GPT. InCoder instead uses a decoder-only Transformer trained with a Causal Masking objective, which combines next-token prediction with bidirectional context by masking spans of the input. AlphaCode uses an encoder-decoder architecture.
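The core idea of a causal masking objective can be sketched as a data transformation. A span is cut out of the sequence and replaced by a sentinel. The span is then appended at the end, after a second copy of the sentinel. A plain left-to-right model trained on the result sees the code to the right of the gap before it generates the missing span. This is a minimal sketch under stated assumptions: input is already tokenized, only one span is masked (the published objective can mask several), and the sentinel strings `<mask:0>` and `<eom>` as well as the function names are illustrative. They are not InCoder's actual tokenizer or code.

```python
from typing import List

# Illustrative sentinel tokens (assumed names, not InCoder's real vocabulary).
MASK = "<mask:0>"  # marks where the span was removed, and where it is replayed
EOM = "<eom>"      # marks the end of the replayed span


def infill_prompt(left: List[str], right: List[str]) -> List[str]:
    """Build the prefix a left-to-right model conditions on to fill a gap.

    The right-hand context comes *before* the position where the model starts
    generating, so generation can use context on both sides of the gap.
    """
    return left + [MASK] + right + [MASK]


def causal_mask_transform(tokens: List[str], start: int, end: int) -> List[str]:
    """Turn a training sequence into its causally masked form.

    tokens[start:end] is replaced by MASK in place and moved to the end of the
    sequence, followed by EOM. Ordinary next-token prediction on the output
    therefore teaches the model to infill using both left and right context.
    """
    if not 0 <= start < end <= len(tokens):
        raise ValueError("span must be non-empty and lie inside the sequence")
    left, span, right = tokens[:start], tokens[start:end], tokens[end:]
    return infill_prompt(left, right) + span + [EOM]


if __name__ == "__main__":
    toks = ["def", "add", "(", "a", ",", "b", ")", ":", "return", "a", "+", "b"]
    # Mask the parameter list "a , b".
    print(causal_mask_transform(toks, 3, 6))
```

Masking the parameter list of `def add(a, b): return a + b` produces a sequence in which `return a + b` appears before the model has to generate `a , b`. At inference time, the same `infill_prompt` prefix is given and generation stops at `<eom>`.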

For model-specific information about each architecture, please select a model below: