description: Learn how to set up LLM Studio.

import Tabs from "@theme/Tabs"; import TabItem from "@theme/TabItem";

Set up H2O LLM Studio

Prerequisites

H2O LLM Studio has the following minimum requirements:

- A machine running Ubuntu 16.04+ with at least one recent Nvidia GPU

- At least 128GB of system RAM. Larger models and complex tasks may require 256GB or more.

- Nvidia drivers v470.57.02 or a later version

- Access to the following URLs:

  - developer.download.nvidia.com

  - pypi.org

  - huggingface.co

  - download.pytorch.org

  - cdn-lfs.huggingface.co

:::info Notes

- At least 24GB of GPU memory is recommended for larger models.

- For more information on performance benchmarks based on the hardware setup, see H2O LLM Studio performance.

- The required URLs are accessible by default when you start a GCP instance. However, if you have network rules or custom firewalls in place, confirm that the URLs are accessible before running make setup.

:::
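The driver and network prerequisites above can be checked before running make setup. The following is a minimal sketch, not part of H2O LLM Studio: the names version_ge, check_driver, and check_hosts are hypothetical, and it assumes that curl, awk, and nvidia-smi are installed. A host counts as reachable when an HTTPS connection succeeds, regardless of the HTTP status code returned.

```shell
# Hypothetical preflight helpers; not shipped with H2O LLM Studio.
MIN_DRIVER="470.57.02"  # minimum driver version from the prerequisites
REQUIRED_HOSTS="developer.download.nvidia.com pypi.org huggingface.co download.pytorch.org cdn-lfs.huggingface.co"

# Succeed if dotted version $1 is greater than or equal to dotted version $2.
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    n = split(a, x, "."); m = split(b, y, ".")
    max = (n > m) ? n : m
    for (i = 1; i <= max; i++) {
      if ((x[i] + 0) > (y[i] + 0)) exit 0
      if ((x[i] + 0) < (y[i] + 0)) exit 1
    }
    exit 0
  }'
}

# Compare the driver version passed as $1 against MIN_DRIVER.
check_driver() {
  if version_ge "$1" "$MIN_DRIVER"; then
    echo "ok: driver $1"
  else
    echo "FAIL: driver '$1' is missing or older than $MIN_DRIVER"
    return 1
  fi
}

# Try an HTTPS HEAD request against every required host.
check_hosts() {
  failed=0
  for host in $REQUIRED_HOSTS; do
    if curl --silent --head --output /dev/null --max-time 10 "https://$host"; then
      echo "ok: $host"
    else
      echo "FAIL: cannot reach $host"
      failed=1
    fi
  done
  return "$failed"
}

# Example invocation (commented out because it needs a GPU and network access):
# check_driver "$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)" && check_hosts
```

Both functions return a non-zero status on failure, so they can gate a later make setup call in a provisioning script.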

Installation

:::note Installation methods

The recommended way to install H2O LLM Studio is using pipenv with Python 3.10. To install Python 3.10 on Ubuntu 16.04+, execute the following commands.

System installs (Python 3.10)

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt install python3.10

sudo apt-get install python3.10-distutils

curl -sS https://bootstrap.pypa.io/get-pip.py | python3.10

Install NVIDIA drivers (if required)

If you are deploying on a 'bare metal' machine running Ubuntu, you may need to install the required Nvidia drivers and CUDA. The following commands show how to retrieve the latest drivers for a machine running Ubuntu 20.04 as an example. Adjust them for your operating system as needed.

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/cuda-ubuntu2004.pin

sudo mv cuda-ubuntu2004.pin /etc/apt/preferences.d/cuda-repository-pin-600

wget https://developer.download.nvidia.com/compute/cuda/11.4.3/local_installers/cuda-repo-ubuntu2004-11-4-local_11.4.3-470.82.01-1_amd64.deb

sudo dpkg -i cuda-repo-ubuntu2004-11-4-local_11.4.3-470.82.01-1_amd64.deb

sudo apt-key add /var/cuda-repo-ubuntu2004-11-4-local/7fa2af80.pub

sudo apt-get -y update

sudo apt-get -y install cuda

Create virtual environment (pipenv)

The following command creates a virtual environment using pipenv and installs the dependencies into it.

make setup

If you wish to use conda or another virtual environment, you can also install the dependencies using the requirements.txt file.

pip install -r requirements.txt

Follow the steps below to install H2O LLM Studio on a Windows machine using Windows Subsystem for Linux (WSL2).

1. Download the latest Nvidia driver for Windows.

2. Open PowerShell or a Windows Command Prompt window in administrator mode.

3. Run the following command to confirm that the driver is installed properly and see the driver version.

nvidia-smi

4. Run the following command to install WSL2.

wsl --install

5. Launch the WSL2 Ubuntu installation.

6. Install the WSL2 Nvidia CUDA drivers.

wget https://developer.download.nvidia.com/compute/cuda/repos/wsl-ubuntu/x86_64/cuda-wsl-ubuntu.pin

sudo mv cuda-wsl-ubuntu.pin /etc/apt/preferences.d/cuda-repository-pin-600

wget https://developer.download.nvidia.com/compute/cuda/12.2.0/local_installers/cuda-repo-wsl-ubuntu-12-2-local_12.2.0-1_amd64.deb

sudo dpkg -i cuda-repo-wsl-ubuntu-12-2-local_12.2.0-1_amd64.deb

sudo cp /var/cuda-repo-wsl-ubuntu-12-2-local/cuda-*-keyring.gpg /usr/share/keyrings/

sudo apt-get update

sudo apt-get -y install cuda

7. Set up the required Python system installs (Python 3.10).

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt install python3.10

sudo apt-get install python3.10-distutils

curl -sS https://bootstrap.pypa.io/get-pip.py | python3.10

8. Create the virtual environment.

sudo apt install -y python3.10-venv

python3.10 -m venv llmstudio

source llmstudio/bin/activate

9. Clone the H2O LLM Studio repository locally.

git clone https://github.com/h2oai/h2o-llmstudio.git

cd h2o-llmstudio

10. Install H2O LLM Studio using the `requirements.txt`.

pip install -r requirements.txt

11. Run the H2O LLM Studio application.

H2O_WAVE_MAX_REQUEST_SIZE=25MB \
  H2O_WAVE_NO_LOG=True \
  H2O_WAVE_PRIVATE_DIR="/download/@output/download" \
  wave run app

This will start the H2O Wave server and the H2O LLM Studio app. Navigate to http://localhost:10101/ (we recommend using Chrome) to access H2O LLM Studio and start fine-tuning your models.

:::

Install custom package

If required, you can install additional Python packages into your environment. This can be done using pip after activating your virtual environment via make shell. For example, to install flash-attention, you would use the following commands:

make shell

pip install flash-attn --no-build-isolation

pip install git+https://github.com/HazyResearch/flash-attention.git#subdirectory=csrc/rotary

Alternatively, you can also directly install the custom package by running the following command.

pipenv install package_name

Run H2O LLM Studio

There are several ways to run H2O LLM Studio depending on your requirements.

- Run H2O LLM Studio GUI

- Run using Docker from a nightly build

- Run by building your own Docker image

- Run with the CLI (command-line interface)

Run H2O LLM Studio GUI

Run the following command to start H2O LLM Studio.

make llmstudio

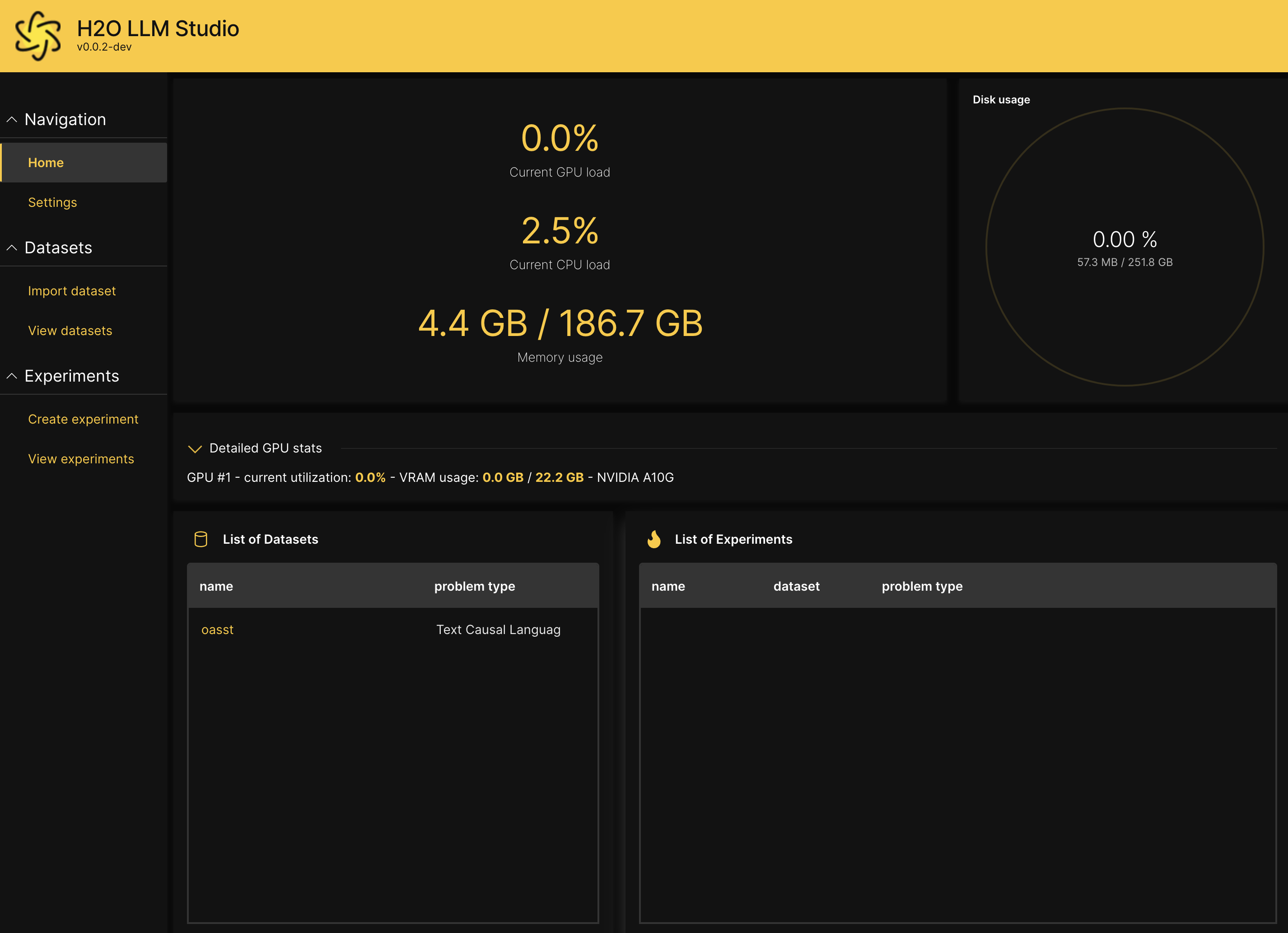

This will start the H2O Wave server and the H2O LLM Studio app. Navigate to http://localhost:10101/ (we recommend using Chrome) to access H2O LLM Studio and start fine-tuning your models.

If you are running H2O LLM Studio with a custom environment other than Pipenv, start the app as follows:

H2O_WAVE_APP_ADDRESS=http://127.0.0.1:8756 \
  H2O_WAVE_MAX_REQUEST_SIZE=25MB \
  H2O_WAVE_NO_LOG=True \
  H2O_WAVE_PRIVATE_DIR="/download/@output/download" \
  wave run app

Run using Docker from a nightly build

First, install Docker by following the instructions from the NVIDIA Container Installation Guide. H2O LLM Studio images are stored in the h2oai GCR vorvan container repository.

mkdir -p `pwd`/data

mkdir -p `pwd`/output

docker run \
  --runtime=nvidia \
  --shm-size=64g \
  --init \
  --rm \
  -p 10101:10101 \
  -v `pwd`/data:/workspace/data \
  -v `pwd`/output:/workspace/output \
  -v ~/.cache:/home/llmstudio/.cache \
  gcr.io/vorvan/h2oai/h2o-llmstudio:nightly

Navigate to http://localhost:10101/ (we recommend using Chrome) to access H2O LLM Studio and start fine-tuning your models.

:::info

Other helpful docker commands are docker ps and docker kill.

:::
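For instance, the two commands can be combined to stop a running H2O LLM Studio container. This is a sketch only: stop_llmstudio is a hypothetical helper name, and it assumes the container was started from the nightly image shown above. Pass a different image name as the first argument if you built your own.

```shell
# Hypothetical helper; not part of H2O LLM Studio.
IMAGE="gcr.io/vorvan/h2oai/h2o-llmstudio:nightly"

# Kill every running container that was started from the given image.
stop_llmstudio() {
  # docker ps --quiet prints only container IDs; the ancestor filter matches by image.
  ids="$(docker ps --quiet --filter "ancestor=${1:-$IMAGE}")"
  if [ -z "$ids" ]; then
    echo "no running container found"
    return 0
  fi
  # Word splitting is intentional here: each ID becomes its own argument.
  docker kill $ids
}
```

Because the container is started with --rm, killing it also removes it; the data and output directories mounted from the host are left in place.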

Run by building your own Docker image

docker build -t h2o-llmstudio .

docker run \
  --runtime=nvidia \
  --shm-size=64g \
  --init \
  --rm \
  -p 10101:10101 \
  -v `pwd`/data:/workspace/data \
  -v `pwd`/output:/workspace/output \
  -v ~/.cache:/home/llmstudio/.cache \
  h2o-llmstudio

Run with command line interface (CLI)

You can also use H2O LLM Studio with the command line interface (CLI) and specify the configuration .yaml file that contains all the experiment parameters. To fine-tune using H2O LLM Studio with the CLI, activate the pipenv environment by running make shell.

To specify the path to the configuration file that contains the experiment parameters, run:

python train.py -Y {path_to_config_yaml_file}

To run on multiple GPUs in DDP mode, run:

bash distributed_train.sh {NR_OF_GPUS} -Y {path_to_config_yaml_file}

:::info

By default, the framework will run on the first k GPUs. If you want to specify specific GPUs to run on, use the CUDA_VISIBLE_DEVICES environment variable before the command.

:::
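As an illustration of the note above, the sketch below builds a DDP command line restricted to specific GPUs. The helper names count_gpus and ddp_command, the GPU IDs, and the config path are hypothetical. It assumes you want NR_OF_GPUS to equal the number of devices listed in CUDA_VISIBLE_DEVICES, and it only prints the command so that you can review it before running it.

```shell
# Hypothetical helpers; not part of H2O LLM Studio.

# Count the entries in a comma-separated GPU list such as "1,3".
count_gpus() {
  printf '%s\n' "$1" | awk -F, '{ print NF }'
}

# Print (but do not run) the DDP command for the given GPU list and config file.
ddp_command() {
  gpus="$1"
  config="$2"
  echo "CUDA_VISIBLE_DEVICES=$gpus bash distributed_train.sh $(count_gpus "$gpus") -Y $config"
}

# Example: GPUs 1 and 3 with a placeholder config path.
ddp_command "1,3" "path/to/config.yaml"
```

With the example values, the script prints `CUDA_VISIBLE_DEVICES=1,3 bash distributed_train.sh 2 -Y path/to/config.yaml`.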

To start an interactive chat with your trained model, run:

python prompt.py -e {experiment_name}

experiment_name is the output folder of the experiment you want to chat with. The interactive chat will also work with models that were fine-tuned using the GUI.