from __future__ import annotations

import argparse
import pathlib
import torch
import gradio as gr

from vtoonify_model import Model


def parse_args() -> argparse.Namespace:
    parser = argparse.ArgumentParser()
    parser.add_argument('--device', type=str, default='cpu')
    parser.add_argument('--theme', type=str)
    parser.add_argument('--share', action='store_true')
    parser.add_argument('--port', type=int)
    # --disable-queue stores False into args.enable_queue (which defaults to True).
    parser.add_argument('--disable-queue',
                        dest='enable_queue',
                        action='store_false')
    return parser.parse_args()


DESCRIPTION = '''
<div align=center>
<h1 style="font-weight: 900; margin-bottom: 7px;">
Toonify people in videos with <a href="https://github.com/williamyang1991/VToonify">VToonify</a>
</h1>

<font color=red><h2 style="font-weight: 900; margin-bottom: 7px;">
This page is meant to help users who are less comfortable with English learn how to use VToonify. It runs on CPU, so processing is slow; please use the <a href="https://huggingface.co/spaces/PKUWilliamYang/VToonify">original VToonify</a> for a speedup of tens of times.
</h2></font>
</div>
'''
FOOTER = '<div align=center><img id="visitor-badge" alt="visitor badge" src="https://visitor-badge.laobi.icu/badge?page_id=williamyang1991/VToonify" /></div>'


ARTICLE = r"""
If VToonify is helpful to you, please give the <a href='https://github.com/williamyang1991/VToonify' target='_blank'>Github Repo</a> a ⭐. Thanks!
---
<h2 style="font-weight: 900; margin-bottom: 7px;">Click <a href='https://www.toolchest.cn' target='_blank'>Back to the Smart Toolbox</a> to see more fun AI projects</h2>

📝 **Citation**
If our work is useful for your research, please consider citing:
```bibtex
@article{yang2022Vtoonify,
  title={VToonify: Controllable High-Resolution Portrait Video Style Transfer},
  author={Yang, Shuai and Jiang, Liming and Liu, Ziwei and Loy, Chen Change},
  journal={ACM Transactions on Graphics (TOG)},
  volume={41},
  number={6},
  articleno={203},
  pages={1--15},
  year={2022},
  publisher={ACM New York, NY, USA},
  doi={10.1145/3550454.3555437},
}
```

📋 **License**
This project is licensed under <a rel="license" href="https://github.com/williamyang1991/VToonify/blob/main/LICENSE.md">S-Lab License 1.0</a>.
Redistribution and use for non-commercial purposes should follow this license.

📧 **Contact**
If you have any questions, please feel free to reach out to me at <b>williamyang@pku.edu.cn</b>.
"""


def update_slider(choice: str) -> dict:
    if isinstance(choice, str) and choice.endswith('-d'):
        # Styles ending in '-d' support an adjustable degree: expose the full 0..1 range.
        return gr.Slider.update(maximum=1, minimum=0, value=0.5)
    else:
        # Other styles use a fixed degree: pin the slider at 0.5.
        return gr.Slider.update(maximum=0.5, minimum=0.5, value=0.5)


def set_example_image(example: list) -> dict:
    return gr.Image.update(value=example[0])


def set_example_video(example: list) -> dict:
    # A trailing comma here previously turned the return value into a 1-tuple.
    return gr.Video.update(value=example[0])


sample_video = [
    './vtoonify/data/529_2.mp4',
    './vtoonify/data/7154235.mp4',
    './vtoonify/data/651.mp4',
    './vtoonify/data/908.mp4',
]
sample_vid = gr.Video(label='Video file')
example_videos = gr.components.Dataset(components=[sample_vid],
                                       samples=[[path] for path in sample_video],
                                       type='values',
                                       label='Video Examples')


def main():
    args = parse_args()
    # The --device flag is overridden here: CUDA is used whenever it is available.
    args.device = 'cuda' if torch.cuda.is_available() else 'cpu'
    print(f'*** Now using {args.device}.')
    model = Model(device=args.device)

    with gr.Blocks(theme=args.theme, css='style.css') as demo:

        gr.Markdown(DESCRIPTION)

with gr.Box(): |

|

gr.Markdown('''## 第1步(选择卡通类型) |

|

- 选择 **卡通类型**. |

|

- 带有 `-d` 表示它可以调整卡通化的程度. |

|

- 不带 `-d` 通常会有更好的卡通效果. |

|

|

|

''') |

|

with gr.Row(): |

|

with gr.Column(): |

|

gr.Markdown('''选择类型''') |

|

with gr.Row(): |

|

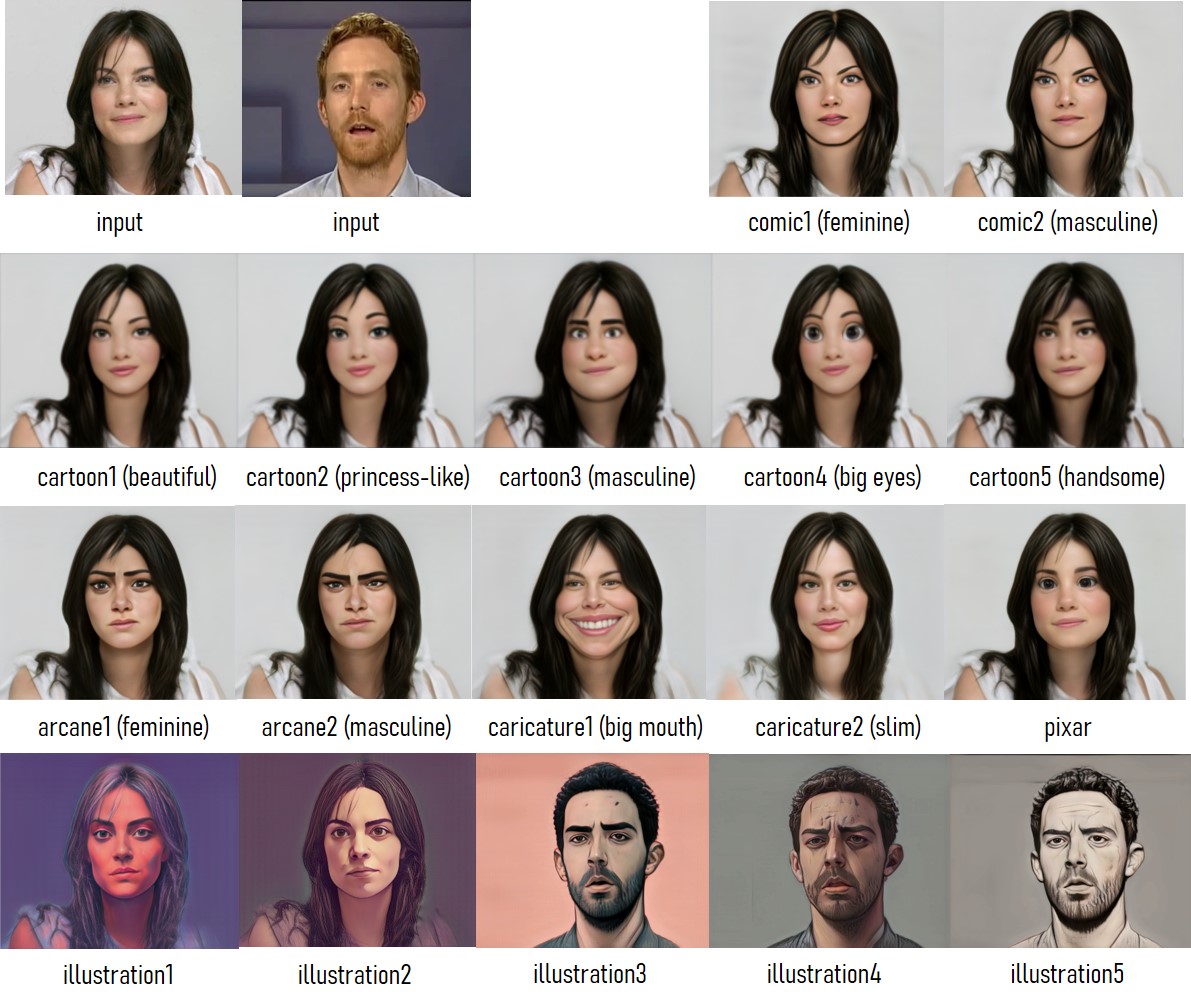

style_type = gr.Radio(label='Style Type', |

|

choices=['cartoon1','cartoon1-d','cartoon2-d','cartoon3-d', |

|

'cartoon4','cartoon4-d','cartoon5-d','comic1-d', |

|

'comic2-d','arcane1','arcane1-d','arcane2', 'arcane2-d', |

|

'caricature1','caricature2','pixar','pixar-d', |

|

'illustration1-d', 'illustration2-d', 'illustration3-d', 'illustration4-d', 'illustration5-d', |

|

] |

|

) |

|

exstyle = gr.Variable() |

|

with gr.Row(): |

|

loadmodel_button = gr.Button('加载模型') |

|

with gr.Row(): |

|

load_info = gr.Textbox(label='Process Information', interactive=False, value='No model loaded.') |

|

with gr.Column(): |

|

gr.Markdown('''类型参考 |

|

''') |

|

|

|

|

|

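        with gr.Box():
            gr.Markdown('''## Step 2 (Preprocess the Input Image / Video)
- Drag an image or video containing a face onto **Input Image**/**Input Video**.
- Click the **Rescale Image**/**Rescale First Frame** button.
    - Rescaling the input makes it fit the model better.
    - The final image result is based on the **Rescaled Face**. Use the padding parameters to adjust the background.
    - **<font color=red>If you see [Error: no face detected!]</font>**: VToonify did not detect a face. Adjust the input and try again, or use a different source image.
- For video input, then click the **Rescale Video** button.
    - The final video result is based on the **Rescaled Video**. To stay within the hardware's capacity, the video is cut to **100/300** frames for CPU/GPU, respectively.

''')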

with gr.Row(): |

|

with gr.Box(): |

|

with gr.Column(): |

|

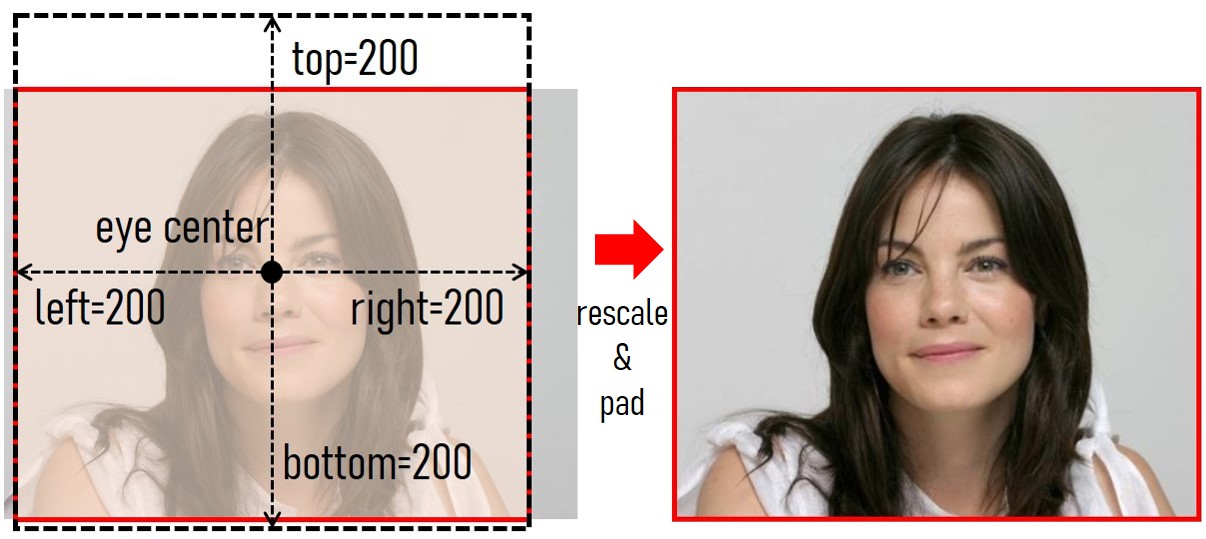

gr.Markdown('''调整边框距离参数. |

|

''') |

|

with gr.Row(): |

|

top = gr.Slider(128, |

|

256, |

|

value=200, |

|

step=8, |

|

label='上') |

|

with gr.Row(): |

|

bottom = gr.Slider(128, |

|

256, |

|

value=200, |

|

step=8, |

|

label='下') |

|

with gr.Row(): |

|

left = gr.Slider(128, |

|

256, |

|

value=200, |

|

step=8, |

|

label='左') |

|

with gr.Row(): |

|

right = gr.Slider(128, |

|

256, |

|

value=200, |

|

step=8, |

|

label='右') |

|

                with gr.Box():
                    with gr.Column():
                        gr.Markdown('''Input''')
                        with gr.Row():
                            input_image = gr.Image(label='Input Image',
                                                   type='filepath')
                        with gr.Row():
                            preprocess_image_button = gr.Button('Rescale Image')
                        with gr.Row():
                            input_video = gr.Video(label='Input Video',
                                                   mirror_webcam=False,
                                                   type='filepath')
                        with gr.Row():
                            preprocess_video0_button = gr.Button('Rescale First Frame')
                            preprocess_video1_button = gr.Button('Rescale Video')


                with gr.Box():
                    with gr.Column():
                        gr.Markdown('''View''')
                        with gr.Row():
                            input_info = gr.Textbox(label='Process Information', interactive=False, value='n.a.')
                        with gr.Row():
                            aligned_face = gr.Image(label='Rescaled Face',
                                                    type='numpy',
                                                    interactive=False)
                            instyle = gr.Variable()
                        with gr.Row():
                            aligned_video = gr.Video(label='Rescaled Video',
                                                     type='mp4',
                                                     interactive=False)

            with gr.Row():
                with gr.Column():
                    paths = [
                        './vtoonify/data/pexels-andrea-piacquadio-733872.jpg',
                        './vtoonify/data/i5R8hbZFDdc.jpg',
                        './vtoonify/data/yRpe13BHdKw.jpg',
                        './vtoonify/data/ILip77SbmOE.jpg',
                        './vtoonify/data/077436.jpg',
                        './vtoonify/data/081680.jpg',
                    ]
                    example_images = gr.Dataset(components=[input_image],
                                                samples=[[path] for path in paths],
                                                label='Image Examples')
                with gr.Column():
                    # Render the module-level video example dataset inside this column.
                    example_videos.render()

                    # Copy the clicked sample's path into the input video component.
                    def load_examples(video):
                        return video[0]

                    example_videos.click(load_examples, example_videos, input_video)


        with gr.Box():
            gr.Markdown('''## Step 3 (Generate the Image / Video)''')
            with gr.Row():
                with gr.Column():
                    gr.Markdown('''

- Adjust the **Toonification Degree**.
- Click **Toonify Image!** to toonify the first frame. Click **Toonify Video!** to toonify the whole video.
- Estimated time for a 300-frame 1600x1440 video: 1 hour (CPU); 2 minutes (GPU).
''')
                    style_degree = gr.Slider(0,
                                             1,
                                             value=0.5,
                                             step=0.05,
                                             label='Toonification Degree')
                with gr.Column():
                    gr.Markdown('''
''')
            with gr.Row():
                output_info = gr.Textbox(label='Process Information', interactive=False, value='n.a.')
            with gr.Row():
                with gr.Column():
                    with gr.Row():
                        result_face = gr.Image(label='Image Result',
                                               type='numpy',
                                               interactive=False)
                    with gr.Row():
                        toonify_button = gr.Button('Toonify Image!')
                with gr.Column():
                    with gr.Row():
                        result_video = gr.Video(label='Video Result',
                                                type='mp4',
                                                interactive=False)
                    with gr.Row():
                        vtoonify_button = gr.Button('Toonify Video!')


        gr.Markdown(ARTICLE)
        gr.Markdown(FOOTER)

        # Step 1: load the selected style model.
        loadmodel_button.click(fn=model.load_model,
                               inputs=[style_type],
                               outputs=[exstyle, load_info])

        # Adjust the degree slider range whenever the style type changes.
        style_type.change(fn=update_slider,
                          inputs=style_type,
                          outputs=style_degree)

        # Step 2: detect and align the face in the image, first frame, or full video.
        preprocess_image_button.click(fn=model.detect_and_align_image,
                                      inputs=[input_image, top, bottom, left, right],
                                      outputs=[aligned_face, instyle, input_info])
        preprocess_video0_button.click(fn=model.detect_and_align_video,
                                       inputs=[input_video, top, bottom, left, right],
                                       outputs=[aligned_face, instyle, input_info])
        preprocess_video1_button.click(fn=model.detect_and_align_full_video,
                                       inputs=[input_video, top, bottom, left, right],
                                       outputs=[aligned_video, instyle, input_info])

        # Step 3: run style transfer on the aligned image or video.
        toonify_button.click(fn=model.image_toonify,
                             inputs=[aligned_face, instyle, exstyle, style_degree, style_type],
                             outputs=[result_face, output_info])
        # 'video_tooniy' is kept as spelled in the source; it has to match the
        # method name defined on Model in vtoonify_model, which is not shown here.
        vtoonify_button.click(fn=model.video_tooniy,
                              inputs=[aligned_video, instyle, exstyle, style_degree, style_type],
                              outputs=[result_video, output_info])

        example_images.click(fn=set_example_image,
                             inputs=example_images,
                             outputs=example_images.components)


    demo.launch(
        enable_queue=args.enable_queue,
        server_port=args.port,
        share=args.share,
    )


if __name__ == '__main__':
    main()