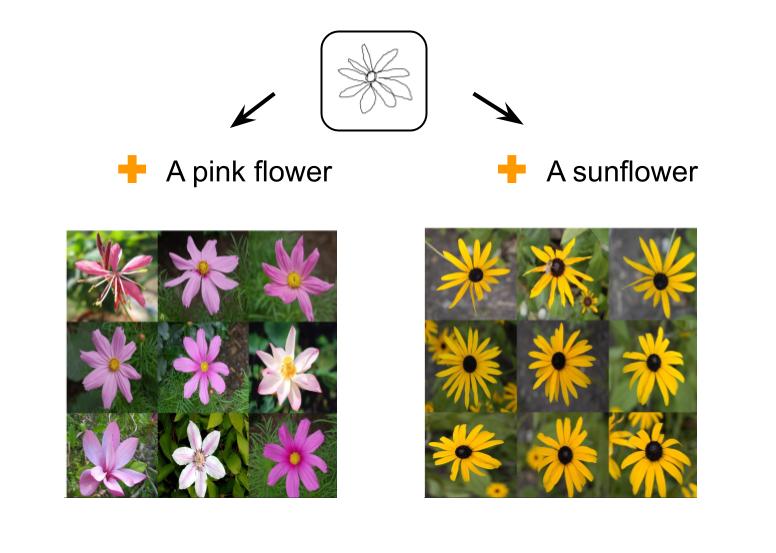

Image Retrieval with Text and Sketch

This code accompanies our ECCV 2022 paper, "A Sketch Is Worth a Thousand Words: Image Retrieval with Text and Sketch".

This repo is based on the open_clip implementation from https://github.com/mlfoundations/open_clip

Folder Structure

|---model/ : Contains the trained model*

|---sketches/ : Contains example query sketches

|---images/ : Contains 100 randomly sampled images from the COCO TBIR benchmark

|---notebooks/ : Contains the demo ipynb notebook

|---code/

    |---training/model_configs/ : Contains the model config file for the network

    |---clip/ : Contains the source code for running the notebook

*Needs to be downloaded first.
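Since the trained model has to be downloaded separately, it can help to check the layout before opening the notebook. The following is a minimal sketch, not part of this repo: the folder names come from the structure above, while the helper names `missing_dirs` and `model_downloaded` are hypothetical, and "downloaded" is assumed to mean only that `model/` is non-empty.

```python
from pathlib import Path

# Top-level folder names taken from the structure listed above.
EXPECTED_DIRS = ["model", "sketches", "images", "notebooks", "code"]


def missing_dirs(repo_root):
    """Return the expected top-level folders that are absent under repo_root."""
    root = Path(repo_root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


def model_downloaded(repo_root):
    """Return True if model/ exists and contains at least one entry.

    Assumption: any file in model/ counts; the actual file name is not checked.
    """
    model_dir = Path(repo_root) / "model"
    return model_dir.is_dir() and any(model_dir.iterdir())


if __name__ == "__main__":
    print("missing folders:", missing_dirs("."))
    print("model downloaded:", model_downloaded("."))
```

Run it from the repo root; an empty "missing folders" list and a true "model downloaded" value suggest the layout is ready for the demo notebook.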

Prerequisites

- Pytorch

- ftfy
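A quick way to confirm both prerequisites are installed is to look them up without importing them. This is a small sketch using only the standard library; `missing_modules` is a hypothetical helper name, and `torch` is the import name of PyTorch.

```python
import importlib.util

# "torch" is the import name of PyTorch; "ftfy" imports under its own name.
REQUIRED_MODULES = ("torch", "ftfy")


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Please install:", ", ".join(missing))
    else:
        print("All prerequisites found.")
```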

Getting Started

Simply open the Jupyter notebook `notebooks/Retrieval_Demo.ipynb` for an example of how to retrieve images using our model. You can use your own set of images and sketches by modifying the `images/` and `sketches/` folders accordingly.

A Colab version of the notebook is available [here].
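To give a feel for the retrieval step, here is a minimal sketch of ranking images against a query embedding by cosine similarity. It is not the notebook's code: it assumes the model has already produced one embedding for the sketch-plus-text query and one per image, and it uses plain Python lists with toy values in place of real tensors. The names `cosine_similarity` and `rank_images` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_images(query_embedding, image_embeddings, top_k=5):
    """Return up to top_k (image_name, score) pairs, best match first.

    image_embeddings maps an image file name to its embedding vector.
    """
    scored = [
        (name, cosine_similarity(query_embedding, emb))
        for name, emb in image_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy 2-D embeddings, for illustration only.
    images = {"a.jpg": [1.0, 0.0], "b.jpg": [0.0, 1.0], "c.jpg": [1.0, 1.0]}
    for name, score in rank_images([1.0, 0.1], images, top_k=2):
        print(name, round(score, 3))
```

In practice the embeddings would come from the model in the notebook and the similarity would be computed on PyTorch tensors in one batched operation; the ranking logic stays the same.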

Download Models

Citation

If you find this code useful for your research, please cite:

"A Sketch Is Worth a Thousand Words: Image Retrieval with Text and Sketch"

Patsorn Sangkloy, Wittawat Jitkrittum, Diyi Yang, James Hays in ECCV, 2022.

@article{tsbir2022,
  author = {Patsorn Sangkloy and Wittawat Jitkrittum and Diyi Yang and James Hays},
  title = {A Sketch is Worth a Thousand Words: Image Retrieval with Text and Sketch},
  journal = {European Conference on Computer Vision, ECCV},
  year = {2022},
}
