This repo contains a sparsity report for each of the pruned models in the table below.

Each report (CSV) shows layer-wise sparsity, sparsity per tile of 128x16, and sparsity per column and per row, where the column and row statistics are computed across the whole layer.
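To make the three granularities concrete, here is a minimal sketch of how tile, column, and row sparsity can be computed for one weight matrix. It is not the script that produced the reports: the function names (`sparsity`, `tile_report`, `summarize`) are hypothetical, it uses plain Python lists on a toy matrix instead of torch tensors, and it assumes the tile shape is given as (rows, columns) and divides the matrix evenly.

```python
from statistics import mean, median

def sparsity(values):
    """Fraction of entries that are exactly zero."""
    values = list(values)
    return sum(v == 0 for v in values) / len(values)

def tile_report(weight, tile_shape=(128, 16)):
    """Sparsity of every tile of a 2-D weight given as a list of rows.

    Hypothetical helper: assumes tile_shape is (rows, cols) and that
    both matrix dimensions are multiples of the tile shape.
    """
    th, tw = tile_shape
    n_rows, n_cols = len(weight), len(weight[0])
    assert n_rows % th == 0 and n_cols % tw == 0
    tiles = {}
    for ti in range(n_rows // th):
        for tj in range(n_cols // tw):
            block = [weight[r][c]
                     for r in range(ti * th, (ti + 1) * th)
                     for c in range(tj * tw, (tj + 1) * tw)]
            tiles[(ti, tj)] = sparsity(block)
    return tiles

def summarize(fractions):
    """avg / min / med / max, the four statistics the report lists."""
    fractions = list(fractions)
    return {"avg": mean(fractions), "min": min(fractions),
            "med": median(fractions), "max": max(fractions)}

# Toy 4x4 weight, tiled 2x2 so the result is easy to check by hand.
w = [[0.0, 1.0, 0.0, 0.0],
     [2.0, 0.0, 0.0, 0.0],
     [3.0, 4.0, 5.0, 0.0],
     [6.0, 7.0, 8.0, 9.0]]

layer_sparsity = sparsity(v for row in w for v in row)
tile_stats = summarize(tile_report(w, tile_shape=(2, 2)).values())
row_stats = summarize(sparsity(row) for row in w)
col_stats = summarize(sparsity(col) for col in zip(*w))
```

When the tiles are equally sized and cover the matrix, the tile, column, and row averages all equal the layer sparsity; only the min, median, and max differ, and those show how unevenly the zeros are distributed.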

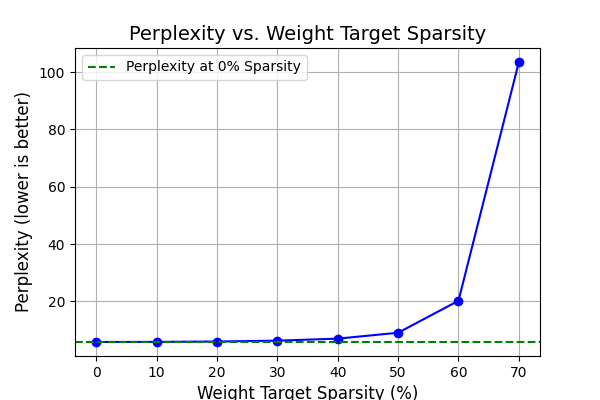

Pruning meta-llama/Meta-Llama-3.1-8B with Wanda

| Weight Target Sparsity (%) | Perplexity (lower is better) |
|---|---|
| 0 (dense, baseline) | 5.8393 |
| 10 | 5.8781 |
| 20 | 6.0102 |
| 30 | 6.3076 |
| 40 | 7.0094 |
| 50 | 9.0642 |
| 60 | 20.2265 |
| 70 | 103.5209 |
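As a quick way to read the table, the following sketch expresses each perplexity as a relative increase over the dense baseline. The numbers are copied from the table; the variable names are illustrative only.

```python
# Perplexity values copied from the table, keyed by target sparsity (%).
perplexity = {0: 5.8393, 10: 5.8781, 20: 6.0102, 30: 6.3076,
              40: 7.0094, 50: 9.0642, 60: 20.2265, 70: 103.5209}

baseline = perplexity[0]

# Relative increase over the dense model, e.g. 0.10 means +10%.
relative_increase = {s: ppl / baseline - 1.0 for s, ppl in perplexity.items()}

for s, inc in relative_increase.items():
    print(f"{s:>2}% sparsity: perplexity {perplexity[s]:>8.4f} ({inc:+.1%} vs dense)")
```

By this measure, 10% sparsity costs under 1% in perplexity, while 50% sparsity costs roughly 55%, and the degradation accelerates sharply beyond that.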

For a more granular sparsity report within a given tile, continue below.

Install

pip install torch ipython pandas

Interactive lookup of a specific tile of a layer

# make sure git lfs is installed on your machine

git clone https://huggingface.co/vuiseng9/24-0830-wanda-llama3.1-8B

cd 24-0830-wanda-llama3.1-8B

./interactive_sparsity.sh

The expected outcome is as follows: an IPython console with the needed functionality loaded.

$ ./interactive_sparsity.sh

Python 3.11.9 | packaged by conda-forge | (main, Apr 19 2024, 18:36:13) [GCC 12.3.0]

Type 'copyright', 'credits' or 'license' for more information

IPython 8.26.0 -- An enhanced Interactive Python. Type '?' for help.

- Help ------------------

h = SparseBlob("path to sparsity blob")

SparseBlob.preview:

preview sparsity dataframe, intend to show row id, short id for look up

eg. h.preview()

SparseBlob.ls_layers:

list all available layer ids for look up

eg. h.ls_layers()

SparseBlob.get_sparsity_by_short_id:

return a sparsity stats of a layer via short_id lookup.

eg. h.get_sparsity_by_short_id('tx.0.attn.v')

SparseBlob.get_sparsity_by_row_id:

return a sparsity stats of a layer via row id lookup.

eg. h.get_sparsity_by_row_id(36)

SparseBlob.get_sparsity_of_tile:

zoom into a specific layer and a specific tile,

return the sparsity stats of the tile down to col, row granularity

eg. h.get_sparsity_by_row_id(36, (5, 6))

SparseBlob.show_help:

print help for available function of SparseBlob

eg. h.show_help()

- End of Help ------------------

In [1]:
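The help text refers to two kinds of lookup keys, row ids and short ids. Judging only from the `h.preview()` output shown in this card, short ids look like abbreviations of the layer ids, and row ids appear to count seven linear layers per transformer block in a fixed order, followed by `lm_head`. The sketch below encodes that inferred convention; `guess_short_id` and `guess_row_id` are hypothetical helpers, not part of `SparseBlob`, and the real mapping should be confirmed with `h.preview()` or `h.ls_layers()`.

```python
import re

# Assumed layer order within a block, read off the preview listing.
_PROJ_ORDER = ["attn.q", "attn.k", "attn.v", "attn.o",
               "mlp.gate", "mlp.up", "mlp.down"]
_LAYER_RE = re.compile(r"^model\.layers\.(\d+)\.(self_attn|mlp)\.(\w+)_proj$")
_BLOCK_ABBR = {"self_attn": "attn", "mlp": "mlp"}

def guess_short_id(layer_id: str) -> str:
    """Abbreviate a layer id the way the preview seems to (assumption)."""
    m = _LAYER_RE.match(layer_id)
    if m is None:
        return layer_id  # e.g. 'lm_head' is listed unchanged
    block_idx, block, proj = m.groups()
    return f"tx.{block_idx}.{_BLOCK_ABBR[block]}.{proj}"

def guess_row_id(short_id: str) -> int:
    """Row id implied by 7 layers per block (assumption, 'tx.*' ids only)."""
    _, block_idx, suffix = short_id.split(".", 2)
    return int(block_idx) * len(_PROJ_ORDER) + _PROJ_ORDER.index(suffix)

short = guess_short_id("model.layers.1.self_attn.o_proj")
row = guess_row_id(short)
```

Under these assumptions, `short` is `'tx.1.attn.o'` and `row` is `10`, which matches the row that the preview lists for `model.layers.1.self_attn.o_proj`.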

Sample usage:

In [1]: ls blob*

blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.0

blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.1

blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.2

blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.3

blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.4

blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.5

blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.6

blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.7

In [2]: h = SparseBlob("blob.sparsity._Meta-Llama-3.1-8B-wanda-unstructured-0.5")

In [3]: h.preview()

layer_id short_id ... row_med row_max

0 model.layers.0.self_attn.q_proj tx.0.attn.q ... 0.5000 1.0000

1 model.layers.0.self_attn.k_proj tx.0.attn.k ... 0.5000 1.0000

2 model.layers.0.self_attn.v_proj tx.0.attn.v ... 0.5000 1.0000

3 model.layers.0.self_attn.o_proj tx.0.attn.o ... 0.5000 1.0000

4 model.layers.0.mlp.gate_proj tx.0.mlp.gate ... 0.5000 1.0000

5 model.layers.0.mlp.up_proj tx.0.mlp.up ... 0.5000 1.0000

6 model.layers.0.mlp.down_proj tx.0.mlp.down ... 0.5000 1.0000

7 model.layers.1.self_attn.q_proj tx.1.attn.q ... 0.5000 1.0000

8 model.layers.1.self_attn.k_proj tx.1.attn.k ... 0.5000 1.0000

9 model.layers.1.self_attn.v_proj tx.1.attn.v ... 0.5000 1.0000

10 model.layers.1.self_attn.o_proj tx.1.attn.o ... 0.5000 1.0000

11 model.layers.1.mlp.gate_proj tx.1.mlp.gate ... 0.5000 1.0000

.

.

.

222 model.layers.31.mlp.up_proj tx.31.mlp.up ... 0.5000 1.0000

223 model.layers.31.mlp.down_proj tx.31.mlp.down ... 0.5000 1.0000

224 lm_head lm_head ... 0.0000 0.0000

[225 rows x 23 columns]

In [4]: h.get_sparsity_by_row_id(10)

Out[4]:

layer_id model.layers.1.self_attn.o_proj

short_id tx.1.attn.o

layer_type Linear

param_type weight

shape [4096, 4096]

nparam 16777216

nnz 8388608

sparsity 0.5000

tile_shape (128, 16)

n_tile 32 x 256

n_tile_total 8192

tile_avg 0.5000

tile_min 0.2197

tile_med 0.5073

tile_max 0.9678

col_avg 0.5000

col_min 0.0312

col_med 0.4609

col_max 1.0000

row_avg 0.5000

row_min 0.0000

row_med 0.5000

row_max 1.0000

Name: 10, dtype: object

In [5]: h.get_sparsity_of_tile(10, (30, 245))

(30, 245) : tile_id

model.layers.1.self_attn.o_proj : layer_id

(128, 16) : tiled by

0.2861 : tile_sparsity

16 : col_count

0.2861 : col_avg

0.2266 : col_min

0.2734 : col_med

0.3594 : col_max

128 : row_count

0.2861 : row_avg

0.0000 : row_min

0.2500 : row_med

0.6250 : row_max
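The figures in the sample session can be cross-checked with a little arithmetic. The sketch below recomputes the tile grid and non-zero count from the shape, tile shape, and sparsity reported for row 10, and checks that the tile id used in `In [5]` falls inside that grid. The helper `is_valid_tile_id` is hypothetical, and it assumes tile ids are zero-based (row-tile, column-tile) pairs.

```python
# Values copied from the Out[4] listing for model.layers.1.self_attn.o_proj.
shape = (4096, 4096)
tile_shape = (128, 16)   # (rows, cols) per tile
sparsity = 0.5

n_param = shape[0] * shape[1]
n_tile = (shape[0] // tile_shape[0], shape[1] // tile_shape[1])
n_tile_total = n_tile[0] * n_tile[1]
nnz = round(n_param * (1 - sparsity))  # non-zero weights left after pruning

def is_valid_tile_id(tile_id, n_tile):
    """True if a zero-based (row_tile, col_tile) id lies inside the grid."""
    return 0 <= tile_id[0] < n_tile[0] and 0 <= tile_id[1] < n_tile[1]

# Each tile holds 128 * 16 weights; a tile sparsity of 0.2861 therefore
# corresponds to about 586 zeros in tile (30, 245).
tile_size = tile_shape[0] * tile_shape[1]
approx_zeros_in_tile = round(0.2861 * tile_size)

print(n_param, n_tile, n_tile_total, nnz)
print(is_valid_tile_id((30, 245), n_tile), tile_size, approx_zeros_in_tile)
```

The recomputed values agree with `nparam`, `n_tile`, `n_tile_total`, and `nnz` in the listing. Within a tile, each column spans 128 weights and each row spans 16, which is why the row statistics move in coarser steps (multiples of 1/16) than the column statistics.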

Internal notes

see patch wanda branch here. see the raw!
