Segment and Caption Anything

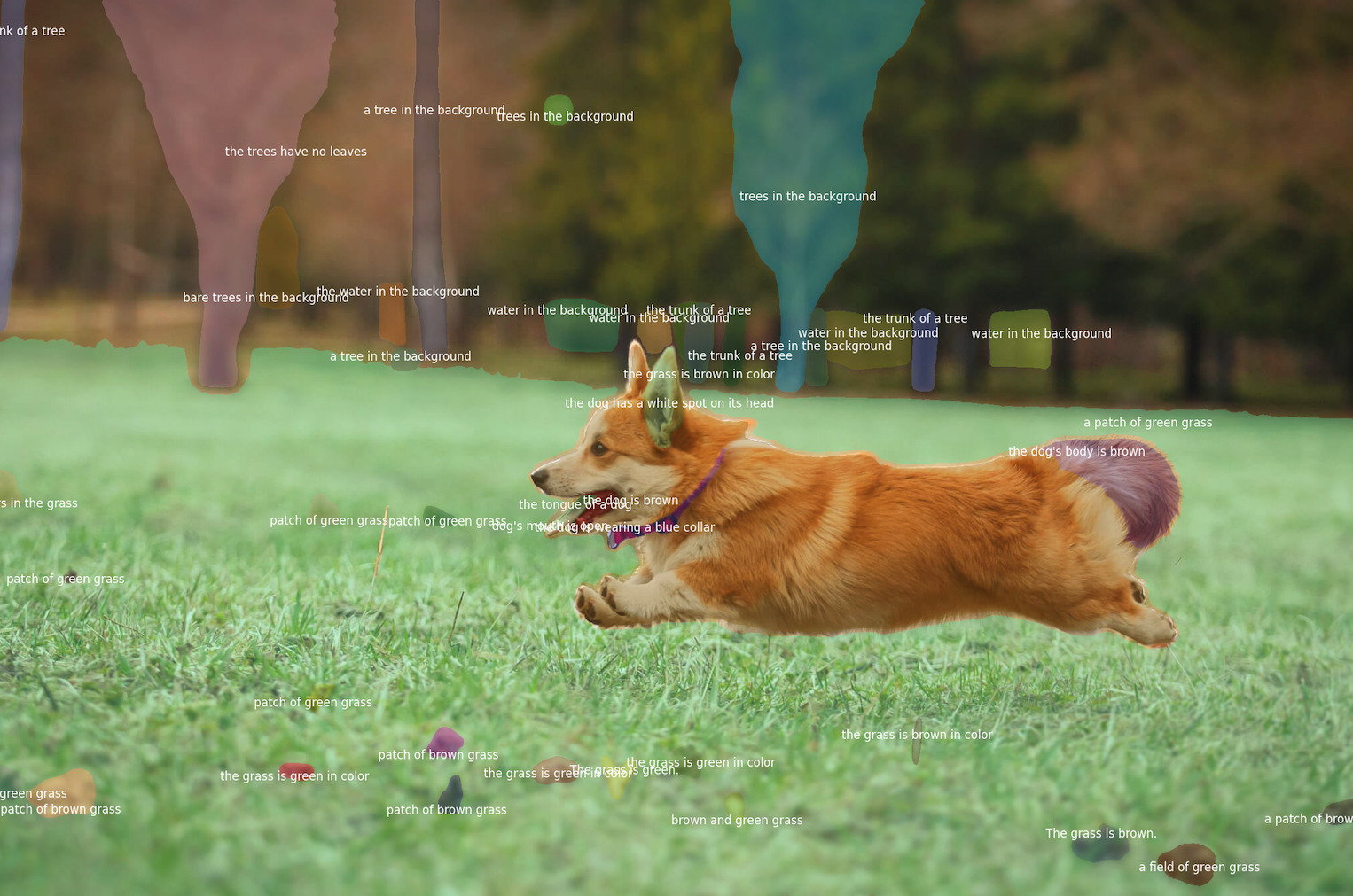

This repository contains the official implementation of "Segment and Caption Anything".

tl;dr

- Despite the absence of semantic labels in its training data, SAM's features carry high-level semantics sufficient for captioning.

- SCA (b) is a lightweight augmentation of SAM (a) with the ability to generate regional captions.

- On top of the SAM architecture, we add a fixed pre-trained language model and an optimizable, lightweight hybrid feature mixer whose training is cheap and scalable.
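The tl;dr describes the architecture only at a high level. As a rough illustration, here is a minimal NumPy sketch of how a query-based mixer could bridge a frozen SAM encoder and a frozen language model. This is not the repository's code: the class `QueryFeatureMixer`, the single-head cross-attention, the number of queries (8), and the language-model width (768) are illustrative assumptions, and random arrays stand in for real SAM and language-model embeddings.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax along `axis`.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class QueryFeatureMixer:
    """Hypothetical lightweight mixer (illustrative, not the SCA implementation):
    learnable query tokens cross-attend to frozen SAM image tokens plus prompt
    tokens, then are projected into the frozen language model's embedding space.
    Only the arrays created here would be trained."""

    def __init__(self, num_queries: int, sam_dim: int, lm_dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.queries = rng.normal(0.0, 0.02, (num_queries, sam_dim))
        self.w_q = rng.normal(0.0, 0.02, (sam_dim, sam_dim))
        self.w_k = rng.normal(0.0, 0.02, (sam_dim, sam_dim))
        self.w_v = rng.normal(0.0, 0.02, (sam_dim, sam_dim))
        self.proj = rng.normal(0.0, 0.02, (sam_dim, lm_dim))

    def __call__(self, image_tokens: np.ndarray, prompt_tokens: np.ndarray) -> np.ndarray:
        # image_tokens: (N, sam_dim) flattened SAM image embedding (frozen encoder output)
        # prompt_tokens: (P, sam_dim) encoded point/box prompt for one region
        context = np.concatenate([prompt_tokens, image_tokens], axis=0)
        q = self.queries @ self.w_q
        k = context @ self.w_k
        v = context @ self.w_v
        attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (num_queries, P + N)
        mixed = self.queries + attn @ v  # residual single-head cross-attention
        return mixed @ self.proj  # (num_queries, lm_dim): soft prefix for the LM

    def num_trainable(self) -> int:
        # Count of parameters that would be optimized; SAM and the LM stay fixed.
        params = (self.queries, self.w_q, self.w_k, self.w_v, self.proj)
        return sum(p.size for p in params)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mixer = QueryFeatureMixer(num_queries=8, sam_dim=256, lm_dim=768)
    image_tokens = rng.normal(size=(64 * 64, 256))  # stand-in for SAM's 256x64x64 image embedding
    prompt_tokens = rng.normal(size=(2, 256))  # stand-in for two box-corner prompt tokens
    prefix = mixer(image_tokens, prompt_tokens)
    text_embeds = rng.normal(size=(5, 768))  # stand-in for the caption's token embeddings
    lm_input = np.concatenate([prefix, text_embeds], axis=0)  # fed to the frozen LM
    print(prefix.shape, lm_input.shape, mixer.num_trainable())
    # (8, 768) (13, 768) 395264
```

The design point shows up in `num_trainable()`: only the query tokens and a few projection matrices are optimized, while SAM and the language model stay fixed, which is what keeps this kind of training cheap.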

News

- [01/31/2024] Updated the paper and the supplementary material. Released code v0.0.2: bumped transformers to 4.36.2; added support for the Mistral series, Phi-2, and Zephyr; added experiments on SAM + Image Captioner + V-CoT, and more.

- [12/05/2023] Released the paper, code v0.0.1, and the project page!

Environment Preparation

Please check docs/ENV.md.

Model Zoo

Please check docs/MODEL_ZOO.md.

Gradio Demo

Please check docs/DEMO.md.

Running Training and Inference

Please check docs/USAGE.md.

Experiments and Evaluation

Please check docs/EVAL.md.

License

The trained weights are licensed under the Apache 2.0 license.

Acknowledgement

We deeply appreciate these wonderful open-source projects: transformers, accelerate, deepspeed, detectron2, hydra, timm, and gradio.

Citation

If you find this repository useful, please consider giving a star ⭐ and citation 🦖:

@misc{xiaoke2023SCA,
  title={{Segment and Caption Anything}},
  author={Huang, Xiaoke and Wang, Jianfeng and Tang, Yansong and Zhang, Zheng and Hu, Han and Lu, Jiwen and Wang, Lijuan and Liu, Zicheng},
  journal={arXiv},
  volume={abs/2312.00869},
  year={2023},
}
