metadata

license: apache-2.0

language:

- fr

library_name: transformers

inference: false

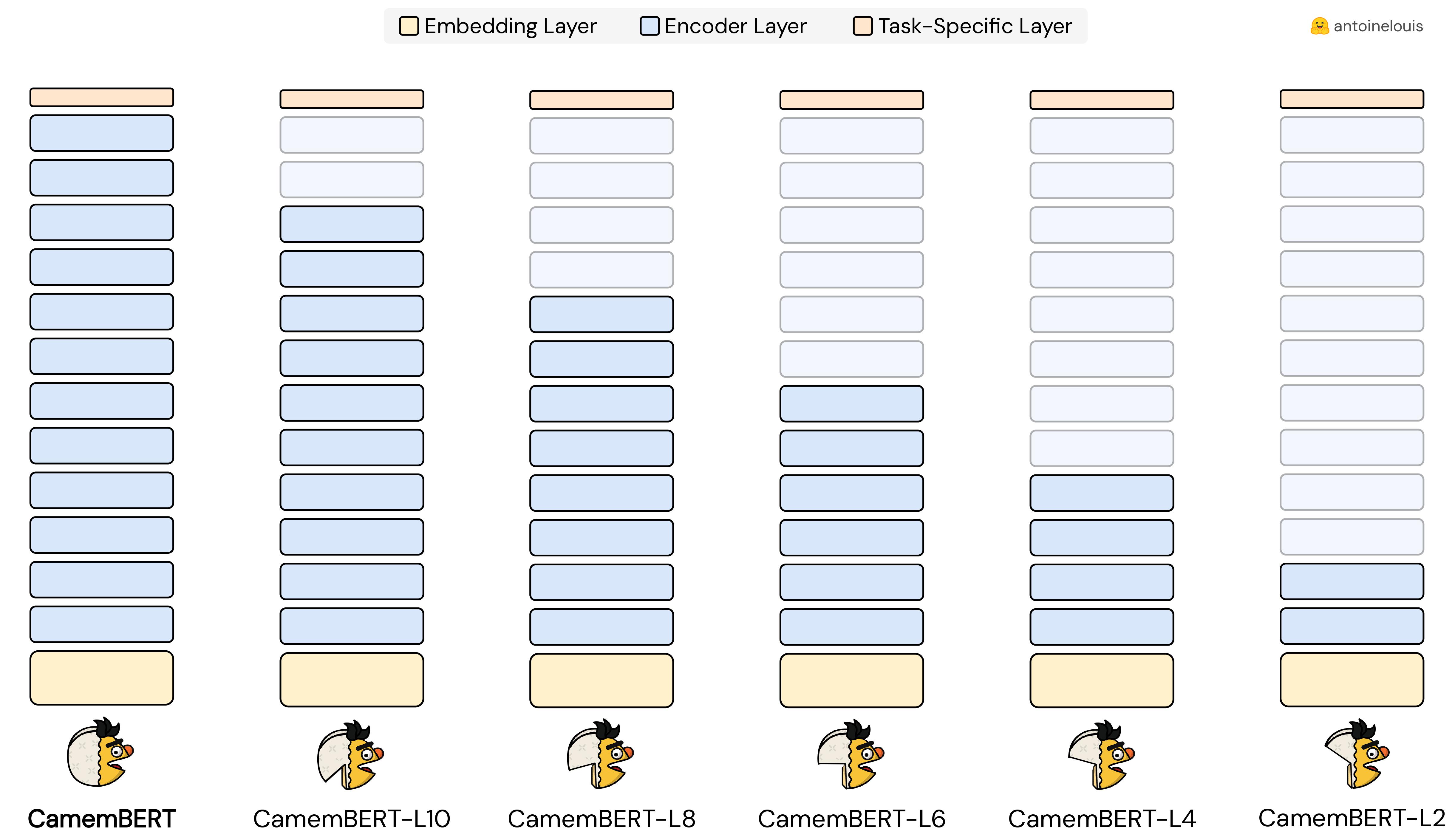

CamemBERT-L4

This model is a pruned version of the pre-trained CamemBERT checkpoint, obtained by dropping the top layers of the original model and keeping only the first 4 of its 12 Transformer layers.
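As a rough illustration of what dropping the top layers can look like with the transformers library, the sketch below keeps the first few encoder layers of a CamemBERT-style model and updates its config to match. It is an assumption about the general procedure, not the author's actual script: `drop_top_layers` is a hypothetical helper, and a tiny randomly initialized config stands in for the real checkpoint so the example runs offline.

```python
import torch
from transformers import CamembertConfig, CamembertModel


def drop_top_layers(model: CamembertModel, num_layers: int) -> CamembertModel:
    """Keep only the first `num_layers` Transformer layers (hypothetical helper)."""
    if not 0 < num_layers <= len(model.encoder.layer):
        raise ValueError("num_layers must be between 1 and the current depth")
    # encoder.layer is an nn.ModuleList; slicing it returns a new ModuleList.
    model.encoder.layer = model.encoder.layer[:num_layers]
    # Keep the config in sync so a saved checkpoint reloads with the same depth.
    model.config.num_hidden_layers = num_layers
    return model


# Tiny random stand-in with 12 layers; a real run would instead load the
# original weights, e.g. CamembertModel.from_pretrained("camembert-base").
config = CamembertConfig(
    vocab_size=100,
    hidden_size=32,
    num_hidden_layers=12,
    num_attention_heads=4,
    intermediate_size=64,
)
full_model = CamembertModel(config)
pruned_model = drop_top_layers(full_model, num_layers=4)

# The pruned model still runs a forward pass with the remaining layers.
with torch.no_grad():
    output = pruned_model(input_ids=torch.tensor([[5, 6, 7, 8]]))
```

The embeddings and the lower layers are left untouched, so the pruned model reuses the original weights for everything it keeps.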

Usage

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained('antoinelouis/camembert-L4')

model = AutoModel.from_pretrained('antoinelouis/camembert-L4')
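Once loaded, the model can be used like any other encoder in transformers. The sketch below shows one possible way to turn its token outputs into sentence vectors by mean pooling over non-padding tokens. Mean pooling is an assumption made for illustration and is not prescribed by this model card; `mean_pool` and `embed_sentences` are hypothetical helper names.

```python
import torch
from transformers import AutoModel, AutoTokenizer


def mean_pool(last_hidden_state: torch.Tensor, attention_mask: torch.Tensor) -> torch.Tensor:
    """Average token vectors per sentence, ignoring padding positions."""
    mask = attention_mask.unsqueeze(-1).to(last_hidden_state.dtype)  # (batch, seq, 1)
    summed = (last_hidden_state * mask).sum(dim=1)
    counts = mask.sum(dim=1).clamp(min=1.0)  # guard against division by zero
    return summed / counts


def embed_sentences(sentences, model_name="antoinelouis/camembert-L4"):
    """Download the checkpoint and return one vector per input sentence."""
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()
    batch = tokenizer(sentences, padding=True, truncation=True, return_tensors="pt")
    with torch.no_grad():
        output = model(**batch)
    return mean_pool(output.last_hidden_state, batch["attention_mask"])
```

Calling `embed_sentences(["Bonjour le monde.", "Le fromage est délicieux."])` downloads the checkpoint on first use and returns a tensor with one row per sentence.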

Comparison

| Model | #Params | Size | Pruning |
|---|---|---|---|
| CamemBERT | 110.6M | 445MB | - |
| CamemBERT-L10 | 96.4M | 368MB | -13% |
| CamemBERT-L8 | 82.3M | 314MB | -26% |
| CamemBERT-L6 | 68.1M | 260MB | -38% |
| CamemBERT-L4 | 53.9M | 206MB | -51% |
| CamemBERT-L2 | 39.7M | 159MB | -64% |
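The Pruning column appears to be the relative change in parameter count with respect to the full CamemBERT model. Under that assumption, the percentages can be re-derived from the #Params column with a few lines of Python (`pruning_percent` is a hypothetical helper):

```python
BASE_PARAMS_M = 110.6  # full CamemBERT, in millions of parameters (from the table)
PRUNED_PARAMS_M = [96.4, 82.3, 68.1, 53.9, 39.7]  # pruned variants, same unit


def pruning_percent(params_m: float, base_m: float = BASE_PARAMS_M) -> int:
    """Relative change in parameter count, rounded to the nearest percent."""
    return round((params_m - base_m) / base_m * 100)


percentages = [pruning_percent(p) for p in PRUNED_PARAMS_M]
print(percentages)
```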

Citation

@online{louis2023,
  author    = {Antoine Louis},
  title     = {CamemBERT-L4: A Pruned Version of CamemBERT},
  publisher = {Hugging Face},
  month     = {October},
  year      = {2023},
  url       = {https://huggingface.co/antoinelouis/camembert-L4},
}