Pandora 7B Chat

Pandora 7B Chat is a Large Language Model (LLM) designed for chat applications.

Pandora is fine-tuned on publicly available datasets, including a tool-calling dataset for agent-based tasks and a Reinforcement Learning from Human Feedback (RLHF) dataset, which is used with Direct Preference Optimization (DPO) training for preference alignment.

The fine-tuning process incorporates Low-Rank Adaptation (LoRA) with the MLX framework, optimized for Apple Silicon.

The model is based on the google/gemma-7b model.

Datasets

Datasets used for fine-tuning stages:

Evaluation

Evaluation on MT-Bench multi-turn benchmark:

Usage

Install package dependencies:

pip install mlx-lm

Generate response:

from mlx_lm import load, generate

# Load the model weights and tokenizer.
model, tokenizer = load('danilopeixoto/pandora-7b-chat')

# The prompt ends with an open <|start|> marker so the model writes the next message.
prompt = '''<|start|>system
You are Pandora, a helpful AI assistant.
<|end|>
<|start|>user
Hello!
<|end|>
<|start|>'''

response = generate(model, tokenizer, prompt)
print(response)

The model supports the following prompt templates:

Question-answering with system messages

<|start|>system
{system_message}
<|end|>
<|start|>user
{user_message}
<|end|>
<|start|>assistant
{assistant_message}
<|end|>
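The template can also be assembled programmatically. The following is a minimal sketch, not part of the model's official tooling: `render_prompt` and its `add_generation_prompt` parameter are hypothetical names, and the code assumes that a single newline separates each marker, role, and message body.

```python
# Sketch of a prompt builder for the chat template.
# Assumption: one newline separates each marker, role, and message body.
START, END = "<|start|>", "<|end|>"


def render_prompt(messages, add_generation_prompt=True):
    """Render (role, content) pairs into a single prompt string."""
    blocks = [f"{START}{role}\n{content}\n{END}" for role, content in messages]
    prompt = "\n".join(blocks)
    if add_generation_prompt:
        # End with an open marker so the model writes the next role and message.
        prompt += f"\n{START}"
    return prompt


messages = [
    ("system", "You are Pandora, a helpful AI assistant."),
    ("user", "Hello!"),
]
prompt = render_prompt(messages)
print(prompt)
```

The resulting string can be passed to `generate` in place of a hand-written prompt. Because the role is an arbitrary string, the same helper should also cover roles such as `system:tools` or `tool`.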

Tool calling

<|start|>system
{system_message}
<|end|>
<|start|>system:tools
{system_tools_message}
<|end|>
<|start|>user
{user_message}
<|end|>
<|start|>assistant:tool_calls
{assistant_tool_calls_message}
<|end|>
<|start|>tool
{tool_message}
<|end|>
<|start|>assistant
{assistant_message}
<|end|>

Note: The variables system_tools_message, assistant_tool_calls_message, and tool_message must contain valid YAML.
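One way to produce valid YAML for these variables is to serialize plain Python data instead of writing YAML by hand. The sketch below assumes PyYAML (the `yaml` package) is available; it is not listed as a dependency in this document, although it is usually installed alongside `transformers`. The tool definition mirrors the weather tool used in this document's tool-calling example.

```python
import yaml  # PyYAML, a third-party package (assumed to be installed)

# Tool definitions as plain Python data.
tools = [
    {
        "description": "Get the current weather based on a given location.",
        "name": "get_current_weather",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "The location name."}
            },
            "required": ["location"],
        },
    }
]

# default_flow_style=False forces block-style YAML; sort_keys=False keeps key order.
system_tools_message = yaml.safe_dump(
    tools, default_flow_style=False, sort_keys=False, allow_unicode=True
).strip()
print(system_tools_message)

# Round-trip check: the serialized text parses back to the same data.
assert yaml.safe_load(system_tools_message) == tools
```

The same approach should work for `tool_message`, for example by dumping a dictionary with `name` and `content` keys.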

An example of a tool-calling prompt:

prompt = '''<|start|>system
You are Pandora, a helpful AI assistant.
<|end|>
<|start|>system:tools
- description: Get the current weather based on a given location.
  name: get_current_weather
  parameters:
    type: object
    properties:
      location:
        type: string
        description: The location name.
    required:
    - location
<|end|>
<|start|>user
What is the weather in Sydney, Australia?
<|end|>
<|start|>assistant:tool_calls
- name: get_current_weather
  arguments:
    location: Sydney, Australia
<|end|>
<|start|>tool
name: get_current_weather
content: 72°F
<|end|>
<|start|>'''
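Since the prompt ends with an open `<|start|>` marker, the generated text is expected to begin with a role line (for example `assistant` or `assistant:tool_calls`) followed by the message body. The sketch below shows one way to split such a response. `parse_response` is a hypothetical helper, the output format is an assumption based on the templates above, and the sample string is written by hand for illustration rather than produced by the model.

```python
END = "<|end|>"


def parse_response(response):
    """Split generated text into (role, content).

    Assumes the text continues the prompt's open <|start|> marker: a role
    line, then the message body, optionally closed by <|end|>.
    """
    # Drop the closing marker and anything generated after it.
    text = response.split(END, 1)[0]
    # The first line is the role; the rest is the message body.
    role, _, content = text.strip().partition("\n")
    return role.strip(), content.strip()


# Hypothetical output, written by hand for illustration.
sample = "assistant\nIt is currently 72°F in Sydney, Australia.\n<|end|>"
role, content = parse_response(sample)
print(role)
print(content)
```

When the role is `assistant:tool_calls`, the content should be a YAML list of calls that can be parsed and dispatched to the matching functions before the `tool` message is appended to the prompt.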

Examples

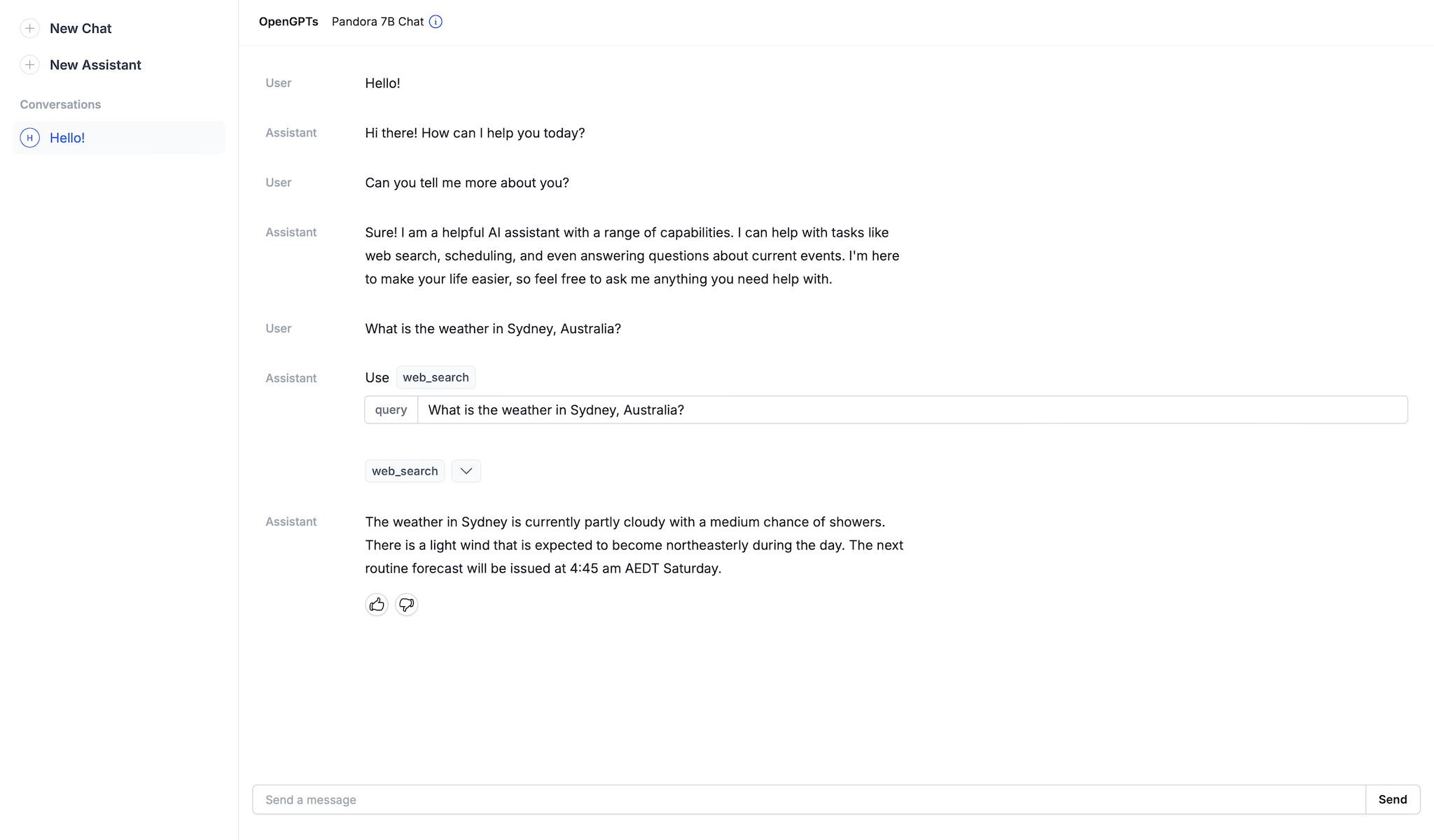

OpenGPTs

Copyright and license

Copyright (c) 2024, Danilo Peixoto Ferreira. All rights reserved.

Project developed under a BSD-3-Clause license.

Gemma is provided under and subject to the Gemma Terms of Use license.
