
---

sidebar_position: 3

---

# Creating Accounts

You might want to create an account from a contract for many reasons. One example:

You want to [progressively onboard](https://www.youtube.com/watch?v=7mO4yN1zjbs&t=2s) users, hiding the whole concept of NEAR from them at the beginning, and automatically create accounts for them (these could be sub-accounts of your main contract, such as `user123.some-cool-game.near`).

Since an account with no balance is almost unusable, you probably want to combine this with the token transfer from [the last page](./token-tx.md). You will also need to give the account an access key. Here's a way to do it:

```js
NearPromise.new("subaccount.example.near")
  .createAccount()
  .addFullAccessKey(near.signerAccountPk())
  .transfer(250_000_000_000_000_000_000_000n); // 2.5e23 yoctoNEAR = 0.25 NEAR
```
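Amounts passed to `transfer` are denominated in yoctoNEAR (1 NEAR = 10^24 yoctoNEAR). Values this large exceed `Number.MAX_SAFE_INTEGER`, so routing them through a `Number` first (for example `BigInt(250_000_000_000_000_000_000_000)`) can silently round them. A minimal sketch of an exact, string-based converter follows; `nearToYocto` is a hypothetical helper, not part of `near-sdk-js`:

```ts
// 1 NEAR = 10^24 yoctoNEAR.
const YOCTO_PER_NEAR = 10n ** 24n;

// Hypothetical helper: converts a decimal NEAR amount such as "0.25" into
// yoctoNEAR using only bigint arithmetic, so no precision is lost.
function nearToYocto(amount: string): bigint {
  if (!/^\d+(\.\d+)?$/.test(amount)) {
    throw new Error(`not a decimal NEAR amount: ${amount}`);
  }
  const [whole, frac = ""] = amount.split(".");
  if (frac.length > 24) {
    throw new Error("NEAR has at most 24 decimal places");
  }
  // Right-pad the fractional part to exactly 24 digits of yoctoNEAR.
  return BigInt(whole) * YOCTO_PER_NEAR + BigInt(frac.padEnd(24, "0"));
}

console.log(nearToYocto("0.25").toString()); // 250000000000000000000000
```

Working on the decimal string keeps the conversion exact for any amount with up to 24 decimal places.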

In the context of a full contract:

```js
import { NearBindgen, NearPromise, call, near } from "near-sdk-js";

@NearBindgen({})
export class Contract {
  @call({ privateFunction: true })
  createSubaccount({ prefix }) {
    // Build the new account ID as a sub-account of this contract, e.g. `user123.some-cool-game.near`
    const subaccountId = `${prefix}.${near.currentAccountId()}`;

    return NearPromise.new(subaccountId)
      .createAccount()
      .addFullAccessKey(near.signerAccountPk())
      .transfer(250_000_000_000_000_000_000_000n); // 2.5e23 yoctoNEAR = 0.25 NEAR
  }
}
```

Things to note:

- `addFullAccessKey` – This example passes in the public key of the human or app that signed the original transaction that resulted in this function call ([`signerAccountPk`](https://github.com/near/near-sdk-js/blob/d1ca261feac5c38768ab30e0b24cf7263d80aaf2/packages/near-sdk-js/src/api.ts#L187-L194)). You could also use [`addAccessKey`](https://github.com/near/near-sdk-js/blob/d1ca261feac5c38768ab30e0b24cf7263d80aaf2/packages/near-sdk-js/src/promise.ts#L526-L548) to add a Function Call access key that only permits the account to make calls to a predefined set of contract functions.

- `{ privateFunction: true }` – if you have a function that spends your contract's funds, you probably want to protect it in some way. This example does so with a perhaps-too-simple [`{ privateFunction: true }`](../contract-interface/private-methods.md) decorator parameter.


Thresholded Proof Of Stake

DEVELOPERS

September 11, 2018

A central component of any blockchain is its consensus. At its core, a blockchain is a distributed storage system where every member of the network must agree on what information is stored there. Consensus is the protocol by which this agreement is achieved.

The consensus algorithm usually involves:

Election. How a set of nodes (or single node) is elected at any particular moment to make decisions.

Agreement. What is the protocol by which the elected set of nodes agrees on the current state of the blockchain and newly added transactions.

In this article, I want to discuss the first part (Election) and leave the exact mechanics of agreement to a subsequent article.

Proof of Work:

Examples: Bitcoin, Ethereum

The genius of Nakamoto presented the world with Proof of Work (PoW) consensus. This method allows participants in a large distributed system to identify who the leader is at each point in time. To become the leader, everybody races to solve a computationally expensive puzzle, and whoever gets there first is rewarded. This leader then publishes what they believe is the new state of the network, and anybody else can easily verify that the leader did this work correctly.

There are three main disadvantages to this method:

Pooling. Because of the randomness involved in finding the answer to the cryptographic puzzle that solves a given block, it’s beneficial for a large group of independent workers to pool resources and find the answer more predictably. With pooling, if anybody in the pool finds the answer, every member of the pool gets a fraction of the reward. This increases the likelihood of a payout at any given moment, even if each reward is much smaller. Unfortunately, pooling leads to centralization. For example, it’s known that 53%+ of the Bitcoin network is controlled by just 3 pools.

Forks. Forks are a natural occurrence in Nakamoto consensus, as multiple entities can find the answer within the same few seconds. The fork that is ultimately chosen is the one that more of the other network participants end up adopting. This leads to a longer wait until a transaction can be considered “final”, as one can never be sure that the current last block is the one that most of the network is building on.

Wasted energy. A huge number of customized machines operate around the world solely performing computations to identify who should be the next block leader, consuming more electricity than all of Denmark. Iceland, for example, spends a significant percentage of all the electricity it produces on mining Bitcoin.

Proof of Stake:

Examples: EOS, Steemit, Bitshares

The most widely adopted alternative to Proof of Work is Proof of Stake (PoS). As an idea, it means that every node in the system participates in decisions proportionally to the amount of money they have.

One of the main ways of using PoS in practice is called Delegated Proof of Stake (DPoS). In this system, the whole network votes for “delegates” — participants who maintain the network and make all of the decisions on behalf of the other members. Depending on the implementation, delegates must either personally stake a large sum of money or use it to campaign for their election. Both of these mean that only high net worth individuals or consortia can be delegates.

In theory, this is very similar to how equity in companies works. There, small equity holders participate in elections and elect a small number of decision makers (the board of directors), who are typically large equity holders. These large equity holders then make all of the major decisions on behalf of all shareholders.

Depending on the specifics of the consensus itself, a Proof of Stake system can address the forking and wasted energy that are problematic with Proof of Work. It still has the downside of centralization, because either a small number of individual nodes (EOS) or centrally controlled pools end up participating in network maintenance. Also, in specific implementations of DPoS, the fact that all delegates know each other means that slashing (penalizing a delegate for wrongdoing by taking their stake) may not happen, because a majority of delegates must vote for it.

Ultimately, these factors mean that a small club of the rich gets richer, perpetuating some of the systemic problems that blockchains were created to address.

Thresholded Proof Of Stake:

Examples: NEAR

NEAR uses an election mechanism called Thresholded Proof of Stake (TPoS). The general idea is that we want a deterministic way to have a large number of participants maintaining the network, thereby increasing decentralization and security and establishing fair reward distribution. The closest alternative to our method is an auction, where people bid for a fixed number of items and at the end the top N bids win, each receiving a number of items proportional to the size of their bid.

In our case, we want a large pool of participants (we call them “witnesses”) to be elected to make decisions during a specific interval of time (we default to one day). Each interval is split into a large number of block slots (we default to 1440 slots, one every minute) with a reasonably large number of witnesses per block (we default to 1024). With these defaults, we end up needing to fill 1440 × 1024 = 1,474,560 individual witness seats.

[Figure: Example of selecting a set of witnesses via the TPoS process]

The price of a witness seat is determined by the stakes of all participants that indicated they want to sign blocks. For example, if 1,474,560 participants stake 10 tokens each, then each individual seat is worth 10 tokens and each participant gets one seat. Alternatively, if 10 participants stake 1,474,560 tokens each, an individual seat still costs 10 tokens and each participant is awarded 147,456 seats. Formally, if X is the seat price and {W_i} are the stakes of the individual participants:

$$X = \max\left\{ x \;:\; \sum_i \left\lfloor \frac{W_i}{x} \right\rfloor \ge 1{,}474{,}560 \right\}$$
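The seat-price rule can be sketched in TypeScript. This is a minimal illustration, not NEAR's implementation: it assumes integer prices and takes X to be the largest price at which the stakes still buy at least every seat. Because the number of seats bought only shrinks as the price grows, a binary search finds that price:

```ts
// Number of seats the given stakes buy at a given seat price.
function seatsAt(stakes: number[], price: number): number {
  return stakes.reduce((total, w) => total + Math.floor(w / price), 0);
}

// Largest integer seat price X such that sum_i floor(W_i / X) >= numSeats.
function seatPrice(stakes: number[], numSeats: number): number {
  if (numSeats < 1 || seatsAt(stakes, 1) < numSeats) {
    throw new Error("not enough stake to fill every seat");
  }
  let lo = 1; // invariant: seatsAt(stakes, lo) >= numSeats
  let hi = stakes.reduce((m, w) => Math.max(m, w), 0); // above the largest stake, zero seats are bought
  while (lo < hi) {
    const mid = Math.floor((lo + hi + 1) / 2); // round up so the loop always progresses
    if (seatsAt(stakes, mid) >= numSeats) lo = mid;
    else hi = mid - 1;
  }
  return lo;
}

const SEATS = 1440 * 1024; // block slots per day x witnesses per block = 1,474,560
console.log(seatPrice(new Array(SEATS).fill(10), SEATS)); // 10
console.log(seatPrice(new Array(10).fill(1_474_560), SEATS)); // 10
```

Both calls reproduce the two worked examples from the text: many small stakers and a few large ones arrive at the same 10-token seat price.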

To participate in network maintenance (become a witness), any account can submit a special transaction that indicates how much money it wants to stake. As soon as that transaction is accepted, the specified amount is locked for at least 3 days. At the end of the day, all of the new witness proposals are collected together with all of the participants who signed blocks during the day. From there, we identify the cost of an individual seat (with the formula above), allocate seats to everybody who has staked at least that amount, and perform a pseudorandom permutation.
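The allocation and permutation step might look like the sketch below. It is an illustration under stated assumptions: `mulberry32` is a small general-purpose PRNG used here as a stand-in (the post does not say where the randomness comes from, and a real protocol needs a seed all nodes agree on and no one can bias), the `seed` value is hypothetical, and truncating surplus seats after shuffling is an assumed policy for when rounding leaves more seats than slots:

```ts
type Proposal = { account: string; stake: number };

// mulberry32: a tiny deterministic PRNG (a stand-in, not NEAR's randomness source).
function mulberry32(seed: number): () => number {
  let state = seed >>> 0;
  return () => {
    state = (state + 0x6d2b79f5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // float in [0, 1)
  };
}

// Fisher-Yates shuffle driven by the supplied random source.
function shuffle<T>(items: T[], rand: () => number): T[] {
  const out = items.slice();
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(rand() * (i + 1));
    [out[i], out[j]] = [out[j], out[i]];
  }
  return out;
}

// Gives each proposer floor(stake / price) seats, then permutes the seat list.
// Proposers staking less than the seat price receive zero seats.
function allocateSeats(proposals: Proposal[], price: number, numSeats: number, seed: number): string[] {
  const seats: string[] = [];
  for (const { account, stake } of proposals) {
    for (let k = 0; k < Math.floor(stake / price); k++) seats.push(account);
  }
  // Assumption: if rounding leaves surplus seats, the permuted list is truncated.
  return shuffle(seats, mulberry32(seed)).slice(0, numSeats);
}

const schedule = allocateSeats(
  [{ account: "alice", stake: 30 }, { account: "bob", stake: 20 }, { account: "carol", stake: 5 }],
  10, 5, 42,
);
console.log(schedule); // some ordering of 3x "alice" and 2x "bob"; carol is below the seat price
```

Because the shuffle is seeded, every node that starts from the same seed derives the same seat order.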

NEAR Protocol uses inflationary block rewards and transaction fees to incentivize witnesses to participate in signing blocks. Specifically, we propose an inflation rate defined as a percentage of the total number of tokens. This encourages HODLers to run a participating node to maintain their share of the network's value.

When a participant signs up to be a witness, a portion of their money is locked and cannot be spent. The stake of each witness is unlocked a day after the witness stops participating in block signing. If during this time the witness signed two competing blocks, their stake is forfeited to the participant who noticed and proved the “double sign”.
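Detecting a double sign amounts to finding one witness that signed two different blocks for the same slot. A minimal sketch, assuming "competing blocks" means different block hashes at the same height; the `SignedHeader` record is hypothetical and signature verification is omitted:

```ts
// Hypothetical record of a witness signature; real signature checks are omitted.
type SignedHeader = { witness: string; height: number; blockHash: string };

// Returns pairs of headers where one witness signed two different blocks at the
// same height, i.e. evidence of a "double sign".
function findDoubleSigns(headers: SignedHeader[]): Array<[SignedHeader, SignedHeader]> {
  const firstSeen = new Map<string, SignedHeader>();
  const evidence: Array<[SignedHeader, SignedHeader]> = [];
  for (const h of headers) {
    const key = `${h.witness}@${h.height}`;
    const prev = firstSeen.get(key);
    if (prev === undefined) {
      firstSeen.set(key, h);
    } else if (prev.blockHash !== h.blockHash) {
      evidence.push([prev, h]);
    }
  }
  return evidence;
}

const proofs = findDoubleSigns([
  { witness: "w1", height: 100, blockHash: "A" },
  { witness: "w1", height: 100, blockHash: "B" }, // competing block at the same height
  { witness: "w2", height: 100, blockHash: "A" },
  { witness: "w2", height: 100, blockHash: "A" }, // duplicate gossip, not a double sign
]);
console.log(proofs.length); // 1
```

Each returned pair is the kind of evidence a participant would submit to claim the offending witness's stake.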

The main advantages of this mechanism for electing witnesses are:

No pooling necessary. There is no reason to pool stake or computational resources, because the reward is directly proportional to the stake. Put another way, two accounts holding 10 tokens each will give the same return as 20 tokens in a single account. The only exception is if you have fewer tokens than the threshold, which is counteracted by the very large number of witnesses elected.

Less Forking. Forks are possible only when there is a serious network split (assuming fewer than ⅓ of participants are adversarial). In normal operation, a user can observe the number of signatures on a block and, if there are more than ⅔+1, the block is irreversible. In the case of a network split, participants can clearly observe the split by comparing how many signatures there are with how many there should be. For example, if the network forks, then the majority of network participants will observe blocks with less than ⅔ (but likely more than ½) of the necessary signatures and can choose to wait for a sufficient number of blocks before concluding that a block in the past is unlikely to be reversed. The minority of network participants will see blocks with less than ½ of the signatures, will have clear evidence that a network split might be in effect, and will know that their blocks are likely to be overwritten and should not be used for finality.
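The signature-counting rule can be expressed as a small classifier. This is a sketch under assumptions: "final" is taken as strictly more than ⅔ of the expected signatures, and a block with exactly ½ is treated as possibly on the minority side. Integer comparisons avoid floating-point error at the boundaries:

```ts
type BlockView = "final" | "wait" | "possible-minority";

// Classifies a block from a single node's point of view, given how many witness
// signatures it carries out of how many were expected.
function classifyBlock(signatures: number, expected: number): BlockView {
  if (3 * signatures > 2 * expected) return "final"; // more than 2/3 signed
  if (2 * signatures > expected) return "wait"; // likely the majority side of a split
  return "possible-minority"; // at most half: do not use for finality
}

console.log(classifyBlock(700, 1024)); // final
console.log(classifyBlock(600, 1024)); // wait
console.log(classifyBlock(400, 1024)); // possible-minority
```

With the default of 1024 witnesses per block, 683 signatures is the smallest count this sketch treats as final.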

Security. Rewriting a single block or performing a long-range attack is extremely hard, since one must obtain the private keys of witnesses who held ⅔ of the total stake over the past two days. This assumes that each witness participated for only one day, which will rarely happen given the economic incentive to participate continuously while holding tokens in the account.

A few disadvantages of this approach:

Witnesses are known well in advance so an attacker could try to DDoS them. In our case, this is very difficult because a large portion of witnesses will be on mobile phones behind NATs and not accepting incoming connections. Attacking specific relays will just lead to affected mobile phones reconnecting to their peers.

In PoS, total reward is divided between current pool of witnesses, which makes it slightly unfavorable for them to include new witnesses. In our approach we add extra incentives for inclusion of new witness transactions into the block.

This system is a framework that can incorporate a number of other improvements as well. We are particularly excited about Dfinity’s and Algorand’s research into using Verifiable Random Functions to select a random subset of witnesses to produce the next block, which helps protect against DDoS and reduces the need to keep track of who is a witness at a particular time.

In future posts of this series, we will discuss the Agreement part of our consensus algorithm, sharding of state and transactions and design of distributed smart contracts.

To follow our progress you can use:

Twitter — https://twitter.com/nearprotocol

Discord — https://near.chat

https://upscri.be/633436/

Thanks to Ivan Bogatyy for feedback on the draft. Thanks to Alexander Skidanov, Maksym Zavershynskyi, Erik Trautman, Aliaksandr Hudzilin, and Bowen Wang for helping put together this post.

# Metadata

## [NEP-177](https://github.com/near/NEPs/blob/master/neps/nep-0177.md)

Version `2.1.0`

## Summary

An interface for a non-fungible token's metadata. The goal is to keep the metadata future-proof as well as lightweight. This will be important to dApps needing additional information about an NFT's properties, and broadly compatible with other token standards such that the [NEAR Rainbow Bridge](https://near.org/blog/eth-near-rainbow-bridge/) can move tokens between chains.

## Motivation

The primary value of non-fungible tokens comes from their metadata. While the [core standard](Core.md) provides the minimum interface that can be considered a non-fungible token, most artists, developers, and dApps will want to associate more data with each NFT, and will want a predictable way to interact with any NFT's metadata.

NEAR's unique [storage staking](https://docs.near.org/concepts/storage/storage-staking) approach makes it feasible to store more data on-chain than other blockchains. This standard leverages this strength for common metadata attributes, and provides a standard way to link to additional off-chain data to support rapid community experimentation.

This standard also provides a `spec` version. This makes it easy for consumers of NFTs, such as marketplaces, to know if they support all the features of a given token.

Prior art:

- NEAR's [Fungible Token Metadata Standard](../FungibleToken/Metadata.md)

- Discussion about NEAR's complete NFT standard: #171

## Interface

Metadata applies at both the contract level (`NFTContractMetadata`) and the token level (`TokenMetadata`). The relevant metadata for each:

```ts
type NFTContractMetadata = {
  spec: string, // required, essentially a version like "nft-2.0.0", replacing "2.0.0" with the implemented version of NEP-177
  name: string, // required, ex. "Mochi Rising — Digital Edition" or "Metaverse 3"
  symbol: string, // required, ex. "MOCHI"
  icon: string|null, // Data URL
  base_uri: string|null, // Centralized gateway known to have reliable access to decentralized storage assets referenced by `reference` or `media` URLs
  reference: string|null, // URL to a JSON file with more info
  reference_hash: string|null, // Base64-encoded sha256 hash of JSON from reference field. Required if `reference` is included.
}

type TokenMetadata = {
  title: string|null, // ex. "Arch Nemesis: Mail Carrier" or "Parcel #5055"
  description: string|null, // free-form description
  media: string|null, // URL to associated media, preferably to decentralized, content-addressed storage
  media_hash: string|null, // Base64-encoded sha256 hash of content referenced by the `media` field. Required if `media` is included.
  copies: number|null, // number of copies of this set of metadata in existence when token was minted.
  issued_at: number|null, // When token was issued or minted, Unix epoch in milliseconds
  expires_at: number|null, // When token expires, Unix epoch in milliseconds
  starts_at: number|null, // When token starts being valid, Unix epoch in milliseconds
  updated_at: number|null, // When token was last updated, Unix epoch in milliseconds
  extra: string|null, // anything extra the NFT wants to store on-chain. Can be stringified JSON.
  reference: string|null, // URL to an off-chain JSON file with more info.
  reference_hash: string|null // Base64-encoded sha256 hash of JSON from reference field. Required if `reference` is included.
}
```

A new function MUST be supported on the NFT contract:

```ts
function nft_metadata(): NFTContractMetadata {}
```

A new attribute MUST be added to each `Token` struct:

```diff
 type Token = {
   token_id: string,
   owner_id: string,
+  metadata: TokenMetadata,
 }
```

### An implementing contract MUST include the following fields on-chain

- `spec`: a string that MUST be formatted `nft-n.n.n` where "n.n.n" is replaced with the implemented version of this Metadata spec: for instance, "nft-2.0.0" to indicate NEP-177 version 2.0.0. This will allow consumers of the Non-Fungible Token to know which set of metadata features the contract supports.

- `name`: the human-readable name of the contract.

- `symbol`: the abbreviated symbol of the contract, like MOCHI or MV3

- `base_uri`: Centralized gateway known to have reliable access to decentralized storage assets referenced by `reference` or `media` URLs. Can be used by other frontends for initial retrieval of assets, even if these frontends then replicate the data to their own decentralized nodes, which they are encouraged to do.
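A consumer or test suite might check the required contract-level fields before trusting a contract's metadata. The sketch below is illustrative only: `checkContractMetadata` is a hypothetical helper (NEP-177 does not define one), and it checks just the `nft-n.n.n` spec format, non-empty `name` and `symbol`, and the rule that `reference_hash` accompanies `reference`:

```ts
// Mirrors the NFTContractMetadata shape from the interface above.
type NFTContractMetadata = {
  spec: string;
  name: string;
  symbol: string;
  icon: string | null;
  base_uri: string | null;
  reference: string | null;
  reference_hash: string | null;
};

// "nft-" followed by a three-part numeric version, e.g. "nft-2.1.0".
const SPEC_PATTERN = /^nft-\d+\.\d+\.\d+$/;

// Hypothetical helper: returns a list of problems (empty if none were found).
function checkContractMetadata(m: NFTContractMetadata): string[] {
  const problems: string[] = [];
  if (!SPEC_PATTERN.test(m.spec)) problems.push(`spec "${m.spec}" is not formatted nft-n.n.n`);
  if (m.name.trim() === "") problems.push("name is required");
  if (m.symbol.trim() === "") problems.push("symbol is required");
  if (m.reference !== null && m.reference_hash === null) {
    problems.push("reference_hash is required when reference is set");
  }
  return problems;
}

const ok = checkContractMetadata({
  spec: "nft-2.1.0", name: "Metaverse 3", symbol: "MV3",
  icon: null, base_uri: null, reference: null, reference_hash: null,
});
console.log(ok); // []
```

Returning a list rather than throwing lets a frontend report every problem with a contract at once.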

### An implementing contract MAY include the following fields on-chain

For `NFTContractMetadata`:

- `icon`: a small image associated with this contract. Encouraged to be a [data URL](https://developer.mozilla.org/en-US/docs/Web/HTTP/Basics_of_HTTP/Data_URIs), to help consumers display it quickly while protecting user data. Recommendation: use [optimized SVG](https://codepen.io/tigt/post/optimizing-svgs-in-data-uris), which can result in high-resolution images with only 100s of bytes of [storage cost](https://docs.near.org/concepts/storage/storage-staking). (Note that these storage costs are incurred by the contract deployer, but that querying these icons is a very cheap & cacheable read operation for all consumers of the contract and the RPC nodes that serve the data.) Recommendation: create icons that will work well with both light-mode and dark-mode websites by either using middle-tone color schemes, or by [embedding `media` queries in the SVG](https://timkadlec.com/2013/04/media-queries-within-svg/).

- `reference`: a link to a valid JSON file containing various keys offering supplementary details on the token. Example: `/ipfs/QmdmQXB2mzChmMeKY47C43LxUdg1NDJ5MWcKMKxDu7RgQm`, `https://example.com/token.json`, etc. If the information given in this document conflicts with the on-chain attributes, the values in `reference` shall be considered the source of truth.

- `reference_hash`: the base64-encoded sha256 hash of the JSON file contained in the `reference` field. This is to guard against off-chain tampering.

For `TokenMetadata`:

- `title`: The name of this specific token.

- `description`: A longer description of the token.

- `media`: URL to associated media. Preferably to decentralized, content-addressed storage.

- `media_hash`: the base64-encoded sha256 hash of content referenced by the `media` field. This is to guard against off-chain tampering.

- `copies`: The number of tokens with this set of metadata or `media` known to exist at time of minting.

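- `issued_at`: Unix epoch in milliseconds when token was issued or minted (this needs a 64-bit integer: an unsigned 32-bit integer of milliseconds overflows after about 49.7 days, and even one of seconds would only suffice until the year 2106)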

- `expires_at`: Unix epoch in milliseconds when token expires

- `starts_at`: Unix epoch in milliseconds when token starts being valid

- `updated_at`: Unix epoch in milliseconds when token was last updated

- `extra`: anything extra the NFT wants to store on-chain. Can be stringified JSON.

- `reference`: URL to an off-chain JSON file with more info.

- `reference_hash`: Base64-encoded sha256 hash of JSON from reference field. Required if `reference` is included.
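The `media_hash` and `reference_hash` fields are Base64-encoded SHA-256 digests. A minimal sketch of how a minter or consumer might compute and check them with Node.js's built-in `crypto` module; the helper names are hypothetical, and the hash should be taken over the exact bytes served (re-serializing JSON before hashing can change the bytes and the digest):

```ts
import { createHash } from "node:crypto";

// Base64-encoded SHA-256 digest, the encoding used by `media_hash` and `reference_hash`.
function base64Sha256(content: string | Uint8Array): string {
  return createHash("sha256").update(content).digest("base64");
}

// Compares downloaded bytes against the on-chain hash to detect off-chain tampering.
function matchesOnChainHash(content: Uint8Array, expectedHash: string): boolean {
  return base64Sha256(content) === expectedHash;
}

console.log(base64Sha256("")); // 47DEQpj8HBSa+/TImW+5JCeuQeRkm5NMpJWZG3hSuFU=
```

A consumer would fetch the `media` or `reference` URL, pass the raw response bytes to `matchesOnChainHash`, and refuse to display content whose digest does not match.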

### No incurred cost for core NFT behavior

Contracts should be implemented in a way to avoid extra gas fees for serialization & deserialization of metadata for calls to `nft_*` methods other than `nft_metadata` or `nft_token`. See `near-contract-standards` [implementation using `LazyOption`](https://github.com/near/near-sdk-rs/blob/c2771af7fdfe01a4e8414046752ee16fb0d29d39/examples/fungible-token/ft/src/lib.rs#L71) as a reference example.

## Drawbacks

* When this NFT contract is created and initialized, the storage use per token will be higher than for an NFT Core version. Frontends can account for this by adding extra deposit when minting. This could be done by padding with a reasonable amount, or by the frontend using the [RPC call detailed here](https://docs.near.org/docs/develop/front-end/rpc#genesis-config) that gets the genesis configuration to determine precisely how much deposit is needed.

* The convention that `icon` be a data URL, rather than a link to an HTTP endpoint that could contain privacy-violating code, cannot be enforced on deploy or update of contract metadata, and must be enforced on the consumer/app side when displaying token data.

* If the on-chain icon uses a data URL or is not set, but the document given by `reference` contains a privacy-violating `icon` URL, consumers & apps of this data should not naïvely display the `reference` version, but should prefer the safe version. This is technically a violation of the "`reference` setting wins" policy described above.
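For the "padding with a reasonable amount" option, a frontend could estimate the deposit from the size of the metadata. This sketch rests on two loudly stated assumptions: a storage price of 10^19 yoctoNEAR per byte (1 NEAR per 100 kB), which should be read from the genesis `storage_amount_per_byte` setting rather than hard-coded, and a hypothetical 512-byte default padding. JSON length only approximates what the contract actually stores:

```ts
// Assumption: 10^19 yoctoNEAR per byte (1 NEAR per 100 kB). Read the real value
// from `storage_amount_per_byte` in the genesis/runtime config rather than hard-coding it.
const STORAGE_PRICE_PER_BYTE = 10n ** 19n;

// Rough estimate only: JSON length approximates, but does not equal, the bytes a
// contract actually stores (its serialization format and storage keys add overhead).
// `paddingBytes` is a hypothetical safety margin, not a value from the standard.
function estimateMintDeposit(metadata: object, paddingBytes = 512): bigint {
  const bytes = new TextEncoder().encode(JSON.stringify(metadata)).length;
  return BigInt(bytes + paddingBytes) * STORAGE_PRICE_PER_BYTE;
}

const deposit = estimateMintDeposit({ title: "Parcel #5055", media: null, copies: 1 });
console.log(deposit.toString());
```

Any unused deposit is typically refunded by well-behaved contracts, which is why erring on the high side is the safer choice for a frontend.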

## Future possibilities

- Detailed conventions that may be enforced for versions.

- A fleshed out schema for what the `reference` object should contain.

## Errata

* **2022-02-03**: updated `Token` struct field names. `id` was changed to `token_id`. This is to be consistent with current implementations of the standard and the Rust SDK docs.

The first version (`1.0.0`) had confusing language regarding the fields:

- `issued_at`

- `expires_at`

- `starts_at`

- `updated_at`

It gave those fields the type `string|null` but it was unclear whether it should be a Unix epoch in milliseconds or [ISO 8601](https://www.iso.org/iso-8601-date-and-time-format.html). Upon having to revisit this, it was determined to be the most efficient to use epoch milliseconds as it would reduce the computation on the smart contract and can be derived trivially from the block timestamp.
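Deriving epoch milliseconds from the block timestamp is a single integer division, since NEAR block timestamps are nanoseconds since the Unix epoch. A minimal sketch (the function name is hypothetical), which also shows why these fields need more than 32 bits:

```ts
// NEAR block timestamps are nanoseconds since the Unix epoch (a u64 value).
const NANOS_PER_MILLI = 1_000_000n;

// Converts a block timestamp in nanoseconds to the epoch milliseconds used by
// `issued_at`, `expires_at`, `starts_at` and `updated_at`.
function blockTimestampToEpochMs(timestampNs: bigint): number {
  return Number(timestampNs / NANOS_PER_MILLI); // bigint division drops sub-millisecond precision
}

// Epoch milliseconds outgrow 32 bits quickly: 2^32 - 1 ms is only about 49.7 days after 1970.
const U32_MAX = 2 ** 32 - 1;
console.log(new Date(U32_MAX).toISOString()); // 1970-02-19T17:02:47.295Z
```

Millisecond values for present-day dates stay well below `Number.MAX_SAFE_INTEGER`, so converting the quotient back to a `number` is safe.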


Aurora Partners With ConsenSys, Bringing MetaMask, Infura and More Ethereum Tools to NEAR

COMMUNITY

December 2, 2021

Aurora, the Ethereum scaling solution that allows projects built on Ethereum to utilize the cutting-edge technology of the NEAR Protocol, is partnering with ConsenSys, the enterprise blockchain company, to provide access to its suite of developer tools.

The partnership aims to empower both the NEAR and Ethereum ecosystems by improving developer facilities – through the availability of the product suite – with the goal of increasing cross-chain interoperability.

The product suite features projects including MetaMask, Infura, ConsenSys Quorum, Truffle, Codefi, and Diligence. MetaMask is the primary way a global user base of over 21 million monthly active users interact with applications on Web3. Over 350,000 developers use Infura to access Ethereum, IPFS and Layer 2 networks. In addition, 4.7 million developers create and deploy smart contracts using Truffle and ConsenSys Diligence has secured more than $25 billion in smart contracts with its hands-on dapp audits and testing tools.

“We are thrilled to join forces with ConsenSys on our shared mission of empowering the Ethereum ecosystem and extending its economy,” said Alex Shevchenko, the CEO of Aurora Labs.

The partnership means ConsenSys will now have official participation in the ongoing development of the AuroraDAO.

“We are excited to be partnering with the talented Aurora and NEAR Protocol teams. Developer interest in EVM-compatible scaling solutions continues to grow at the same pace as the rapid expansion of the Web3 ecosystem,” says E.G. Galano, Co-Founder and Head of Engineering at Infura.

“We believe developers will benefit from the addition of Aurora to the Infura product suite by enabling them to utilize the NEAR network with the EVM tooling they are already familiar with.”

Infura is directly involved in the AuroraDAO, which hands over key decision making to its community as a part of its vision to create a scaling solution that is as decentralized as possible.

About Aurora

Aurora is an EVM built on the NEAR Protocol, delivering a turn-key solution for developers to operate their apps on an Ethereum-compatible, high-throughput, scalable and future-safe platform, with low transaction costs for their users.

The State of Self Sovereign Identities

CASE STUDIES

September 30, 2019

What do we mean by identity?

Throughout our lives, we take on different identities. What we identify ourselves with ranges from the state identity provided at birth to the identities that we assign to ourselves and that are given to us by others. Depending on the communities that we interact with, we are inclined to tell different stories about ourselves. The goal may be either to stand out and portray a unique individual or to blend in with the crowd.

Trade-offs

In personal interactions, we can shift our attitude and character depending on the group we engage with. Thus, our counterparty will be able to recognize and respond with an adequate form of interaction. This form of selective disclosure of information is not possible in formal interactions, such as those with government-regulated entities, nor on social media. Users are forced to choose between providing all information and gaining access to a service or product, or refusing and risking censorship, exclusion, or even becoming subject to state-enforced violence. As a result, personal credentials are scattered across platforms.

When signing up for a new service, the user has to weigh various trade-offs: one is between usability and privacy, another is between ease of use and the level of inclusion in one’s social circles. The less you know about someone, the harder it is to interact with that person. Similarly, no single entity has the need, nor should be given the right, to access all data that has been generated and gathered on a user.

However, with the centralisation of social networks and user-centric services, access to those vast amounts of information has been wrongfully claimed and exploited.

Solution

The following section provides an overview of several projects that are working on alternatives to the problems mentioned above. In the most general form, solutions allow users to register and store their identity credentials. Depending on the entity that they are interacting with, users can provide access to individual attributes that make up their identity. Imagine this to be similar to Facebook Sign-In, with the difference that you own your data instead of Facebook. Being able to modify the set of information disclosed on every sign-on will allow users to shift and shape their identity in accordance with social situations.

Digital Identities

We can differentiate between two broad types of identities, self-sovereign identities and centralised trusted identities. Self-sovereign identities allow users to own and control their identity without the need or influence of an external entity. In contrast, centralised trusted identities rely on a centralised body to provide and verify documentation. Both are designed for different use cases.

The following section provides an overview of three different digital identities, none of which utilise a blockchain.

State-maintained digital identities

The first is state-owned and maintained identities. If you have travelled abroad, purchased a car, or signed a rental agreement, chances are high that you needed a passport or ID card. The main problem with formal documentation is that it requires an authority that is globally recognised. According to the World Bank, an estimated 1 billion people do not have access to any documentation. To participate in daily activities, these individuals have to rely on the trust of their community. Interpersonal trust is not only highly time-consuming to establish but also insufficient for legal interactions, such as voting or buying a house.

Several European countries have started developing, testing and implementing digital identities, intending to make government services more inclusive. The principle is that once citizens have access to a government-issued identity, they can reuse the credential for all digital services. The most established implementation is in Estonia, whose government provides a digital identity to all of its citizens. Once users have the government-issued ID card, they can access a wide range of online services: they can view health records and medical prescriptions, sign e-documents, and vote.

A similar implementation was provided by u-Port during a pilot program in Zug, Switzerland. U-Port allows its users to register their identity and interact with the Ethereum blockchain. After enrolling with the city hall and u-Port, users have to go to the city hall to verify their identity. Once approved, users can interact with financial services.

Germany and other states are currently working on identity solutions based on the same principles. As a result, they will all run into the same problem: government-issued digital identities depend on the government to provide the necessary infrastructure. If this infrastructure is not available, the e-identity will not be of much use, resulting in a chicken-and-egg problem.

Decentralised approaches

Self-sovereign identities rely on cryptographic solutions, such as the web of trust, to establish higher confidence in the information that users provide on a platform. The premise is that the more people know a user and approve of her/his identity, the more credibility (s)he has within a given system. Note that the purpose is not to uniquely identify someone based on who they claim to be, but instead based on who they are in relation to everybody else.

If the only credential known in the system is a user’s public key, members of the system can sign off on each other’s public keys to build trust relations. Depending on the implementation of the web of trust, users gain more trust from other users in the system the more people have signed off on their public key. The same mechanism can be implemented in the form of a voting ring, whereby users have to be endorsed by the users they previously verified. A user will only be trusted if (s)he is part of a cycle of trust. Blockchain-based implementations that rely on the web of trust are Sovrin and BrightID.
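The two ideas in the paragraph above, trust that grows with the number of sign-offs and trust that requires membership in a cycle, can be modelled as a directed graph of endorsements. This is a toy sketch for illustration only; the graph shape and function names are hypothetical and do not describe how Sovrin or BrightID actually work:

```ts
// Hypothetical web-of-trust graph: signer's public key -> keys that signer endorsed.
type Endorsements = Map<string, Set<string>>;

// Trust score: number of distinct other keys that have signed off on `user`.
function trustScore(graph: Endorsements, user: string): number {
  let score = 0;
  for (const [signer, endorsed] of graph) {
    if (signer !== user && endorsed.has(user)) score++;
  }
  return score;
}

// True if following endorsements from `user` eventually leads back to `user`,
// i.e. the user sits on a cycle of trust.
function inTrustCycle(graph: Endorsements, user: string): boolean {
  const stack = [...(graph.get(user) ?? [])];
  const visited = new Set<string>();
  while (stack.length > 0) {
    const key = stack.pop()!;
    if (key === user) return true;
    if (visited.has(key)) continue;
    visited.add(key);
    stack.push(...(graph.get(key) ?? []));
  }
  return false;
}

const graph: Endorsements = new Map([
  ["alice", new Set(["bob"])],
  ["bob", new Set(["carol"])],
  ["carol", new Set(["alice"])],
  ["dave", new Set(["alice"])],
]);
console.log(trustScore(graph, "alice"), inTrustCycle(graph, "alice")); // 2 true
console.log(trustScore(graph, "dave"), inTrustCycle(graph, "dave")); // 0 false
```

Here dave endorses alice but nobody endorses dave, so he neither gains a score nor closes a cycle, even though he is connected to one.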

An alternative to the web of trust is to identify users based on what they have. Consensus solutions rely on a similar premise. To gain trust in the system, users have to provide value. In case they behave maliciously, the value provided will be taken away. Ultimately, the more value a user is willing to provide to the network, the less likely (s)he will want it to be slashed, and the more trustworthy the user will be.

An example of a non-blockchain-based system is Scuttlebutt. Scuttlebutt is a decentralised social network in which users identify themselves with their public key, which is linked to the user’s device. All data that the user generates and collects from other users lives on the user’s machine. As a result, the relationships between humans correspond to the relationships between computers.

Moving forward

Currently, there is no “solve it all” solution for decentralised identities. Depending on the use case, different identity solutions will be utilised to generate, store and maintain the user’s identity. Blockchain will become most important in providing secure data storage for people’s credentials, whether those are issued by a central authority or approved by a network of users. Currently, people keep their most relevant information, such as health records, offline and linked to an analogue, state-issued ID. While such documents can be lost, they can only be copied if someone gains physical access to them. In contrast, any information that is kept on a centralised server becomes vulnerable to unauthorised access if it has not been encrypted on the user side with the user’s key.

Utilising a blockchain architecture can remove the need for all middlemen while control remains with the user. If done right, this will not only lead to higher security but also empower users to take ownership of how their identity is generated, maintained and used. Once creators no longer have to build their business model around implementing use-case-specific identities, unprecedented applications can emerge.

TLDR

We rely heavily on personal, organisational and state-issued identities in our day-to-day lives, often forcing us to trade privacy for usability. While government-issued IDs rely on a trust-enhancing infrastructure, organisations collect and exploit user data in exchange for access to online services.

Solutions are based either on self-sovereign identities or on centralised trusted identities, with the common goal of empowering users to hold and grant access to their personal information. Where the infrastructure is in place, governments are working on e-identities based on state-issued IDs. These employ centralised storage, which presents a common attack vector. In contrast, decentralised identity solutions rely on the web of trust or on the unique value that an individual offers to the network. In these systems, identity is based on the trust people place in each other through their interactions.

While several projects experiment with decentralised, user-owned identities, an application has yet to emerge that provides users with self-sovereignty, usability, and trust.

To follow our progress and learn how you can get involved, check out the following links:

Discord (http://near.chat/)

Beta Program (https://pages.near.org/beta/)

---

NEP: 455

Title: Parameter Compute Costs

Author: Andrei Kashin <andrei.kashin@near.org>, Jakob Meier <jakob@near.org>

DiscussionsTo: https://github.com/nearprotocol/neps/pull/455

Status: Final

Type: Protocol Track

Category: Runtime

Created: 26-Jan-2023

---

## Summary

Introduce compute costs, decoupled from gas costs, for individual parameters to safely limit the compute time it takes to process a chunk without introducing breaking changes for contracts.

## Motivation

For NEAR blockchain stability, we need to ensure that blocks are produced regularly and in a timely manner.

The chunk gas limit is used to ensure that the time it takes to validate a chunk is strictly bounded by limiting the total gas cost of operations included in the chunk.

This process relies on accurate estimates of gas costs for individual operations.

Underestimating these costs leads to *undercharging*, which can increase chunk validation time and slow down chunk production.

As a concrete example, in the past we undercharged contract deployment.

The responsible team implemented a number of optimizations, but a gas increase was still necessary.

[Meta-pool](https://github.com/Narwallets/meta-pool/issues/21) and [Sputnik-DAO](https://github.com/near-daos/sputnik-dao-contract/issues/135) were affected by this change, among others.

Finding all affected parties and reaching out to them before implementing the change took a lot of effort, prolonging the period during which the network was exposed to the attack.

Another motivating example is the upcoming incremental deployment of Flat Storage, where during one of the intermediate stages we expect the storage operations to be undercharged.

See the explanation in the next section for more details.

## Rationale

Separating compute costs from gas costs will allow us to safely limit the compute usage for processing the chunk while still keeping the gas prices the same and thus not breaking existing contracts.

An important challenge with undercharging is that it cannot be disclosed widely, because it could be exploited to increase chunk production time, thereby impacting the stability of the network.

Adjusting the compute cost for an undercharged parameter eliminates the security concern and allows us to publicly discuss ways to solve the undercharging (optimizing the implementation or the smart contract, or increasing the gas cost).

This design is easy to implement, simple to reason about, and provides a clear way to address existing undercharging issues.

If we don't address the undercharging problems, we increase the risks that they will be exploited.

Specifically for the Flat Storage deployment, we [plan](https://github.com/near/nearcore/issues/8006) to stop charging TTN (touching trie node) gas costs; however, the intermediate implementation (read-only Flat Storage) will still incur these costs during writes, which introduces undercharging.

Setting temporary high compute costs for writes will ensure that this undercharging does not lead to long chunk processing times.

## Alternatives

### Increase the gas costs for undercharged operations

We could increase the gas costs for the operations that are undercharged to match the computational time it takes to process them according to the rule 1ms = 1TGas.

Pros:

- Does not require any new code or design work (but still requires a protocol version bump)

- Security implications are well-understood

Cons:

- Can break contracts that rely on current gas costs, in particular by steeply increasing operating costs for the most active users of the blockchain (Aurora and Sweat)

- Doing this safely and responsibly requires prior consent from the affected parties, which is hard to obtain without publicly disclosing security-sensitive information about the undercharging

In the case of flat storage specifically, this approach would result in a large increase in storage write costs (breaking many contracts) to enable a safe deployment of read-only flat storage, followed later by a correction of storage write costs once flat storage for writes is rolled out.

With compute costs, we will be able to roll out the read-only flat storage with minimal impact on deployed contracts.

### Adjust the chunk gas limit

We could continuously measure the chunk production time in nearcore clients and compare it to the gas burnt.

If the chunk producer observes undercharging, it decreases the limit.

If there is overcharging, the limit can be increased, up to at most 1000 Tgas.

To make such adjustments more predictable under spiky load, we also [limit](https://nomicon.io/Economics/Economic#transaction-fees) the change of the gas limit to 0.1% per block.
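
A minimal sketch of this alternative, assuming the chunk producer compares its measured processing time with the gas burnt under the 1ms = 1TGas rule. `nextGasLimit` and `maxChangeFactor` are hypothetical helpers, not nearcore code; the second one shows how far a 0.1% step can compound over many blocks.

```js
// Hypothetical sketch of the "adjust the chunk gas limit" alternative.
// Gas is in gas units (1 TGas = 10^12 gas), time in milliseconds.
const TGAS = 10n ** 12n;
const MAX_GAS_LIMIT = 1000n * TGAS; // the limit may never exceed 1000 Tgas

// Move the limit by 0.1% per block in the direction the measurement suggests.
function nextGasLimit(currentLimit, measuredMs, gasBurnt) {
  // Under 1 ms = 1 TGas, burning `gasBurnt` should take this long.
  const expectedMs = Number(gasBurnt) / Number(TGAS);
  const step = currentLimit / 1000n; // 0.1% of the current limit
  if (measuredMs > expectedMs) {
    // Undercharging: the chunk took longer than the gas paid for.
    return currentLimit - step;
  }
  if (measuredMs < expectedMs) {
    // Overcharging: raise the limit, but never above the cap.
    const raised = currentLimit + step;
    return raised > MAX_GAS_LIMIT ? MAX_GAS_LIMIT : raised;
  }
  return currentLimit;
}

// Largest factor by which the limit can change over `blocks` steps of 0.1%.
function maxChangeFactor(blocks) {
  return Math.pow(1.001, blocks);
}

console.log(maxChangeFactor(100).toFixed(2)); // 1.11
console.log(maxChangeFactor(1000).toFixed(2)); // 2.72
```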

Pros:

- Prevents moderate undercharging from stalling the network

- No protocol change necessary (as this feature is already [a part of the protocol](https://nomicon.io/Economics/Economic#transaction-fees)), we could easily experiment and revert if it does not work well

Cons:

- Very broad granularity --- undercharging in one parameter affects all users, even those that never use the undercharged parts

- Dependence on validator hardware --- someone running overspecced hardware will continuously want to increase the limit, others might run with underspecced hardware and continuously want to decrease the limit

- Malicious undercharging attacks are unlikely to be prevented by this --- a single 10x undercharged receipt still needs to be processed using the old limit.

Adjusting by at most 0.1% per block means the limit can change by at most 1.1x over 100 chunks and by up to 2.7x over 1000 chunks

- Conflicts with the transaction and receipt limit --- a transaction or receipt can (today) use up to 300Tgas.

The effective limit per chunk is `gas_limit` + 300Tgas since receipts are added to a chunk until one exceeds the limit and the last receipt is not removed.

Thus, even a gas limit of 0 gas only reduces the effective limit from 1300Tgas (with the maximum gas limit of 1000Tgas) to 300Tgas, which means a single 10x undercharged receipt can still result in a chunk with a compute usage of 3 seconds (equivalent to 3000TGas).
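
The arithmetic in the last bullet can be checked with a few lines. The helpers below are hypothetical; they only restate the rule that the receipt crossing the limit stays in the chunk, plus the 1ms = 1TGas conversion.

```js
// Hypothetical helpers restating the arithmetic above (not nearcore code).
const TGAS = 10n ** 12n;
const MAX_RECEIPT_GAS = 300n * TGAS; // a transaction or receipt can use up to 300 Tgas

// Receipts are added until one exceeds the limit, and that last receipt is kept,
// so a chunk can burn up to gasLimit + 300 Tgas.
function effectiveChunkLimit(gasLimit) {
  return gasLimit + MAX_RECEIPT_GAS;
}

// Processing time in ms for a receipt undercharged by `factor` (1 ms = 1 Tgas).
function receiptComputeMs(gasBurnt, factor) {
  return (Number(gasBurnt) / Number(TGAS)) * factor;
}

console.log(effectiveChunkLimit(1000n * TGAS) / TGAS); // 1300n
console.log(effectiveChunkLimit(0n) / TGAS); // 300n
console.log(receiptComputeMs(MAX_RECEIPT_GAS, 10)); // 3000, i.e. 3 seconds
```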

### Allow skipping chunks in the chain

Slow chunk production in one shard can introduce additional user-visible latency in all shards as the nodes expect a regular and timely chunk production during normal operation.

If processing the chunk takes much longer than 1.3s, it can cause the corresponding block and possibly more consecutive blocks to be skipped.

We could extend the protocol to produce empty chunks for some of the shards within the block (effectively skipping them) when processing the chunk takes longer than expected.

This would still ensure regular block production, at the cost of lower network throughput in that shard.

The chunk should still be included in a later block to avoid stalling the affected shard.

Pros:

- Fast and automatic adaptation to the blockchain workload

Cons:

- For the purpose of slashing, it is hard to distinguish situations where an honest block producer skips a chunk due to slowness from situations where the block producer is offline or is maliciously stalling block production. We would need some mechanism (e.g. on-chain voting) for nodes to agree that a chunk was skipped legitimately due to slowness; otherwise we introduce new attack vectors for stalling the network

## Specification

- **Chunk Compute Usage** -- total compute time spent on processing the chunk

- **Chunk Compute Limit** -- upper-bound for compute time spent on processing the chunk

- **Parameter Compute Cost** -- the numeric value in seconds corresponding to compute time that it takes to include an operation into the chunk

Today, gas has two somewhat orthogonal roles:

1. Gas is money. It is used to avoid spam by charging users

2. Gas is CPU time. It defines how many transactions fit in a chunk so that validators can apply it within a second

The idea is to decouple these two by introducing parameter compute costs.

Each gas parameter still has a gas cost that determines what users have to pay.

But when filling a chunk with transactions, parameter compute cost is used to estimate CPU time.

Ideally, all compute costs should match corresponding gas costs.

But when we discover undercharging issues, we can set a higher compute cost (this would require a protocol upgrade).

The stability concern is then resolved when the compute cost becomes active.

The ratio between compute cost and gas cost can be thought of as an undercharging factor.

If a gas cost is 2 times too low to guarantee stability, compute cost will be twice the gas cost.

A chunk then fills up twice as fast when gas for this parameter is burned.

This deterministically throttles the throughput to match what validators can actually handle.
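
To make the throttling effect concrete, here is a minimal sketch of filling a chunk by compute usage while users are still charged gas. The receipt shape `{ gas, compute }` and `fillChunk` are hypothetical; the sketch assumes, as elsewhere in this NEP, that the receipt crossing the limit is still included.

```js
// Hypothetical sketch: the chunk is filled by compute usage, users pay gas.
const TGAS = 10n ** 12n;

function fillChunk(receipts, computeLimit) {
  const included = [];
  let computeUsage = 0n;
  let gasBurnt = 0n;
  for (const receipt of receipts) {
    if (computeUsage >= computeLimit) break; // chunk is full
    included.push(receipt);
    computeUsage += receipt.compute; // counts towards the chunk compute limit
    gasBurnt += receipt.gas; // what users are charged
  }
  return { included, computeUsage, gasBurnt };
}

// Twenty receipts burning 100 Tgas each; `factor` is the compute/gas ratio.
function makeReceipts(factor) {
  return Array.from({ length: 20 }, () => ({
    gas: 100n * TGAS,
    compute: 100n * TGAS * BigInt(factor),
  }));
}

const limit = 1000n * TGAS;
console.log(fillChunk(makeReceipts(1), limit).included.length); // 10
console.log(fillChunk(makeReceipts(2), limit).included.length); // 5
```

With a factor of 2 the chunk holds half as many receipts and only 500 Tgas of gas is charged for it, while users pay the same gas per receipt as before.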

Compute costs influence the gas price adjustment logic described in https://nomicon.io/Economics/Economic#transaction-fees.

Specifically, we're now using compute usage instead of gas usage in the formula to make sure that the gas price increases if chunk processing time is close to the limit.
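
A minimal sketch of that substitution, assuming the linked Nomicon formula has the form `price * (1 + (used / limit - 1/2) * ADJ_FEE)` with a floor at a minimum gas price. `ADJ_FEE` and `MIN_GAS_PRICE` are assumed values for illustration, and floating point is used for readability where a real implementation would use exact integer arithmetic.

```js
// Hypothetical sketch: gas price update driven by compute usage instead of gas used.
const ADJ_FEE = 0.01; // assumed adjustment rate
const MIN_GAS_PRICE = 100_000_000; // assumed floor, in yoctoNEAR per gas

function nextGasPrice(prevPrice, computeUsage, computeLimit) {
  // Above half of the compute limit the price rises, below half it falls.
  const utilisation = computeUsage / computeLimit;
  const next = prevPrice * (1 + (utilisation - 0.5) * ADJ_FEE);
  return Math.max(next, MIN_GAS_PRICE);
}

// A chunk that burned only half its gas limit but hit its compute limit
// (because of undercharged operations) still pushes the price up.
console.log(nextGasPrice(1e9, 1000, 1000) > 1e9); // true
console.log(nextGasPrice(1e9, 500, 1000) === 1e9); // true
```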

Compute costs **do not** count towards the transaction/receipt gas limit of 300TGas, as that might break existing contracts by pushing their method calls over this limit.

Compute costs are static for each protocol version.

### Using Compute Costs

Compute costs different from gas costs are only a temporary solution.

Whenever we introduce a compute cost, we as the community can discuss this publicly and find a solution to the specific problem together.

For any active compute cost, a tracking GitHub issue in [`nearcore`](https://github.com/near/nearcore) should be created, tracking work towards resolving the undercharging. The reference to this issue should be added to this NEP.

In the best case, we find technical optimizations that allow us to decrease the compute cost to match the existing gas cost.

In other cases, the only solution is to increase the gas cost.

But the dApp developers who are affected by this change should have a chance to voice their opinion, suggest alternatives, and implement necessary changes before the gas cost is increased.

## Reference Implementation

The compute cost is a numeric value represented as `u64` in time units.

Value 1 corresponds to `10^-15` seconds or 1fs (femtosecond) to match the gas costs scale.

By default, the parameter compute cost matches the corresponding gas cost.
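
Because 1 Tgas corresponds to 1 ms, one gas unit corresponds to 1 fs, so compute costs and gas costs can be compared directly. A small sketch, with BigInt standing in for `u64` and hypothetical helper names:

```js
// Hypothetical helpers; BigInt stands in for the u64 used by nearcore.
const FS_PER_MS = 10n ** 12n; // 1 ms = 10^12 fs, just as 1 Tgas = 10^12 gas

// A parameter without an explicit compute cost uses its gas cost.
function effectiveComputeCost(gasCost, computeCost) {
  return computeCost ?? gasCost;
}

// Convert a compute cost (in fs) to milliseconds for display.
function computeCostToMs(computeCostFs) {
  const whole = computeCostFs / FS_PER_MS; // integer milliseconds
  const rest = computeCostFs % FS_PER_MS; // leftover femtoseconds
  return Number(whole) + Number(rest) / Number(FS_PER_MS);
}

console.log(computeCostToMs(300n * FS_PER_MS)); // 300 (a full 300 Tgas receipt)
console.log(effectiveComputeCost(5n)); // 5n (defaults to the gas cost)
```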

Compute costs should be applicable to all gas parameters, specifically including:

- [`ExtCosts`](https://github.com/near/nearcore/blob/6e08a41084c632010b1d4c42132ad58ecf1398a2/core/primitives-core/src/config.rs#L377)

- [`ActionCosts`](https://github.com/near/nearcore/blob/6e08a41084c632010b1d4c42132ad58ecf1398a2/core/primitives-core/src/config.rs#L456)

Changes necessary to support `ExtCosts`:

1. Track compute usage in [`GasCounter`](https://github.com/near/nearcore/blob/51670e593a3741342a1abc40bb65e29ba0e1b026/runtime/near-vm-logic/src/gas_counter.rs#L47) struct

2. Track compute usage in [`VMOutcome`](https://github.com/near/nearcore/blob/056c62183e31e64cd6cacfc923a357775bc2b5c9/runtime/near-vm-logic/src/logic.rs#L2868) struct (alongside `burnt_gas` and `used_gas`)

3. Store compute usage in [`ActionResult`](https://github.com/near/nearcore/blob/6d2f3fcdd8512e0071847b9d2ca10fb0268f469e/runtime/runtime/src/lib.rs#L129) and aggregate it across multiple actions by modifying [`ActionResult::merge`](https://github.com/near/nearcore/blob/6d2f3fcdd8512e0071847b9d2ca10fb0268f469e/runtime/runtime/src/lib.rs#L141)

4. Store compute costs in [`ExecutionOutcome`](https://github.com/near/nearcore/blob/578983c8df9cc36508da2fb4a205c852e92b211a/runtime/runtime/src/lib.rs#L266) and [aggregate them across all transactions](https://github.com/near/nearcore/blob/578983c8df9cc36508da2fb4a205c852e92b211a/runtime/runtime/src/lib.rs#L1279)

5. Enforce the chunk compute limit when the chunk is [applied](https://github.com/near/nearcore/blob/6d2f3fcdd8512e0071847b9d2ca10fb0268f469e/runtime/runtime/src/lib.rs#L1325)
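
The first three steps amount to carrying a second accumulator next to burnt gas. A hypothetical sketch of such a counter, with made-up parameter names and cost values (this is not nearcore's `GasCounter`):

```js
// Hypothetical counter that tracks burnt gas and compute usage side by side.
class GasAndComputeCounter {
  // costs: { [parameter]: { gas: bigint, compute?: bigint } }
  constructor(costs) {
    this.costs = costs;
    this.burntGas = 0n;
    this.computeUsage = 0n;
  }

  // Gas decides what the user pays; compute counts towards the chunk compute limit.
  charge(parameter, count = 1n) {
    const { gas, compute = gas } = this.costs[parameter]; // compute defaults to gas
    this.burntGas += gas * count;
    this.computeUsage += compute * count;
  }

  // Aggregate another result into this one, in the spirit of ActionResult::merge.
  merge(other) {
    this.burntGas += other.burntGas;
    this.computeUsage += other.computeUsage;
    return this;
  }
}

// Made-up cost table: one parameter undercharged by 6.83x, one not.
const costs = {
  undercharged_op: { gas: 100n, compute: 683n },
  regular_op: { gas: 50n },
};
const counter = new GasAndComputeCounter(costs);
counter.charge("undercharged_op", 2n);
counter.charge("regular_op");
console.log(counter.burntGas, counter.computeUsage); // 250n 1416n
```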

Additional changes necessary to support `ActionCosts`:

1. Return compute costs from [`total_send_fees`](https://github.com/near/nearcore/blob/578983c8df9cc36508da2fb4a205c852e92b211a/runtime/runtime/src/config.rs#L71)

2. Store aggregate compute cost in [`TransactionCost`](https://github.com/near/nearcore/blob/578983c8df9cc36508da2fb4a205c852e92b211a/runtime/runtime/src/config.rs#L22) struct

3. Propagate compute costs to [`VerificationResult`](https://github.com/near/nearcore/blob/578983c8df9cc36508da2fb4a205c852e92b211a/runtime/runtime/src/verifier.rs#L330)

Additionally, the gas price computation will need to be adjusted in [`compute_new_gas_price`](https://github.com/near/nearcore/blob/578983c8df9cc36508da2fb4a205c852e92b211a/core/primitives/src/block.rs#L328) to use compute cost instead of gas cost.

## Security Implications

Changes in compute costs will be publicly known and might reveal an undercharging that can be targeted by an attack.

In practice, it is not trivial to exploit the undercharging unless you know the exact shape of the workload that realizes it.

Also, after the compute cost is deployed, the undercharging should no longer be a threat for the network stability.

## Drawbacks

- Changing compute costs requires a protocol version bump (and a new binary release), limiting their use to undercharging problems that we're aware of

- Updating compute costs is a manual process and requires deliberately looking for potential underchargings

- The compute cost would not have a full effect on the last receipt in the chunk, decreasing its effectiveness to deal with undercharging.

This is because 1) a transaction or receipt today can use up to 300TGas and 2) receipts are added to a chunk until one exceeds the limit and the last receipt is not removed.

Therefore, a single receipt with 300TGas filled with undercharged operations with a factor of K can lead to overshooting the chunk compute limit by (K - 1) * 300TGas

- Underchargings can still be exploited to lower the throughput of the network at an unfairly low price and to increase waiting times for other users.

This is inevitable for any proposal that doesn't change the gas costs and must be resolved by improving the performance or increasing the gas costs

- Even without malicious intent, the effective peak throughput of the network will decrease when the chunks include undercharged operations (as the stopping condition based on compute costs for filling the chunk becomes stricter).

Most of the time, this is not a problem, as the network operates below capacity.

The effects will also be softened by the fact that undercharged operations comprise only a fraction of the workload.

For example, the planned increase of the TTN compute cost alongside the Flat Storage MVP is less critical because you cannot fill a receipt with only TTN costs; there will always be other storage costs and ~5Tgas of overhead just to start a function call.

So even with a 10x difference between gas and compute costs, the DoS only becomes 5x cheaper instead of 10x.
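
Two of the figures above are simple arithmetic. The sketch below, with hypothetical helper names, computes the worst-case overshoot from the last receipt and the gas-weighted compute/gas factor of a receipt that mixes undercharged and correctly charged operations. The gas split in the example is made up, chosen only to show how dilution turns a 10x factor into 5x.

```js
// Hypothetical helpers for the drawbacks above; amounts are in Tgas.
const MAX_RECEIPT_TGAS = 300;

// Overshoot of the chunk compute limit by a 300 Tgas receipt made entirely of
// operations undercharged by a factor of k, as stated in the drawbacks.
function worstCaseOvershootTgas(k) {
  return (k - 1) * MAX_RECEIPT_TGAS;
}

// Effective compute/gas factor of a receipt: a gas-weighted average of the
// factors of the operations it contains.
function effectiveFactor(parts) {
  const totalGas = parts.reduce((sum, p) => sum + p.gas, 0);
  const totalCompute = parts.reduce((sum, p) => sum + p.gas * p.factor, 0);
  return totalCompute / totalGas;
}

console.log(worstCaseOvershootTgas(10)); // 2700
// Made-up split: 4 parts of gas on a 10x undercharged operation, 5 parts on
// correctly charged operations (factor 1).
console.log(effectiveFactor([{ gas: 4, factor: 10 }, { gas: 5, factor: 1 }])); // 5
```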

## Unresolved Issues

## Future possibilities

We can also think about compute costs smaller than gas costs.

For example, if we charge gas instead of token balance for extra storage bytes as proposed in [NEP-448](https://github.com/near/NEPs/pull/448), it would make sense to set the compute cost to 0 for the part that covers on-chain storage, should the throttling due to the increased gas cost become problematic.

Otherwise, the throughput would be throttled unnecessarily.

A further option would be to change compute costs dynamically without a protocol upgrade when block production has become too slow.

This would be a catch-all, self-healing solution that requires zero intervention from anyone.

The network would simply throttle throughput when block time remains too high for long enough.

Pursuing this approach would require additional design work:

- On-chain voting to agree on new values of costs, given that inputs to the adjustment process are not deterministic (measurements of wall clock time it takes to process receipt on particular validator)

- Ensuring that dynamic adjustment is done in a safe way that does not lead to erratic behavior of costs (and as a result unpredictable network throughput).

Having some experience operating this mechanism manually would be valuable before introducing automation and addressing the challenges described in https://github.com/near/nearcore/issues/8032#issuecomment-1362564330.

The idea of introducing a chunk limit for compute resource usage naturally extends to other resource types, for example RAM usage, Disk IOPS, [Background CPU Usage](https://github.com/near/nearcore/issues/7625).

This would allow us to align the pricing model with cloud offerings familiar to many users, while still using gas as a common denominator to simplify UX.

## Changelog

### 1.0.0 - Initial Version

This NEP was approved by Protocol Working Group members on March 16, 2023 ([meeting recording](https://www.youtube.com/watch?v=4VxRoKwLXIs)):

- [Bowen's vote](https://github.com/near/NEPs/pull/455#issuecomment-1467023424)

- [Marcelo's vote](https://github.com/near/NEPs/pull/455#pullrequestreview-1340887413)

- [Marcin's vote](https://github.com/near/NEPs/pull/455#issuecomment-1471882639)

### 1.0.1 - Storage Related Compute Costs

Add five compute cost values for protocol version 61 and above.

- `wasm_touching_trie_node`

- `wasm_storage_write_base`

- `wasm_storage_remove_base`

- `wasm_storage_read_base`

- `wasm_storage_has_key_base`

For the exact values, please refer to the table at the bottom.

The intention behind these increased compute costs is to address the issue of storage accesses taking longer than the allocated gas costs, particularly in cases where RocksDB, the underlying storage system, is too slow. These values have been chosen to ensure that validators with recommended hardware can meet the required timing constraints.

([Analysis Report](https://github.com/near/nearcore/issues/8006))

The protocol team at Pagoda is actively working on optimizing the nearcore client storage implementation. This should eventually allow us to lower the compute cost parameters again.

Progress on this work is tracked here: https://github.com/near/nearcore/issues/8938.

#### Benefits

- Among the alternatives, this is the easiest to implement.

- It allows us to publicly discuss undercharging issues before they are fixed.

#### Concerns

No concerns that need to be addressed. The drawbacks listed in this NEP are minor compared to the benefits that it will bring. And implementing this NEP is strictly better than what we have today.

## Copyright

Copyright and related rights waived via [CC0](https://creativecommons.org/publicdomain/zero/1.0/).

## References

- https://gov.near.org/t/proposal-gas-weights-to-fight-instability-to-due-to-undercharging/30919

- https://github.com/near/nearcore/issues/8032

## Live Compute Costs Tracking

| Parameter Name | Compute / Gas factor | First version | Last version | Tracking issue |
| -------------- | -------------------- | ------------- | ------------ | -------------- |
| wasm_touching_trie_node | 6.83 | 61 | *TBD* | [nearcore#8938](https://github.com/near/nearcore/issues/8938) |
| wasm_storage_write_base | 3.12 | 61 | *TBD* | [nearcore#8938](https://github.com/near/nearcore/issues/8938) |
| wasm_storage_remove_base | 3.74 | 61 | *TBD* | [nearcore#8938](https://github.com/near/nearcore/issues/8938) |
| wasm_storage_read_base | 3.55 | 61 | *TBD* | [nearcore#8938](https://github.com/near/nearcore/issues/8938) |
| wasm_storage_has_key_base | 3.70 | 61 | *TBD* | [nearcore#8938](https://github.com/near/nearcore/issues/8938) |
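
Since each factor in the table is the ratio of compute cost to gas cost, the compute cost implied by a given gas cost can be approximated as below. The factors are copied from the table; the gas cost passed in is a made-up input, and the result is only approximate because the factors are rounded to two decimals.

```js
// Compute/gas factors from the table above (protocol version 61 onwards).
const COMPUTE_GAS_FACTORS = {
  wasm_touching_trie_node: 6.83,
  wasm_storage_write_base: 3.12,
  wasm_storage_remove_base: 3.74,
  wasm_storage_read_base: 3.55,
  wasm_storage_has_key_base: 3.70,
};

// Approximate compute cost for a parameter; parameters not listed keep a
// compute cost equal to their gas cost (factor 1).
function approxComputeCost(parameter, gasCost) {
  const factor = COMPUTE_GAS_FACTORS[parameter] ?? 1;
  return Math.round(gasCost * factor);
}

console.log(approxComputeCost("wasm_touching_trie_node", 1_000_000)); // 6830000
console.log(approxComputeCost("some_other_parameter", 1_000_000)); // 1000000
```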


---

id: chain-signatures

title: Chain Signatures

---

import Tabs from '@theme/Tabs';

import TabItem from '@theme/TabItem';

import {CodeTabs, Language, Github} from "@site/src/components/codetabs"

Chain signatures enable NEAR accounts, including smart contracts, to sign and execute transactions across many blockchain protocols.

This unlocks the next level of blockchain interoperability by giving ownership of diverse assets, cross-chain accounts, and data to a single NEAR account.

:::info

This guide will take you through a step-by-step process for creating a Chain Signature.

⭐️ For a deep dive into the concepts of Chain Signatures see [What are Chain Signatures?](/concepts/abstraction/chain-signatures)

⭐️ For complete examples of a NEAR account performing transactions in other chains:

- [CLI script](https://github.com/mattlockyer/mpc-script)

- [web-app example](https://github.com/near-examples/near-multichain)

- [component example](https://test.near.social/bot.testnet/widget/chainsig-sign-eth-tx)

:::

---

## Create a Chain Signature

There are five steps to create a Chain Signature:

1. [Deriving the Foreign Address](#1-deriving-the-foreign-address) - Construct the address that will be controlled on the target blockchain

2. [Creating a Transaction](#2-creating-the-transaction) - Create the transaction or message to be signed

3. [Requesting a Signature](#3-requesting-the-signature) - Call the NEAR `multichain` contract requesting it to sign the transaction

4. [Reconstructing the Signature](#4-reconstructing-the-signature) - Reconstruct the signature from the MPC service's response

5. [Relaying the Signed Transaction](#5-relaying-the-signature) - Send the signed transaction to the destination chain for execution

_Diagram of a chain signature in NEAR_

:::info MPC testnet contracts

If you want to try things out, these are the smart contracts available on `testnet`:

- `multichain-testnet-2.testnet`: MPC signer contract

- `canhazgas.testnet`: [Multichain Gas Station](multichain-gas-relayer/gas-station.md) contract

- `nft.kagi.testnet`: [NFT Chain Key](nft-keys.md) contract

:::

---

## 1. Deriving the Foreign Address

Chain Signatures use [`derivation paths`](../../1.concepts/abstraction/chain-signatures.md#one-account-multiple-chains) to represent accounts on the target blockchain. The external address to be controlled can be deterministically derived from:

- The NEAR address (e.g., `example.near`, `example.testnet`, etc.)

- A derivation path (a string such as `ethereum-1`, `ethereum-2`, etc.)

- The MPC service's public key

We provide code to derive the address, as it's a complex process that involves multiple steps of hashing and encoding:

<Tabs groupId="code-tabs">

<TabItem value="Ξ Ethereum">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/ethereum.js" start="14" end="18" />

</TabItem>

<TabItem value="₿ Bitcoin">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/bitcoin.js" start="14" end="18" />

</TabItem>

</Tabs>

:::tip

The same NEAR account and path will always produce the same address on the target blockchain.

- `example.near` + `ethereum-1` = `0x1b48b83a308ea4beb845db088180dc3389f8aa3b`

- `example.near` + `ethereum-2` = `0x99c5d3025dc736541f2d97c3ef3c90de4d221315`

:::

---

## 2. Creating the Transaction

What needs to be signed (a transaction, message, data, etc.) varies depending on the target blockchain, but it is generally the hash of the message or transaction.

<Tabs groupId="code-tabs">

<TabItem value="Ξ Ethereum">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/ethereum.js"

start="32" end="48" />

In Ethereum, constructing the transaction is simple since you only need to specify the address of the receiver and how much you want to send.

</TabItem>

<TabItem value="₿ Bitcoin">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/bitcoin.js"

start="28" end="80" />

In Bitcoin, you construct a new transaction by using all the Unspent Transaction Outputs (UTXOs) of the account as inputs, and then specifying the output address and the amount you want to send.

</TabItem>

</Tabs>
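
For the Bitcoin case, the amounts work out as in the sketch below. The UTXO shape, the flat `feeSats` value and the change output back to the sender are assumptions for illustration (returning change is standard Bitcoin practice, since any input value not assigned to an output is paid to miners as fee); the linked example may handle these details differently.

```js
// Hypothetical sketch: spend all UTXOs, pay `amountSats`, return the change.
// utxos: [{ txid: string, vout: number, value: number /* satoshis */ }]
function planBtcTransfer(utxos, toAddress, amountSats, changeAddress, feeSats) {
  const inputs = utxos.map(({ txid, vout }) => ({ txid, vout }));
  const totalIn = utxos.reduce((sum, u) => sum + u.value, 0);
  const change = totalIn - amountSats - feeSats;
  if (change < 0) throw new Error("insufficient funds");

  const outputs = [{ address: toAddress, value: amountSats }];
  // Without a change output, the leftover value would go to miners as fee.
  // (A real wallet would also skip change below the dust limit.)
  if (change > 0) outputs.push({ address: changeAddress, value: change });
  return { inputs, outputs };
}

const plan = planBtcTransfer(
  [{ txid: "aa", vout: 0, value: 5000 }, { txid: "bb", vout: 1, value: 3000 }],
  "receiver-address", // placeholder addresses
  6000,
  "sender-address",
  500,
);
console.log(plan.outputs); // 6000 sats to the receiver, 1500 sats of change back
```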

---

## 3. Requesting the Signature

Once the transaction is created and ready to be signed, a signature request is made by calling `sign` on the [MPC smart contract](https://github.com/near/mpc-recovery/blob/develop/contract/src/lib.rs#L298).

The method requires two parameters:

1. The `transaction` to be signed for the target blockchain

2. The derivation `path` for the account we want to use to sign the transaction

<Tabs groupId="code-tabs">

<TabItem value="Ξ Ethereum">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/ethereum.js"

start="57" end="61" />

</TabItem>

<TabItem value="₿ Bitcoin">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/bitcoin.js"

start="87" end="98" />

For Bitcoin, all UTXOs are signed independently and then combined into a single transaction.

</TabItem>

</Tabs>

:::tip

Notice that the `payload` is reversed before requesting the signature, to match the little-endian format expected by the contract.

:::
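
As a rough illustration of the byte reversal, the sketch below turns a 32-byte hash (hex) into a byte array, reverses it, and pairs it with the derivation path. The argument names `payload` and `path` follow the wording on this page and are assumptions; check the linked example and the contract for the exact call shape.

```js
// Hypothetical sketch of preparing the `sign` arguments.
function hexToBytes(hex) {
  const clean = hex.startsWith("0x") ? hex.slice(2) : hex;
  if (!/^[0-9a-fA-F]{64}$/.test(clean)) throw new Error("expected a 32-byte hex hash");
  const bytes = [];
  for (let i = 0; i < clean.length; i += 2) {
    bytes.push(parseInt(clean.slice(i, i + 2), 16));
  }
  return bytes;
}

function buildSignArgs(txHashHex, path) {
  // Reverse the bytes to the little-endian order expected by the contract.
  const payload = hexToBytes(txHashHex).reverse();
  return { payload, path };
}

const args = buildSignArgs("0x" + "00".repeat(31) + "ff", "ethereum-1");
console.log(args.payload[0], args.payload.length, args.path); // 255 32 ethereum-1
```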

:::info

The contract will take some time to respond, as the `sign` method repeatedly calls itself while waiting for the **MPC service** to sign the transaction.

<details>

<summary> A Contract Recursively Calling Itself? </summary>

NEAR smart contracts are unable to halt execution and await the completion of a process. To solve this, one can make the contract call itself again and again checking on each iteration to see if the result is ready.

**Note:** Each call takes one block, which equates to ~1 second of waiting. Eventually, the contract either returns the result provided by an external party or fails with an error after running out of gas while waiting.

</details>

:::

---

## 4. Reconstructing the Signature

The MPC contract will not return the signature of the transaction itself, but the elements needed to reconstruct the signature.

This allows the contract to generalize the signing process for multiple blockchains.

<Tabs groupId="code-tabs">

<TabItem value="Ξ Ethereum">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/ethereum.js"

start="62" end="71" />

In Ethereum, the signature is reconstructed by concatenating the `r`, `s`, and `v` values returned by the contract.

The `v` parameter is a parity bit that depends on the `sender` address. We reconstruct the signature using both possible values (`v=0` and `v=1`) and check which one corresponds to our `sender` address.

</TabItem>

<TabItem value="₿ Bitcoin">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/bitcoin.js"

start="105" end="116" />

In Bitcoin, the signature is reconstructed by concatenating the `r` and `s` values returned by the contract.

</TabItem>

</Tabs>
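
For Ethereum, the parity check described above can be sketched as follows. `recoverAddress` is injected rather than imported so the sketch stays self-contained; in practice it would come from an Ethereum library. `r` and `s` are assumed to be 32-byte hex strings without a `0x` prefix.

```js
// Hypothetical sketch: try both parity values and keep the one matching `sender`.
function pickEthSignature(rHex, sHex, msgHash, sender, recoverAddress) {
  for (const v of [0, 1]) {
    // r (32 bytes) || s (32 bytes) || v (1 byte), hex-encoded.
    // Some libraries expect the legacy 27/28 encoding of v instead of 0/1.
    const signature = "0x" + rHex + sHex + v.toString(16).padStart(2, "0");
    const recovered = recoverAddress(msgHash, signature);
    if (recovered.toLowerCase() === sender.toLowerCase()) {
      return { signature, v };
    }
  }
  throw new Error("no parity value matches the sender address");
}

// Stand-in recoverer for demonstration only: pretends v = 1 yields the sender.
const fakeRecover = (_hash, sig) => (sig.endsWith("01") ? "0xAbC" : "0x0000");
console.log(pickEthSignature("aa".repeat(32), "bb".repeat(32), "0x00", "0xabc", fakeRecover).v); // 1
```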

---

## 5. Relaying the Signature

Once we have reconstructed the signature, we can relay it to the corresponding network. This will once again vary depending on the target blockchain.

<Tabs groupId="code-tabs">

<TabItem value="Ξ Ethereum">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/ethereum.js"

start="80" end="84" />

</TabItem>

<TabItem value="₿ Bitcoin">

<Github language="js"

url="https://github.com/near-examples/near-multichain/blob/main/src/services/bitcoin.js"

start="119" end="127" />

</TabItem>

</Tabs>
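
For Ethereum, relaying usually comes down to the standard `eth_sendRawTransaction` JSON-RPC method. A minimal sketch, assuming the signed transaction is already serialized to hex and `rpcUrl` points at an Ethereum JSON-RPC endpoint of your choice (`fetch` is built into browsers and Node 18+); the linked example may use a library for this step instead.

```js
// Hypothetical sketch of relaying a signed, serialized Ethereum transaction.
function buildSendRawTxRequest(signedTxHex, id = 1) {
  return {
    jsonrpc: "2.0",
    id,
    method: "eth_sendRawTransaction",
    params: [signedTxHex.startsWith("0x") ? signedTxHex : "0x" + signedTxHex],
  };
}

async function relayEthTransaction(rpcUrl, signedTxHex) {
  const res = await fetch(rpcUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildSendRawTxRequest(signedTxHex)),
  });
  const { result, error } = await res.json();
  if (error) throw new Error(error.message);
  return result; // the transaction hash
}
```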

:::info

⭐️ For a deep dive into the concepts of Chain Signatures see [What are Chain Signatures?](/concepts/abstraction/chain-signatures)

⭐️ For complete examples of a NEAR account performing Ethereum transactions:

- [web-app example](https://github.com/near-examples/near-multichain)

- [component example](https://test.near.social/bot.testnet/widget/chainsig-sign-eth-tx)

:::


---

id: meta-transactions

title: Meta Transactions

---

# Meta Transactions

[NEP-366](https://github.com/near/NEPs/pull/366) introduced the concept of meta transactions to NEAR Protocol. This feature allows users to execute transactions on NEAR without owning any gas or tokens. To enable this, users construct and sign transactions off-chain, and a third party (the relayer) covers the fees of submitting and executing the transaction.

## Overview

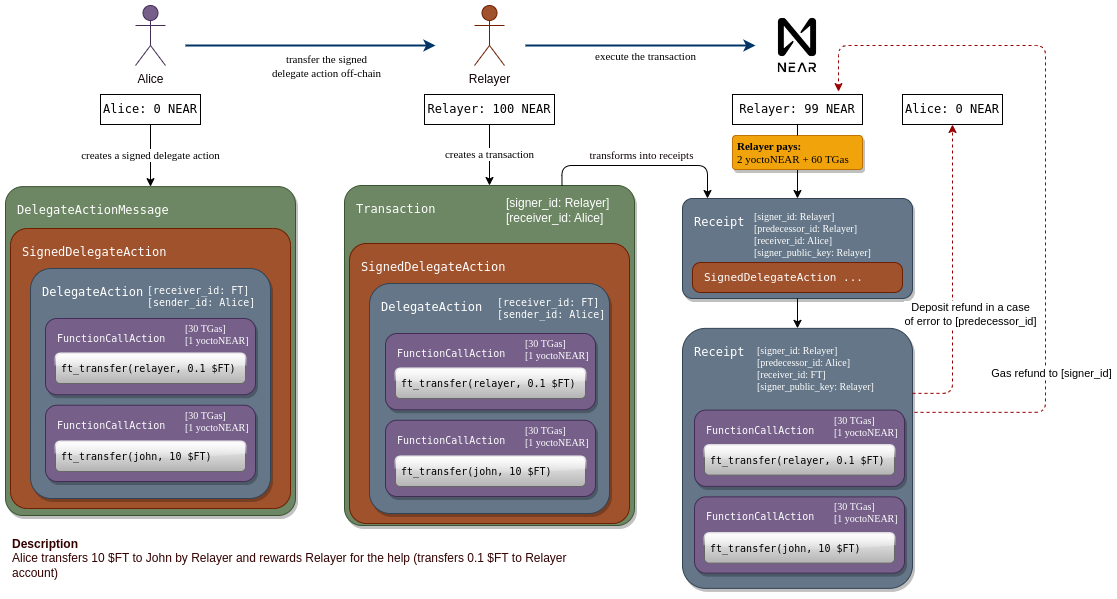

_Credits for the diagram go to the NEP authors Alexander Fadeev and Egor Uleyskiy._

The graphic shows an example use case for meta transactions. Alice owns an amount of the fungible token `$FT` and wants to transfer some to John. To do that, she needs to call `ft_transfer("john", 10)` on an account named `FT`.

The problem is that Alice has no NEAR tokens. She only has a NEAR account that someone else funded for her, and for which she owns the private keys. She could create a signed transaction that makes the `ft_transfer("john", 10)` call, but validator nodes will not accept it because she does not have the NEAR token balance needed to purchase the gas.

With meta transactions, Alice can create a `DelegateAction`, which is very similar to a transaction. It also contains a list of actions to execute and a single receiver for those actions. She signs the `DelegateAction` and forwards it (off-chain) to a relayer. The relayer wraps it in a transaction, of which the relayer is the signer and therefore pays the gas costs. If the inner actions have an attached token balance, this is also paid for by the relayer.

On chain, the `SignedDelegateAction` inside the transaction is converted to an action receipt with the same `SignedDelegateAction` on the relayer's shard. The receipt is forwarded to Alice's account, which unpacks the `SignedDelegateAction` and verifies that it is signed by Alice with a valid nonce, etc. If all checks are successful, a new action receipt with the inner actions as its body is sent to `FT`. There, the `ft_transfer` call finally executes.
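
The checks on Alice's side can be sketched as below. Field names follow NEP-366's `DelegateAction` (`sender_id`, `receiver_id`, `actions`, `nonce`, `max_block_height`, `public_key`); `verifySignature`, the `accessKeys` map and the result shape are hypothetical stand-ins, and the real runtime may perform additional checks.

```js
// Hypothetical sketch of validating a SignedDelegateAction on the sender's shard.
function checkSignedDelegateAction(signed, ctx) {
  const da = signed.delegate_action;
  // The receipt must execute on the account that signed the delegate action.
  if (da.sender_id !== ctx.accountId) return { ok: false, error: "wrong sender" };
  if (!ctx.verifySignature(da, signed.signature, da.public_key)) {
    return { ok: false, error: "invalid signature" };
  }
  if (ctx.blockHeight > da.max_block_height) return { ok: false, error: "expired" };
  const key = ctx.accessKeys.get(da.public_key);
  if (!key) return { ok: false, error: "unknown access key" };
  // The nonce must increase, so the same delegate action cannot be replayed.
  if (da.nonce <= key.nonce) return { ok: false, error: "nonce too low" };
  key.nonce = da.nonce;
  // On success, the inner actions are sent to the receiver (e.g. FT) as a new receipt.
  return { ok: true, receiverId: da.receiver_id, actions: da.actions };
}
```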

## Relayer

Meta transactions only work with a [relayer](relayers.md). This is an application-layer concept, implemented off-chain. Think of it as a server that accepts a `SignedDelegateAction`, performs some checks on it, and eventually forwards it inside a transaction to the blockchain network.

A relayer may choose to offer its service for free, but that is not going to be financially viable in the long term. Instead, it could easily have the user pay by other means, outside of the NEAR blockchain. With some tricks, it can even be paid using fungible tokens on NEAR.

In the example visualized above, the payment is done using `$FT`. Together with the transfer to John, Alice also adds an action to pay 0.1 `$FT` to the relayer. The relayer checks the content of the `SignedDelegateAction` and only processes it if this payment is included as the first action. In this way, the relayer will be paid in the same transaction as John.
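A relayer's check for this could look roughly like the following. It is a hypothetical policy function, not part of any NEAR library. It assumes the delegate action uses a plain-object shape with decoded JSON `args` (on chain, function call arguments are raw bytes), that `$FT` lives at the made-up account `ft.near`, and that `$FT` has 2 decimals, so 0.1 `$FT` is `"10"` and 10 `$FT` is `"1000"` in the token's smallest unit.

```js
// Hypothetical relayer-side policy check (not part of any NEAR library).
// Accept a delegate action only if its FIRST inner action pays the relayer
// at least `minFee` of the accepted fungible token.
function isRelayerPaidFirst(delegateAction, { relayerId, acceptedTokenId, minFee }) {
  // All inner actions share one receiver, so fee and payload go to the same FT contract.
  if (delegateAction.receiver_id !== acceptedTokenId) return false;

  const first = delegateAction.actions[0];
  if (!first || !first.FunctionCall) return false;

  const { method_name, args } = first.FunctionCall;
  if (method_name !== "ft_transfer" || args.receiver_id !== relayerId) return false;

  // NEP-141 amounts are decimal strings; compare as BigInt to avoid rounding.
  return BigInt(args.amount) >= BigInt(minFee);
}

const ftTransfer = (receiverId, amount) => ({
  FunctionCall: { method_name: "ft_transfer", args: { receiver_id: receiverId, amount }, deposit: "1" },
});

const delegateAction = {
  sender_id: "alice.near",
  receiver_id: "ft.near",
  actions: [ftTransfer("relayer.near", "10"), ftTransfer("john.near", "1000")],
};
const policy = { relayerId: "relayer.near", acceptedTokenId: "ft.near", minFee: "10" };

console.log(isRelayerPaidFirst(delegateAction, policy)); // true
```

Requiring the fee to come first keeps the rule simple, but it does not by itself guarantee that the fee transfer succeeds.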

:::warning Keep in mind

The payment to the relayer is still not guaranteed. It could be that Alice does not have sufficient `$FT` and the transfer fails. To mitigate this, the relayer should check Alice's `$FT` balance first.

:::

Unfortunately, this still does not guarantee that the balance will be high enough once the meta transaction executes. The relayer could waste NEAR gas without compensation if Alice somehow reduces her `$FT` balance at just the right moment. Some level of trust between the relayer and its user is therefore required.

## Limitations

### Single receiver

A meta transaction, like a normal transaction, can only have one receiver. It's possible to chain additional receipts afterwards. But crucially, there is no atomicity guarantee and no roll-back mechanism.

### Accounts must be initialized

Any transaction, including meta transactions, must use nonces to avoid replay attacks. The nonce must be chosen by Alice and compared to a nonce stored on chain. This nonce is stored in the access key information, which is initialized when the account is created.
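A minimal sketch of the replay check, assuming a simplified model in which on-chain access keys are a `Map` keyed by a hypothetical `"accountId:publicKey"` string. As with ordinary transactions, the sketch requires the new nonce to be strictly greater than the stored one and then stores it; the real runtime performs additional checks that are omitted here.

```js
// Simplified model of the nonce check for a delegate action.
// `accessKeys` stands in for on-chain state: "accountId:publicKey" -> { nonce }.
function checkAndBumpNonce(accessKeys, { sender_id, public_key, nonce }) {
  const accessKey = accessKeys.get(`${sender_id}:${public_key}`);
  if (!accessKey) {
    // No access key on chain: there is no stored nonce to compare against.
    throw new Error("access key not found: the account must be initialized first");
  }
  if (nonce <= accessKey.nonce) {
    // Reusing an old nonce would replay an already executed delegate action.
    throw new Error(`invalid nonce ${nonce}: must be greater than ${accessKey.nonce}`);
  }
  accessKey.nonce = nonce; // store the new nonce so this action cannot be replayed
}

const accessKeys = new Map([["alice.near:ed25519:<alice-public-key>", { nonce: 41 }]]);
const action = { sender_id: "alice.near", public_key: "ed25519:<alice-public-key>", nonce: 42 };

checkAndBumpNonce(accessKeys, action); // accepted; the stored nonce becomes 42
```

Submitting the same action a second time now throws, and an account without an access key cannot pass the check at all.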

## Constraints on the actions inside a meta transaction

A transaction may contain only a single delegate action. Nested delegate actions are disallowed, and so are multiple delegate actions next to each other in the same receipt.
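The two rules above can be expressed as a small validation function. This is a hypothetical sketch over an assumed plain-object shape `{ Delegate: { delegate_action: { actions } } }`, not the protocol's actual validation code.

```js
// Hypothetical check of the two rules above:
// at most one delegate action per transaction, and no nested delegate actions.
function validateDelegateActions(transactionActions) {
  const delegates = transactionActions.filter((action) => "Delegate" in action);
  if (delegates.length > 1) return "more than one delegate action";

  for (const { Delegate } of delegates) {
    const innerActions = Delegate.delegate_action.actions;
    if (innerActions.some((action) => "Delegate" in action)) return "nested delegate action";
  }
  return "ok";
}

const delegate = { Delegate: { delegate_action: { actions: [{ Transfer: { deposit: "1" } }] } } };
const nested = { Delegate: { delegate_action: { actions: [delegate] } } };

console.log(validateDelegateActions([delegate]));           // ok
console.log(validateDelegateActions([delegate, delegate])); // more than one delegate action
console.log(validateDelegateActions([nested]));             // nested delegate action
```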

## Gas costs for meta transactions

Meta transactions challenge the traditional ways of charging gas for actions. Let's assume Alice uses a relayer to execute actions with Bob as the receiver.

1. The relayer purchases the gas for all inner actions, plus the gas for the delegate action wrapping them.
2. The cost of sending the inner actions and the delegate action from the relayer to Alice's shard is burned immediately. The condition `relayer == Alice` determines which action `SEND` cost is taken (`sir` or `not_sir`, where `sir` means "sender is receiver"). Let's call this `SEND(1)`.
3. On Alice's shard, the delegate action is executed, so the `EXEC` gas cost for it is burned. Alice sends the inner actions to Bob's shard. Therefore, we burn the `SEND` fee again, this time based on `Alice == Bob` to figure out `sir` or `not_sir`. Let's call this `SEND(2)`.
4. On Bob's shard, we execute all inner actions and burn their `EXEC` cost.

Each of these steps should make sense and not be too surprising. But the consequence is that the implicit costs paid at the relayer's shard are `SEND(1)` + `SEND(2)` + `EXEC` for all inner actions plus `SEND(1)` + `EXEC` for the delegate action. This might be surprising, but hopefully this explanation makes it clear.
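This accounting can be sketched as a small function. It uses a made-up fee table (the gas numbers are illustrative only) and ignores per-byte fees and other cost components the real protocol charges; it only shows how `SEND(1)`, `SEND(2)` and `EXEC` add up for the delegate action and the inner actions.

```js
// Made-up fee table (gas units per action) for illustration only; real values
// live in the protocol's runtime fee configuration and include more components.
const FEES = {
  transfer: { send_sir: 100, send_not_sir: 110, execution: 100 },
  delegate: { send_sir: 200, send_not_sir: 200, execution: 200 },
};

// "sir" = sender is receiver: the send_sir fee applies when both are the same account.
function sendCost(fee, senderId, receiverId) {
  return senderId === receiverId ? fee.send_sir : fee.send_not_sir;
}

// Gas the relayer pays for a meta transaction, following the four steps above.
function metaTxGas({ relayer, alice, bob }, innerActionTypes, fees) {
  // Delegate action: SEND(1) (relayer -> Alice) + EXEC on Alice's shard.
  let total = sendCost(fees.delegate, relayer, alice) + fees.delegate.execution;

  for (const type of innerActionTypes) {
    const fee = fees[type];
    // Inner action: SEND(1) (relayer -> Alice) + SEND(2) (Alice -> Bob) + EXEC on Bob's shard.
    total += sendCost(fee, relayer, alice) + sendCost(fee, alice, bob) + fee.execution;
  }
  return total;
}

const accounts = { relayer: "relayer.near", alice: "alice.near", bob: "bob.near" };
console.log(metaTxGas(accounts, ["transfer"], FEES)); // 720 = (200 + 200) + (110 + 110 + 100)
```

The inner transfer is charged a `SEND` fee twice, once per hop, which is where the extra cost comes from.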

## Gas refunds in meta transactions

Gas refund receipts work exactly as they do for normal transactions. At every step, the difference between the pessimistic gas price and the actual gas price at that height is computed and refunded. At the end of the last step, all remaining gas is additionally refunded at the original purchasing price. The gas refunds go to the signer of the original transaction, in this case the relayer. This is only fair, since the relayer also paid for it.
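A simplified model of this refund arithmetic, with made-up numbers. It assumes, as the paragraph describes, that the relayer buys all gas up front at the pessimistic price; prices are in yoctoNEAR per gas unit and use `BigInt` to stay exact. The function name and input shape are hypothetical.

```js
// Simplified model of gas refunds to the relayer (hypothetical numbers and names).
function relayerGasRefund({ gasPurchased, pessimisticPrice, steps }) {
  let refund = 0n;
  let totalBurnt = 0n;
  for (const { gasBurnt, actualPrice } of steps) {
    // Per step: the overpayment on the gas actually burnt is returned.
    refund += (pessimisticPrice - actualPrice) * gasBurnt;
    totalBurnt += gasBurnt;
  }
  // After the last step: gas that was bought but never burnt is returned
  // at the price it was purchased at.
  refund += (gasPurchased - totalBurnt) * pessimisticPrice;
  return refund; // goes to the signer of the outer transaction, i.e. the relayer
}

const refund = relayerGasRefund({
  gasPurchased: 1000n,
  pessimisticPrice: 120n,
  steps: [
    { gasBurnt: 300n, actualPrice: 100n }, // first step
    { gasBurnt: 200n, actualPrice: 100n }, // second step
  ],
});
console.log(refund); // 70000n
```

The relayer's net cost is 1000 × 120 − 70000 = 50000, which is exactly the 500 burnt gas units at the actual price of 100.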

## Balance refunds in meta transactions

Unlike gas refunds, the protocol sends balance refunds to the predecessor (a.k.a. sender) of the receipt. This makes sense, as we deposit the attached balance to the receiver, who has to explicitly reattach a new balance to new receipts they might spawn.

In the world of meta transactions, this assumption is also challenged. If an inner action requires an attached balance (for example a transfer action), then this balance is taken from the relayer.

The relayer can see what the cost will be before submitting the meta transaction and agrees to pay for it, so nothing is wrong so far. But what if the transaction fails execution on Bob's shard? At this point, the predecessor is `Alice`, and therefore she receives the refunded token balance, not the relayer. Relayer implementations must be aware of this, since it gives Alice a financial incentive to submit meta transactions that have high balances attached but will fail on Bob's shard.
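One way a relayer might defend against this is to cap the balance it is willing to attach. The sketch below is a hypothetical policy, not a protocol rule or library function; the action shape (`Transfer` and `FunctionCall` objects carrying a `deposit` string in yoctoNEAR) is a simplified assumption.

```js
// Sum of all balances the relayer would have to attach for the inner actions (yoctoNEAR).
function totalAttachedDeposit(delegateAction) {
  let total = 0n;
  for (const action of delegateAction.actions) {
    if (action.Transfer) total += BigInt(action.Transfer.deposit);
    if (action.FunctionCall) total += BigInt(action.FunctionCall.deposit);
  }
  return total;
}

// Hypothetical policy: if an inner action fails, its deposit is refunded to
// Alice (the predecessor), not to the relayer, so refuse large deposits.
function acceptDeposit(delegateAction, maxDepositYocto) {
  return totalAttachedDeposit(delegateAction) <= BigInt(maxDepositYocto);
}

const ftOnly = {
  actions: [{ FunctionCall: { method_name: "ft_transfer", deposit: "1" } }],
};
const withTransfer = {
  actions: [...ftOnly.actions, { Transfer: { deposit: "1000000000000000000000000" } }], // 1 NEAR
};

console.log(acceptDeposit(ftOnly, "1"));       // true
console.log(acceptDeposit(withTransfer, "1")); // false
```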

## Function access keys in meta transactions

Function access keys can limit the allowance, the receiving contract, and the contract methods. The allowance limitation behaves slightly strangely with meta transactions.

But first, both the methods and the receiver will be checked as expected. That is, when the delegate action is unwrapped on Alice's shard, the access key is loaded from the DB and compared to the function call. If the receiver or method is not allowed, the function call action fails.

For allowance, however, there is no check. All costs have been covered by the relayer. Hence, even if the allowance of the key is insufficient to make the call directly, the call will still succeed when made indirectly through a meta transaction.

This behavior is consistent with the purpose of the allowance, which limits how much of a given account's financial resources the user can spend. But if someone were to limit a function access key to one trivial action by setting a very small allowance, that limit can be circumvented by going through a relayer. This is an interesting twist that comes with the addition of meta transactions.
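A simplified model of the checks described in this section. The permission object mirrors the three limits a function access key can carry (allowance, receiver, method names; an empty method list allows every method and a `null` allowance means unlimited), but the function itself, the `viaMetaTransaction` flag and the `costYocto` field are hypothetical. It only illustrates which limits apply on each path.

```js
// Hypothetical model of checking a function access key.
// permission: { allowance: bigint | null, receiver_id: string, method_names: string[] }
function checkFunctionCallKey(permission, call, { viaMetaTransaction }) {
  // Receiver and method limits are enforced on both paths.
  if (call.receiverId !== permission.receiver_id) return "receiver not allowed";
  const anyMethod = permission.method_names.length === 0; // empty list = all methods
  if (!anyMethod && !permission.method_names.includes(call.methodName)) return "method not allowed";

  // Only a direct transaction spends the key's allowance. Through a meta
  // transaction the relayer pays, so the allowance is never consulted.
  const unlimited = permission.allowance === null;
  if (!viaMetaTransaction && !unlimited && call.costYocto > permission.allowance) {
    return "allowance exceeded";
  }
  return "ok";
}

const permission = { allowance: 10n, receiver_id: "ft.near", method_names: ["ft_transfer"] };
const call = { receiverId: "ft.near", methodName: "ft_transfer", costYocto: 100n };

console.log(checkFunctionCallKey(permission, call, { viaMetaTransaction: false })); // allowance exceeded
console.log(checkFunctionCallKey(permission, call, { viaMetaTransaction: true }));  // ok
```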


Public Chains 2020: NEAR Protocol Sailing Toward the Open Web


February 19, 2020

“Into the Open Web”, China Community AMA.

Conducted by NEAR Protocol Co-Founder Illia Polosukhin and China Lead Amos Zhang.

1. Hi Illia! How did you enter the blockchain space and come up with the idea of NEAR Protocol?

@illia:

Alex and I had previously been working on an AI company, NEAR.ai. Though we were doing cutting-edge research in the field of program synthesis (automating software engineering), we were lacking real data and real users. As part of our work, we built a crowdsourcing platform that would employ engineers across the world to solve programming tasks, allowing us to train better models.

We faced multiple issues, ranging from paying people across the world to the fact that we couldn’t provide the platform with enough tasks ourselves. We started to look at how to turn this platform into a marketplace, and blockchain seemed like a perfect platform for this.

Alex has a background in building sharded databases at MemSQL, and I worked at Google Research on large distributed machine learning systems. We went down the rabbit hole of learning about blockchain, consensus and the surrounding technologies. As we were learning, we realized that there was no existing solution that we would be able to use, both from a technology standpoint and, even more importantly, from a usability standpoint.

We had a chat with some of our friends from MemSQL and Google on July 4th and realized that in that room we had great systems engineers who are all excited about the technology and also have experience building distributed systems.

Thus NEAR Protocol was born; we grew the team from the 3 people we had at NEAR.ai to 9 people within a week. Now we have 30+ people all over the world.

2. What is NEAR’s sharding solution, and how will NEAR differentiate itself from other sharding solutions?

@illia:

First and foremost NEAR is a developer platform. Meaning that we are focused on delivering the best experience for developers to build applications without limiting the types of experience they can build for their users.

This means that we really focused on tooling, APIs, common programming languages and making things really easy to develop. Second, we focused on allowing a common non-crypto user to easily start using applications built on NEAR – you don’t need to have tokens, wallets or prior knowledge of private/public keys to start using things.

Sharding and scalability are emerging as the outcome of this – blockchain should not be blocking developers or users from using applications. Hence there should not be limitations on the infrastructure layer.

Our sharding is designed to be hidden from the developer. For example, instead of shard chains, we shard blocks. This means that developers do not have to be concerned with which shard they are on, with other applications on that shard, or with gas prices across shards. Instead, developers can interact with the NEAR network just as they do with a single blockchain today. To achieve that, we have designed a novel sharding approach called Nightshade; you can read more about it at https://near.ai/nightshade or watch this video: https://www.youtube.com/watch?v=4CKvfYJTjxk.

Chinese version: https://blog.csdn.net/sun_dsk1/article/details/102763593

There is also a GitHub version: https://github.com/marco-sundsk/NEAR_DOC_zhcn/blob/master/whitepaper/nightshade_cn.md

Additionally, economics is extremely important for any chain, and in sharded or multi-chain setups it becomes even more crucial. We have made strides both to hide this complexity and to solve some of the burning needs of developers: https://near.ai/economics.

Chinese version: https://blog.csdn.net/sun_dsk1/article/details/102763595

3. What are the biggest usability challenges due to sharding, and how do you plan to address them?

@illia:

The biggest challenge for developers building on sharded blockchains, compared to blockchains like Ethereum, is that cross-contract calls become asynchronous. In Ethereum, when we send a transaction and something fails midway through its execution across many contracts, the system reverts all the changes.

This is highly unscalable in nature. And if we look at any distributed system used in Web2, we see that everything is operating asynchronously. You might have seen the DevCon commentary by James Prestwich about how this would hurt the experience and composability.

There are a few things we are doing to address this:

The Nightshade design makes cross-shard communication reliable and executes it in the next block produced by the network. Because of this, developers no longer need to care whether the contract they are calling is in the same shard or not. All cross-contract calls get executed in the same block, even if routing across shards has happened.

Because different contracts might have different usage, it also means different shards might get more or fewer transactions. Dynamic resharding is done every epoch to rebalance the contracts and accounts between shards, and sometimes even change the number of shards, to keep usage of each shard relatively even.

The economic design (https://near.ai/economics) aims to provide predictable fees for developers and users. One of the problems of the auction-based systems used by Bitcoin and Ethereum is that transaction prices can change dramatically within a short period of time. In NEAR, the price changes predictably from block to block depending on network usage, which allows developers and users to understand how much operations will cost. Additionally, the price is the same across all shards, removing the need for developers to manage that as well.