| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

tohoku-nlp/bert-base-japanese-whole-word-masking | tohoku-nlp | "2024-02-22T00:57:37Z" | 283,403 | 55 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"fill-mask",

"ja",

"dataset:wikipedia",

"license:cc-by-sa-4.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | fill-mask | "2022-03-02T23:29:05Z" | ---

language: ja

license: cc-by-sa-4.0

datasets:

- wikipedia

widget:

- text: 東北大学で[MASK]の研究をしています。

---

# BERT base Japanese (IPA dictionary, whole word masking enabled)

This is a [BERT](https://github.com/google-research/bert) model pretrained on texts in the Japanese language.

This version of the model processes input texts with word-level tokenization based on the IPA dictionary, followed by the WordPiece subword tokenization.

Additionally, the model is trained with whole word masking enabled for the masked language modeling (MLM) objective.

The code used for pretraining is available at [cl-tohoku/bert-japanese](https://github.com/cl-tohoku/bert-japanese/tree/v1.0).

## Model architecture

The model architecture is the same as the original BERT base model: 12 layers, 768-dimensional hidden states, and 12 attention heads.
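To give a sense of scale, the parameter count can be worked out by hand. The sketch below assumes the standard BERT-base layout, which this card does not spell out: a feed-forward size of 3072, 512 position embeddings, 2 token types, and a pooler layer. Only the vocabulary size (32000) comes from this card.

```python
def bert_param_count(vocab_size=32000, hidden=768, layers=12,
                     ffn=3072, max_positions=512, type_vocab=2):
    """Rough BERT-base parameter count under the standard layout (assumed)."""
    def dense(n_in, n_out):
        # weight matrix plus bias vector
        return n_in * n_out + n_out

    layer_norm = 2 * hidden  # gain and bias

    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    attention = 4 * dense(hidden, hidden) + layer_norm  # Q, K, V and output projection
    feed_forward = dense(hidden, ffn) + dense(ffn, hidden) + layer_norm
    pooler = dense(hidden, hidden)
    # The 12 attention heads split the same 768-dimensional projections,
    # so the head count does not change the total.
    return embeddings + layers * (attention + feed_forward) + pooler


total = bert_param_count()
print(f"{total:,} parameters")  # 110,617,344 parameters
```

Roughly a quarter of the parameters sit in the 32000 x 768 token embedding matrix, so the vocabulary size has a visible effect on model size.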

## Training Data

The model is trained on Japanese Wikipedia as of September 1, 2019.

To generate the training corpus, [WikiExtractor](https://github.com/attardi/wikiextractor) is used to extract plain texts from a dump file of Wikipedia articles.

The text files used for the training are 2.6GB in size, consisting of approximately 17M sentences.

## Tokenization

The texts are first tokenized by the [MeCab](https://taku910.github.io/mecab/) morphological parser with the IPA dictionary and then split into subwords by the WordPiece algorithm.

The vocabulary size is 32000.
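The second stage can be sketched with the greedy longest-match-first procedure used by the original BERT WordPiece tokenizer. This is a minimal illustration, not the cl-tohoku tokenizer itself: the toy vocabulary and the MeCab word split are hypothetical, and the real tokenizer may differ in details.

```python
def wordpiece(word, vocab, unk="[UNK]", prefix="##"):
    """Split one word into subwords by greedy longest-match-first lookup."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = prefix + candidate  # continuation pieces carry "##"
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # no piece matches: the whole word becomes [UNK]
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary; the real model uses 32000 entries.
vocab = {"東北", "##大学", "大", "##学", "研究"}
# Assume MeCab has already split the text into these words.
words = ["東北大学", "研究"]
print([wordpiece(w, vocab) for w in words])  # [['東北', '##大学'], ['研究']]
```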

## Training

The model is trained with the same configuration as the original BERT: 512 tokens per instance, 256 instances per batch, and 1M training steps.

For the masked language modeling (MLM) objective, we introduced **Whole Word Masking**, in which all of the subword tokens corresponding to a single word (as tokenized by MeCab) are masked at once.
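A minimal sketch of the idea: subwords are grouped by the MeCab word they came from, and a chosen word is masked as a unit. For simplicity it samples words rather than counting masked tokens, and it omits BERT's 80/10/10 mask/random/keep replacement. Both simplifications and the `whole_word_mask` helper are hypothetical, not the authors' pretraining code.

```python
import random


def whole_word_mask(words, tokenize, mask_prob=0.15, mask_token="[MASK]", rng=None):
    """Mask every subword of randomly chosen words together."""
    rng = rng or random.Random()
    groups = [tokenize(w) for w in words]  # subwords grouped by source word
    n_to_mask = max(1, round(len(groups) * mask_prob))
    chosen = set(rng.sample(range(len(groups)), n_to_mask))
    tokens, labels = [], []
    for i, pieces in enumerate(groups):
        for piece in pieces:
            if i in chosen:
                tokens.append(mask_token)
                labels.append(piece)  # the model must predict the original subword
            else:
                tokens.append(piece)
                labels.append(None)  # excluded from the MLM loss
    return tokens, labels


# Hypothetical pre-tokenized words (MeCab + WordPiece output).
pieces = {"東北大学": ["東北", "##大学"], "で": ["で"], "研究": ["研究"]}
tokens, labels = whole_word_mask(["東北大学", "で", "研究"], pieces.__getitem__,
                                 mask_prob=0.34, rng=random.Random(0))
print(tokens, labels)
```

With ordinary subword masking, `##大学` could be masked while `東北` stays visible, which makes the prediction much easier. Whole word masking rules that out.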

## Licenses

The pretrained models are distributed under the terms of the [Creative Commons Attribution-ShareAlike 3.0](https://creativecommons.org/licenses/by-sa/3.0/).

## Acknowledgments

For training the models, we used Cloud TPUs provided by the [TensorFlow Research Cloud](https://www.tensorflow.org/tfrc/) program.

|

T-Systems-onsite/cross-en-de-roberta-sentence-transformer | T-Systems-onsite | "2024-04-07T21:18:12Z" | 283,157 | 54 | transformers | [

"transformers",

"pytorch",

"tf",

"safetensors",

"xlm-roberta",

"feature-extraction",

"sentence_embedding",

"search",

"roberta",

"xlm-r-distilroberta-base-paraphrase-v1",

"paraphrase",

"de",

"en",

"multilingual",

"dataset:stsb_multi_mt",

"arxiv:1908.10084",

"license:mit",

"endpoints_compatible",

"text-embeddings-inference",

"region:us"

] | feature-extraction | "2022-03-02T23:29:05Z" | ---

language:

- de

- en

- multilingual

license: mit

tags:

- sentence_embedding

- search

- pytorch

- xlm-roberta

- roberta

- xlm-r-distilroberta-base-paraphrase-v1

- paraphrase

datasets:

- stsb_multi_mt

metrics:

- Spearman’s rank correlation

- cosine similarity

---

# Cross English & German RoBERTa for Sentence Embeddings

This model is intended to [compute sentence (text) embeddings](https://www.sbert.net/examples/applications/computing-embeddings/README.html) for English and German text. These embeddings can then be compared with [cosine similarity](https://en.wikipedia.org/wiki/Cosine_similarity) to find sentences with a similar meaning. This can be useful, for example, for [semantic textual similarity](https://www.sbert.net/docs/usage/semantic_textual_similarity.html), [semantic search](https://www.sbert.net/docs/usage/semantic_search.html), or [paraphrase mining](https://www.sbert.net/docs/usage/paraphrase_mining.html). To do this, you need the [Sentence Transformers Python framework](https://github.com/UKPLab/sentence-transformers).

The special feature of this model is that it also works cross-lingually: regardless of language, sentences are mapped to very similar vectors according to their meaning. This means that you can, for example, enter a search query in German and find semantically matching results in both German and English. Using an XLM model and _multilingual finetuning with language-crossing_, we reach performance that even exceeds the best current dedicated large English model (see the Evaluation section below).
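A cross-lingual search reduces to ranking corpus vectors by cosine similarity to the query vector. The sketch below uses hypothetical 3-dimensional toy vectors in place of real embeddings. With the actual model, the vectors would come from `model.encode(...)`. The `search` helper is illustrative, not part of the Sentence Transformers API.

```python
import numpy as np


def cosine_similarity(a, b):
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def search(query_vec, corpus_vecs, top_k=2):
    """Return (index, score) pairs for the corpus entries closest to the query."""
    scores = [cosine_similarity(query_vec, v) for v in corpus_vecs]
    order = np.argsort(scores)[::-1][:top_k]
    return [(int(i), scores[i]) for i in order]


# Hypothetical toy embeddings; a German query should match both languages.
query_de = [0.9, 0.1, 0.0]  # stands in for "Wie wird das Wetter morgen?"
corpus = {
    "Will it rain tomorrow?": [0.85, 0.15, 0.05],
    "Das Wetter morgen wird sonnig.": [0.8, 0.2, 0.1],
    "I like pizza.": [0.0, 0.1, 0.95],
}
texts = list(corpus)
for idx, score in search(query_de, list(corpus.values())):
    print(f"{score:.3f}  {texts[idx]}")
```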

> Sentence-BERT (SBERT) is a modification of the pretrained BERT network that use siamese and triplet network structures to derive semantically meaningful sentence embeddings that can be compared using cosine-similarity. This reduces the effort for finding the most similar pair from 65 hours with BERT / RoBERTa to about 5 seconds with SBERT, while maintaining the accuracy from BERT.

Source: [Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks](https://arxiv.org/abs/1908.10084)

This model was fine-tuned by [Philip May](https://may.la/) and open-sourced by [T-Systems-onsite](https://www.t-systems-onsite.de/). Special thanks to [Nils Reimers](https://www.nils-reimers.de/) for his awesome open-source work, the Sentence Transformers, the models, and his help on GitHub.

## How to use

To use this model, install the `sentence-transformers` package (see <https://github.com/UKPLab/sentence-transformers>).

```python

from sentence_transformers import SentenceTransformer

model = SentenceTransformer('T-Systems-onsite/cross-en-de-roberta-sentence-transformer')

```

For usage details and examples, see:

- [Computing Sentence Embeddings](https://www.sbert.net/docs/usage/computing_sentence_embeddings.html)

- [Semantic Textual Similarity](https://www.sbert.net/docs/usage/semantic_textual_similarity.html)

- [Paraphrase Mining](https://www.sbert.net/docs/usage/paraphrase_mining.html)

- [Semantic Search](https://www.sbert.net/docs/usage/semantic_search.html)

- [Cross-Encoders](https://www.sbert.net/docs/usage/cross-encoder.html)

- [Examples on GitHub](https://github.com/UKPLab/sentence-transformers/tree/master/examples)

## Training

The base model is [xlm-roberta-base](https://huggingface.co/xlm-roberta-base). This model has been further trained by [Nils Reimers](https://www.nils-reimers.de/) on a large-scale paraphrase dataset covering 50+ languages. [Nils Reimers](https://www.nils-reimers.de/) wrote about this [on GitHub](https://github.com/UKPLab/sentence-transformers/issues/509#issuecomment-712243280):

>A paper is upcoming for the paraphrase models.

>

>These models were trained on various datasets with Millions of examples for paraphrases, mainly derived from Wikipedia edit logs, paraphrases mined from Wikipedia and SimpleWiki, paraphrases from news reports, AllNLI-entailment pairs with in-batch-negative loss etc.

>

>In internal tests, they perform much better than the NLI+STSb models as they have see more and broader type of training data. NLI+STSb has the issue that they are rather narrow in their domain and do not contain any domain specific words / sentences (like from chemistry, computer science, math etc.). The paraphrase models has seen plenty of sentences from various domains.

>

>More details with the setup, all the datasets, and a wider evaluation will follow soon.

The resulting model, called `xlm-r-distilroberta-base-paraphrase-v1`, has been released here: <https://github.com/UKPLab/sentence-transformers/releases/tag/v0.3.8>

Building on this cross-language model, we fine-tuned it for English and German on the [STSbenchmark](http://ixa2.si.ehu.es/stswiki/index.php/STSbenchmark) dataset. For German, we used our [German STSbenchmark dataset](https://github.com/t-systems-on-site-services-gmbh/german-STSbenchmark), which was translated with [deepl.com](https://www.deepl.com/translator). In addition to the German and English training samples, we generated samples that cross English and German. We call this _multilingual finetuning with language-crossing_. It doubled the size of the training data, and tests show that it further improves performance.
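The card does not spell out how the crossed samples are built. One natural reading, assumed here, is to pair the first sentence in one language with the second sentence in the other and to reuse the gold score, since the translation should preserve meaning. Starting from the EN and DE samples, adding both crossed directions doubles the data, which matches the description above. The helper and the example pair are hypothetical.

```python
def language_crossing(en_pairs, de_pairs):
    """Build EN, DE and crossed EN-DE / DE-EN samples (assumed construction).

    en_pairs and de_pairs are aligned lists of (sentence_a, sentence_b, score),
    where de_pairs[i] is the German translation of en_pairs[i].
    """
    samples = []
    for (en_a, en_b, score), (de_a, de_b, _) in zip(en_pairs, de_pairs):
        samples.append((en_a, en_b, score))  # English
        samples.append((de_a, de_b, score))  # German
        samples.append((en_a, de_b, score))  # crossed EN-DE
        samples.append((de_a, en_b, score))  # crossed DE-EN
    return samples


# Hypothetical STSbenchmark-style pair and its translation.
en = [("A man is playing a guitar.", "A person plays the guitar.", 4.5)]
de = [("Ein Mann spielt Gitarre.", "Eine Person spielt Gitarre.", 4.5)]
for sample in language_crossing(en, de):
    print(sample)
```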

We ran an automatic hyperparameter search of 33 trials with [Optuna](https://github.com/optuna/optuna). Using 10-fold cross-validation on the deepl.com test and dev datasets, we found the following best hyperparameters:

- batch_size = 8

- num_epochs = 2

- lr = 1.026343323298136e-05

- eps = 4.462251033010287e-06

- weight_decay = 0.04794438776350409

- warmup_steps_proportion = 0.1609010732760181
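`warmup_steps_proportion` is presumably the fraction of all optimizer steps used for learning-rate warmup. Training APIs such as the Sentence Transformers `fit()` method take an absolute `warmup_steps` count instead, so the proportion has to be converted. A minimal sketch, assuming one optimizer step per batch. The training-set size below is hypothetical.

```python
import math


def warmup_steps(num_train_samples, batch_size=8, num_epochs=2,
                 warmup_proportion=0.1609010732760181):
    """Convert a warmup proportion into (warmup_steps, total_steps)."""
    steps_per_epoch = math.ceil(num_train_samples / batch_size)
    total_steps = steps_per_epoch * num_epochs
    return int(total_steps * warmup_proportion), total_steps


print(warmup_steps(1000))  # (40, 250) for a hypothetical 1000 training pairs
```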

The final model was trained with these hyperparameters on the combination of the English and German train and dev datasets and their crossings. The test set was held out for evaluation.

## Evaluation

The evaluation was done on the STSbenchmark test data in English, in German, and with both languages crossed. The evaluation code is available on [Colab](https://colab.research.google.com/drive/1gtGnKq_dYU_sDYqMohTYVMVpxMJjyH0M?usp=sharing). As the evaluation metric, we use Spearman’s rank correlation between the cosine similarity of the sentence embeddings and the STSbenchmark labels.

| Model Name | Spearman<br/>German | Spearman<br/>English | Spearman<br/>EN-DE & DE-EN<br/>(cross) |

|---------------------------------------------------------------|-------------------|--------------------|------------------|

| xlm-r-distilroberta-base-paraphrase-v1 | 0.8079 | 0.8350 | 0.7983 |

| [xlm-r-100langs-bert-base-nli-stsb-mean-tokens](https://huggingface.co/sentence-transformers/xlm-r-100langs-bert-base-nli-stsb-mean-tokens) | 0.7877 | 0.8465 | 0.7908 |

| xlm-r-bert-base-nli-stsb-mean-tokens | 0.7877 | 0.8465 | 0.7908 |

| [roberta-large-nli-stsb-mean-tokens](https://huggingface.co/sentence-transformers/roberta-large-nli-stsb-mean-tokens) | 0.6371 | 0.8639 | 0.4109 |

| [T-Systems-onsite/<br/>german-roberta-sentence-transformer-v2](https://huggingface.co/T-Systems-onsite/german-roberta-sentence-transformer-v2) | 0.8529 | 0.8634 | 0.8415 |

| [paraphrase-multilingual-mpnet-base-v2](https://huggingface.co/sentence-transformers/paraphrase-multilingual-mpnet-base-v2) | 0.8355 | **0.8682** | 0.8309 |

| **T-Systems-onsite/<br/>cross-en-de-roberta-sentence-transformer** | **0.8550** | 0.8660 | **0.8525** |
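The metric in the table is Spearman's rank correlation: the Pearson correlation of the two rank vectors, with tied values sharing their average rank. A self-contained NumPy sketch is below. The labels and similarities are hypothetical, and `scipy.stats.spearmanr` should give the same value.

```python
import numpy as np


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    x = np.asarray(values, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(a, b):
    """Spearman's rho as the Pearson correlation of the rank vectors."""
    return float(np.corrcoef(average_ranks(a), average_ranks(b))[0, 1])


gold = [4.8, 0.5, 3.2, 2.0]        # hypothetical STSbenchmark labels (0 to 5)
cosine = [0.91, 0.12, 0.55, 0.60]  # hypothetical cosine similarities
print(round(spearman(cosine, gold), 3))  # 0.8
```

Only the ordering matters. The cosine scores do not need to be on the same 0 to 5 scale as the labels.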

## License

Copyright (c) 2020 [Philip May](https://philipmay.org), T-Systems on site services GmbH

Licensed under the MIT License (the "License"); you may not use this work except in compliance with the License. You may obtain a copy of the License by reviewing the file [LICENSE](https://huggingface.co/T-Systems-onsite/cross-en-de-roberta-sentence-transformer/blob/main/LICENSE) in the repository.

|

latent-consistency/lcm-lora-sdv1-5 | latent-consistency | "2023-11-16T16:01:30Z" | 282,334 | 433 | diffusers | [

"diffusers",

"lora",

"text-to-image",

"arxiv:2311.05556",

"base_model:runwayml/stable-diffusion-v1-5",

"license:openrail++",

"region:us"

] | text-to-image | "2023-11-07T11:20:24Z" | ---

library_name: diffusers

base_model: runwayml/stable-diffusion-v1-5

tags:

- lora

- text-to-image

license: openrail++

inference: false

---

# Latent Consistency Model (LCM) LoRA: SDv1-5

Latent Consistency Model (LCM) LoRA was proposed in [LCM-LoRA: A universal Stable-Diffusion Acceleration Module](https://arxiv.org/abs/2311.05556) by *Simian Luo, Yiqin Tan, Suraj Patil, Daniel Gu et al.*

It is a distilled consistency adapter for [`runwayml/stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) that reduces the number of inference steps to only **2 to 8 steps**.

| Model | Params / M |

|----------------------------------------------------------------------------|------------|

| [**lcm-lora-sdv1-5**](https://huggingface.co/latent-consistency/lcm-lora-sdv1-5) | **67.5** |

| [lcm-lora-ssd-1b](https://huggingface.co/latent-consistency/lcm-lora-ssd-1b) | 105 |

| [lcm-lora-sdxl](https://huggingface.co/latent-consistency/lcm-lora-sdxl) | 197 |

## Usage

LCM-LoRA is supported in the 🤗 Hugging Face Diffusers library from version v0.23.0 onwards. To run the model, first install the latest version of the Diffusers library as well as `peft`, `accelerate` and `transformers`:

```bash

pip install --upgrade pip

pip install --upgrade diffusers transformers accelerate peft

```

***Note: For detailed usage examples, we recommend checking out our official [LCM-LoRA docs](https://huggingface.co/docs/diffusers/main/en/using-diffusers/inference_with_lcm_lora)***

### Text-to-Image

The adapter can be loaded with SDv1-5 or its derivatives. Here we use [`Lykon/dreamshaper-7`](https://huggingface.co/Lykon/dreamshaper-7). Next, the scheduler needs to be changed to [`LCMScheduler`](https://huggingface.co/docs/diffusers/v0.22.3/en/api/schedulers/lcm#diffusers.LCMScheduler), and we can reduce the number of inference steps to just 2 to 8.

Please make sure to either disable `guidance_scale` or use values between 1.0 and 2.0.
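The reason for the 1.0 to 2.0 range is clearer once the standard classifier-free guidance update is written out. The NumPy sketch below is a generic illustration, not Diffusers code. Random arrays stand in for the UNet's unconditional and text-conditioned noise predictions, and their shapes are hypothetical. At a scale of 1.0, the guided prediction equals the conditional one, and larger scales extrapolate further away from the unconditional prediction. As far as we know, Diffusers' Stable Diffusion pipelines skip the unconditional pass entirely when `guidance_scale` is 1 or lower. That is why passing 0 disables guidance rather than producing an unconditional image.

```python
import numpy as np


def classifier_free_guidance(noise_uncond, noise_cond, guidance_scale):
    # Move from the unconditional estimate toward (and past) the conditional one.
    return noise_uncond + guidance_scale * (noise_cond - noise_uncond)


rng = np.random.default_rng(0)
uncond, cond = rng.standard_normal((2, 4, 64, 64))  # hypothetical latent-shaped predictions

for scale in (1.0, 1.5, 2.0):
    guided = classifier_free_guidance(uncond, cond, scale)
    print(scale, float(np.abs(guided - cond).mean()))  # distance from the conditional prediction
```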

```python

import torch

from diffusers import LCMScheduler, AutoPipelineForText2Image

model_id = "Lykon/dreamshaper-7"

adapter_id = "latent-consistency/lcm-lora-sdv1-5"

pipe = AutoPipelineForText2Image.from_pretrained(model_id, torch_dtype=torch.float16, variant="fp16")

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

pipe.to("cuda")

# load and fuse lcm lora

pipe.load_lora_weights(adapter_id)

pipe.fuse_lora()

prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"

# disable guidance_scale by passing 0

image = pipe(prompt=prompt, num_inference_steps=4, guidance_scale=0).images[0]

```
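Conceptually, `fuse_lora()` folds the low-rank adapter update into the base weights, so later calls need no extra matrix multiplications. The NumPy sketch below shows that algebra for a single linear layer. The layer size, rank and scale are hypothetical, and this is not how Diffusers implements it internally. The same formula, `rank * (d_in + d_out)` extra weights per adapted matrix, is what makes the adapters in the table above small compared to the base model.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank = 320, 320, 4  # hypothetical layer size and LoRA rank
scale = 1.0                      # LoRA scaling factor, assumed to be 1 here

W = rng.standard_normal((d_out, d_in))          # frozen base weight
A = rng.standard_normal((rank, d_in)) * 0.01    # LoRA down-projection
B = rng.standard_normal((d_out, rank)) * 0.01   # LoRA up-projection
x = rng.standard_normal(d_in)

# Unfused: base path plus a separate low-rank path on every call.
y_unfused = W @ x + scale * (B @ (A @ x))
# Fused: merge the low-rank update into the weight once.
W_fused = W + scale * (B @ A)
y_fused = W_fused @ x

print(np.allclose(y_unfused, y_fused))  # True
print("extra LoRA parameters for this layer:", rank * (d_in + d_out))  # 2560
```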

### Image-to-Image

LCM-LoRA can be applied to image-to-image tasks too. Let's look at how we can perform image-to-image generation with LCMs. For this example we'll use the [dreamshaper-7](https://huggingface.co/Lykon/dreamshaper-7) model and the LCM-LoRA for `stable-diffusion-v1-5`.

```python

import torch

from diffusers import AutoPipelineForImage2Image, LCMScheduler

from diffusers.utils import make_image_grid, load_image

pipe = AutoPipelineForImage2Image.from_pretrained(

"Lykon/dreamshaper-7",

torch_dtype=torch.float16,

variant="fp16",

).to("cuda")

# set scheduler

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

# load LCM-LoRA

pipe.load_lora_weights("latent-consistency/lcm-lora-sdv1-5")

pipe.fuse_lora()

# prepare image

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/img2img-init.png"

init_image = load_image(url)

prompt = "Astronauts in a jungle, cold color palette, muted colors, detailed, 8k"

# pass prompt and image to pipeline

generator = torch.manual_seed(0)

image = pipe(

prompt,

image=init_image,

num_inference_steps=4,

guidance_scale=1,

strength=0.6,

generator=generator

).images[0]

make_image_grid([init_image, image], rows=1, cols=2)

```

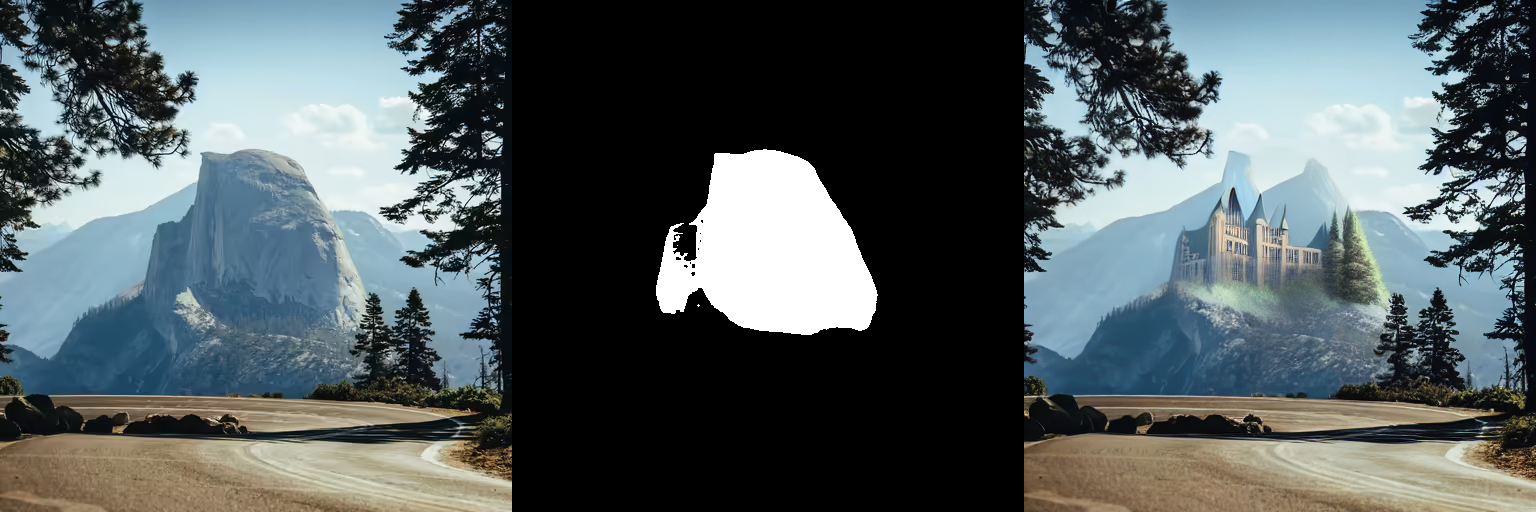

### Inpainting

LCM-LoRA can be used for inpainting as well.

```python

import torch

from diffusers import AutoPipelineForInpainting, LCMScheduler

from diffusers.utils import load_image, make_image_grid

pipe = AutoPipelineForInpainting.from_pretrained(

"runwayml/stable-diffusion-inpainting",

torch_dtype=torch.float16,

variant="fp16",

).to("cuda")

# set scheduler

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

# load LCM-LoRA

pipe.load_lora_weights("latent-consistency/lcm-lora-sdv1-5")

pipe.fuse_lora()

# load base and mask image

init_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint.png")

mask_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint_mask.png")


prompt = "concept art digital painting of an elven castle, inspired by lord of the rings, highly detailed, 8k"

generator = torch.manual_seed(0)

image = pipe(

prompt=prompt,

image=init_image,

mask_image=mask_image,

generator=generator,

num_inference_steps=4,

guidance_scale=4,

).images[0]

make_image_grid([init_image, mask_image, image], rows=1, cols=3)

```

### ControlNet

For this example, we'll use the SD-v1-5 model and the LCM-LoRA for SD-v1-5 with canny ControlNet.

```python

import torch

import cv2

import numpy as np

from PIL import Image

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, LCMScheduler

from diffusers.utils import load_image, make_image_grid

image = load_image(

"https://hf.co/datasets/huggingface/documentation-images/resolve/main/diffusers/input_image_vermeer.png"

).resize((512, 512))

image = np.array(image)

low_threshold = 100

high_threshold = 200

image = cv2.Canny(image, low_threshold, high_threshold)

image = image[:, :, None]

image = np.concatenate([image, image, image], axis=2)

canny_image = Image.fromarray(image)

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5",

controlnet=controlnet,

torch_dtype=torch.float16,

safety_checker=None,

variant="fp16"

).to("cuda")

# set scheduler

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

# load LCM-LoRA

pipe.load_lora_weights("latent-consistency/lcm-lora-sdv1-5")

generator = torch.manual_seed(0)

image = pipe(

"the mona lisa",

image=canny_image,

num_inference_steps=4,

guidance_scale=1.5,

controlnet_conditioning_scale=0.8,

cross_attention_kwargs={"scale": 1},

generator=generator,

).images[0]

make_image_grid([canny_image, image], rows=1, cols=2)

```

## Speed Benchmark

TODO

## Training

TODO |

prithivida/parrot_adequacy_model | prithivida | "2022-05-27T02:47:22Z" | 279,313 | 7 | transformers | [

"transformers",

"pytorch",

"roberta",

"text-classification",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | "2022-05-27T02:04:37Z" | ---

license: apache-2.0

---

# Parrot

**This is an ancillary model for the Parrot paraphraser.**

## 1. What is Parrot?

Parrot is a paraphrase-based utterance augmentation framework purpose-built to accelerate the training of NLU models. A paraphrase framework is more than just a paraphrasing model. For details, please refer to the GitHub page or the model card [prithivida/parrot_paraphraser_on_T5](https://huggingface.co/prithivida/parrot_paraphraser_on_T5). |

sentence-transformers/LaBSE | sentence-transformers | "2024-06-03T09:38:00Z" | 278,638 | 185 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"tf",

"jax",

"bert",

"feature-extraction",

"sentence-similarity",

"multilingual",

"af",

"sq",

"am",

"ar",

"hy",

"as",

"az",

"eu",

"be",

"bn",

"bs",

"bg",

"my",

"ca",

"ceb",

"zh",

"co",

"hr",

"cs",

"da",

"nl",

"en",

"eo",

"et",

"fi",

"fr",

"fy",

"gl",

"ka",

"de",

"el",

"gu",

"ht",

"ha",

"haw",

"he",

"hi",

"hmn",

"hu",

"is",

"ig",

"id",

"ga",

"it",

"ja",

"jv",

"kn",

"kk",

"km",

"rw",

"ko",

"ku",

"ky",

"lo",

"la",

"lv",

"lt",

"lb",

"mk",

"mg",

"ms",

"ml",

"mt",

"mi",

"mr",

"mn",

"ne",

"no",

"ny",

"or",

"fa",

"pl",

"pt",

"pa",

"ro",

"ru",

"sm",

"gd",

"sr",

"st",

"sn",

"si",

"sk",

"sl",

"so",

"es",

"su",

"sw",

"sv",

"tl",

"tg",

"ta",

"tt",

"te",

"th",

"bo",

"tr",

"tk",

"ug",

"uk",

"ur",

"uz",

"vi",

"cy",

"wo",

"xh",

"yi",

"yo",

"zu",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | sentence-similarity | "2022-03-02T23:29:05Z" | ---

language:

- multilingual

- af

- sq

- am

- ar

- hy

- as

- az

- eu

- be

- bn

- bs

- bg

- my

- ca

- ceb

- zh

- co

- hr

- cs

- da

- nl

- en

- eo

- et

- fi

- fr

- fy

- gl

- ka

- de

- el

- gu

- ht

- ha

- haw

- he

- hi

- hmn

- hu

- is

- ig

- id

- ga

- it

- ja

- jv

- kn

- kk

- km

- rw

- ko

- ku

- ky

- lo

- la

- lv

- lt

- lb

- mk

- mg

- ms

- ml

- mt

- mi

- mr

- mn

- ne

- no

- ny

- or

- fa

- pl

- pt

- pa

- ro

- ru

- sm

- gd

- sr

- st

- sn

- si

- sk

- sl

- so

- es

- su

- sw

- sv

- tl

- tg

- ta

- tt

- te

- th

- bo

- tr

- tk

- ug

- uk

- ur

- uz

- vi

- cy

- wo

- xh

- yi

- yo

- zu

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

library_name: sentence-transformers

license: apache-2.0

---

# LaBSE

This is a port of the [LaBSE](https://tfhub.dev/google/LaBSE/1) model to PyTorch. It can be used to map 109 languages to a shared vector space.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/LaBSE')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/LaBSE)

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 256, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

(2): Dense({'in_features': 768, 'out_features': 768, 'bias': True, 'activation_function': 'torch.nn.modules.activation.Tanh'})

(3): Normalize()

)

```
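Modules (1) to (3) above turn the BERT token outputs into one sentence vector. The NumPy sketch below follows that printout: take the CLS token, apply a 768 to 768 dense layer with tanh, then L2-normalize. The random inputs and weights are hypothetical stand-ins for the real `BertModel` output and trained Dense weights.

```python
import numpy as np


def labse_style_head(token_embeddings, dense_weight, dense_bias):
    """CLS pooling -> Dense(768 -> 768, tanh) -> L2 normalization."""
    cls = token_embeddings[0]                             # (1) Pooling: CLS token only
    projected = np.tanh(dense_weight @ cls + dense_bias)  # (2) Dense with Tanh activation
    return projected / np.linalg.norm(projected)          # (3) Normalize to unit length


rng = np.random.default_rng(0)
seq_len, hidden = 10, 768
token_embeddings = rng.standard_normal((seq_len, hidden))  # stand-in for BertModel output
W = rng.standard_normal((hidden, hidden)) / np.sqrt(hidden)
b = np.zeros(hidden)

emb = labse_style_head(token_embeddings, W, b)
print(emb.shape, float(np.linalg.norm(emb)))
```

Because of the final `Normalize()` step, every embedding has unit length, so the dot product of two LaBSE embeddings already equals their cosine similarity.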

## Citing & Authors

Have a look at [LaBSE](https://tfhub.dev/google/LaBSE/1) for the respective publication that describes LaBSE.

|

snrspeaks/KeyPhraseTransformer | snrspeaks | "2022-03-25T13:05:44Z" | 276,406 | 8 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text2text-generation | "2022-03-25T12:23:25Z" | ---

license: mit

---

|

amazon/chronos-t5-small | amazon | "2024-05-13T21:08:16Z" | 275,117 | 16 | transformers | [

"transformers",

"safetensors",

"t5",

"text2text-generation",

"time series",

"forecasting",

"pretrained models",

"foundation models",

"time series foundation models",

"time-series",

"time-series-forecasting",

"arxiv:2403.07815",

"arxiv:1910.10683",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | time-series-forecasting | "2024-02-21T10:06:21Z" | ---

license: apache-2.0

pipeline_tag: time-series-forecasting

tags:

- time series

- forecasting

- pretrained models

- foundation models

- time series foundation models

- time-series

---

# Chronos-T5 (Small)

Chronos is a family of **pretrained time series forecasting models** based on language model architectures. A time series is transformed into a sequence of tokens via scaling and quantization, and a language model is trained on these tokens using the cross-entropy loss. Once trained, probabilistic forecasts are obtained by sampling multiple future trajectories given the historical context. Chronos models have been trained on a large corpus of publicly available time series data, as well as synthetic data generated using Gaussian processes.

For details on Chronos models, training data and procedures, and experimental results, please refer to the paper [Chronos: Learning the Language of Time Series](https://arxiv.org/abs/2403.07815).
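The scaling-and-quantization step can be sketched in a few lines. This sketch assumes mean-absolute scaling and 4094 uniformly spaced bin centers on [-15, 15], which leaves 2 of the 4096 vocabulary entries free for special tokens. These specific values are assumptions for illustration. The companion repository's tokenizer is the authoritative version.

```python
import numpy as np


def mean_scale(context):
    """Divide by the mean absolute value of the context (1 for all-zero input)."""
    scale = float(np.mean(np.abs(context)))
    if scale == 0.0:
        scale = 1.0
    return context / scale, scale


def make_bins(n_bins=4094, low=-15.0, high=15.0):
    """Uniform bin centers plus the midpoints between them (the bin edges)."""
    centers = np.linspace(low, high, n_bins)
    edges = (centers[1:] + centers[:-1]) / 2
    return centers, edges


def tokenize(context, centers, edges):
    scaled, scale = mean_scale(np.asarray(context, dtype=float))
    # With midpoint edges, np.digitize returns the index of the nearest center.
    tokens = np.digitize(scaled, edges)
    return tokens, scale


def detokenize(tokens, scale, centers):
    # Map token ids back to bin centers and undo the scaling.
    return centers[tokens] * scale


centers, edges = make_bins()
history = [112.0, 118.0, 132.0, 129.0, 121.0]  # hypothetical monthly values
tokens, scale = tokenize(history, centers, edges)
print(tokens, detokenize(tokens, scale, centers).round(2))
```

The round trip is lossy only up to half a bin width times the scale. This is what lets a fixed-vocabulary language model work on series of very different magnitudes.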

<p align="center">

<img src="figures/main-figure.png" width="100%">

<br />

<span>

Fig. 1: High-level depiction of Chronos. (<b>Left</b>) The input time series is scaled and quantized to obtain a sequence of tokens. (<b>Center</b>) The tokens are fed into a language model which may either be an encoder-decoder or a decoder-only model. The model is trained using the cross-entropy loss. (<b>Right</b>) During inference, we autoregressively sample tokens from the model and map them back to numerical values. Multiple trajectories are sampled to obtain a predictive distribution.

</span>

</p>

---

## Architecture

The models in this repository are based on the [T5 architecture](https://arxiv.org/abs/1910.10683). The only difference is in the vocabulary size: Chronos-T5 models use 4096 different tokens, compared to 32128 in the original T5 models, resulting in fewer parameters.

| Model | Parameters | Based on |

| ---------------------------------------------------------------------- | ---------- | ---------------------------------------------------------------------- |

| [**chronos-t5-tiny**](https://huggingface.co/amazon/chronos-t5-tiny) | 8M | [t5-efficient-tiny](https://huggingface.co/google/t5-efficient-tiny) |

| [**chronos-t5-mini**](https://huggingface.co/amazon/chronos-t5-mini) | 20M | [t5-efficient-mini](https://huggingface.co/google/t5-efficient-mini) |

| [**chronos-t5-small**](https://huggingface.co/amazon/chronos-t5-small) | 46M | [t5-efficient-small](https://huggingface.co/google/t5-efficient-small) |

| [**chronos-t5-base**](https://huggingface.co/amazon/chronos-t5-base) | 200M | [t5-efficient-base](https://huggingface.co/google/t5-efficient-base) |

| [**chronos-t5-large**](https://huggingface.co/amazon/chronos-t5-large) | 710M | [t5-efficient-large](https://huggingface.co/google/t5-efficient-large) |

## Usage

To perform inference with Chronos models, install the package in the GitHub [companion repo](https://github.com/amazon-science/chronos-forecasting) by running:

```

pip install git+https://github.com/amazon-science/chronos-forecasting.git

```

A minimal example showing how to perform inference using Chronos models:

```python

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import torch

from chronos import ChronosPipeline

pipeline = ChronosPipeline.from_pretrained(

"amazon/chronos-t5-small",

device_map="cuda",

torch_dtype=torch.bfloat16,

)

df = pd.read_csv("https://raw.githubusercontent.com/AileenNielsen/TimeSeriesAnalysisWithPython/master/data/AirPassengers.csv")

# context must be either a 1D tensor, a list of 1D tensors,

# or a left-padded 2D tensor with batch as the first dimension

context = torch.tensor(df["#Passengers"])

prediction_length = 12

forecast = pipeline.predict(context, prediction_length) # shape [num_series, num_samples, prediction_length]

# visualize the forecast

forecast_index = range(len(df), len(df) + prediction_length)

low, median, high = np.quantile(forecast[0].numpy(), [0.1, 0.5, 0.9], axis=0)

plt.figure(figsize=(8, 4))

plt.plot(df["#Passengers"], color="royalblue", label="historical data")

plt.plot(forecast_index, median, color="tomato", label="median forecast")

plt.fill_between(forecast_index, low, high, color="tomato", alpha=0.3, label="80% prediction interval")

plt.legend()

plt.grid()

plt.show()

```

## Citation

If you find Chronos models useful for your research, please consider citing the associated [paper](https://arxiv.org/abs/2403.07815):

```

@article{ansari2024chronos,

author = {Ansari, Abdul Fatir and Stella, Lorenzo and Turkmen, Caner and Zhang, Xiyuan and Mercado, Pedro and Shen, Huibin and Shchur, Oleksandr and Rangapuram, Syama Sundar and Pineda Arango, Sebastian and Kapoor, Shubham and Zschiegner, Jasper and Maddix, Danielle C. and Mahoney, Michael W. and Torkkola, Kari and Gordon Wilson, Andrew and Bohlke-Schneider, Michael and Wang, Yuyang},

title = {Chronos: Learning the Language of Time Series},

journal = {arXiv preprint arXiv:2403.07815},

year = {2024}

}

```

## Security

See [CONTRIBUTING](CONTRIBUTING.md#security-issue-notifications) for more information.

## License

This project is licensed under the Apache-2.0 License.

|

SanctumAI/Meta-Llama-3-8B-Instruct-GGUF | SanctumAI | "2024-05-29T00:29:38Z" | 273,062 | 24 | transformers | [

"transformers",

"gguf",

"llama",

"facebook",

"meta",

"pytorch",

"llama-3",

"text-generation",

"en",

"license:other",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | "2024-04-22T15:21:47Z" | ---

language:

- en

pipeline_tag: text-generation

tags:

- facebook

- meta

- pytorch

- llama

- llama-3

license: other

license_name: llama3

license_link: LICENSE

---

*This model was quantized by [SanctumAI](https://sanctum.ai). To leave feedback, join our community in [Discord](https://discord.gg/7ZNE78HJKh).*

# Meta Llama 3 8B Instruct GGUF

**Model creator:** [meta-llama](https://huggingface.co/meta-llama)<br>

**Original model**: [Meta-Llama-3-8B-Instruct](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct)<br>

## Model Summary:

Meta developed and released the Meta Llama 3 family of large language models (LLMs), a collection of pretrained and instruction-tuned generative text models in 8B and 70B sizes. The Llama 3 instruction-tuned models are optimized for dialogue use cases and outperform many of the available open-source chat models on common industry benchmarks. Further, in developing these models, Meta took great care to optimize helpfulness and safety.

## Prompt Template:

If you're using the Sanctum app, simply use the `Llama 3` model preset.

Prompt template:

```

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

{system_prompt}<|eot_id|><|start_header_id|>user<|end_header_id|>

{prompt}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

```
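Outside the Sanctum app, the template has to be filled in by hand before the text is passed to a GGUF runtime. A minimal sketch is below. It assumes two newlines after each `<|end_header_id|>`, as in Meta's reference chat format, which the template above renders as line breaks. The helper name is hypothetical. Generation would then continue after the assistant header until the model emits `<|eot_id|>`.

```python
def llama3_prompt(system_prompt: str, prompt: str) -> str:
    """Fill the Llama 3 chat template for one system message and one user turn."""
    return (
        "<|begin_of_text|>"
        "<|start_header_id|>system<|end_header_id|>\n\n"
        f"{system_prompt}<|eot_id|>"
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{prompt}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


text = llama3_prompt("You are a helpful assistant.", "What is GGUF?")
print(text)
```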

## Hardware Requirements Estimate

| Name | Quant method | Size | Memory (RAM, vRAM) required |
| ---- | ---- | ---- | ---- |
| [meta-llama-3-8b-instruct.Q2_K.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q2_K.gguf) | Q2_K | 3.18 GB | 7.20 GB |
| [meta-llama-3-8b-instruct.Q3_K_S.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q3_K_S.gguf) | Q3_K_S | 3.67 GB | 7.65 GB |
| [meta-llama-3-8b-instruct.Q3_K_M.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q3_K_M.gguf) | Q3_K_M | 4.02 GB | 7.98 GB |
| [meta-llama-3-8b-instruct.Q3_K_L.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q3_K_L.gguf) | Q3_K_L | 4.32 GB | 8.27 GB |
| [meta-llama-3-8b-instruct.Q4_0.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q4_0.gguf) | Q4_0 | 4.66 GB | 8.58 GB |
| [meta-llama-3-8b-instruct.Q4_K_S.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q4_K_S.gguf) | Q4_K_S | 4.69 GB | 8.61 GB |
| [meta-llama-3-8b-instruct.Q4_K_M.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q4_K_M.gguf) | Q4_K_M | 4.92 GB | 8.82 GB |
| [meta-llama-3-8b-instruct.Q4_K.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q4_K.gguf) | Q4_K | 4.92 GB | 8.82 GB |
| [meta-llama-3-8b-instruct.Q4_1.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q4_1.gguf) | Q4_1 | 5.13 GB | 9.02 GB |
| [meta-llama-3-8b-instruct.Q5_0.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q5_0.gguf) | Q5_0 | 5.60 GB | 9.46 GB |
| [meta-llama-3-8b-instruct.Q5_K_S.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q5_K_S.gguf) | Q5_K_S | 5.60 GB | 9.46 GB |
| [meta-llama-3-8b-instruct.Q5_K_M.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q5_K_M.gguf) | Q5_K_M | 5.73 GB | 9.58 GB |
| [meta-llama-3-8b-instruct.Q5_K.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q5_K.gguf) | Q5_K | 5.73 GB | 9.58 GB |
| [meta-llama-3-8b-instruct.Q5_1.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q5_1.gguf) | Q5_1 | 6.07 GB | 9.89 GB |
| [meta-llama-3-8b-instruct.Q6_K.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q6_K.gguf) | Q6_K | 6.60 GB | 10.38 GB |
| [meta-llama-3-8b-instruct.Q8_0.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.Q8_0.gguf) | Q8_0 | 8.54 GB | 12.19 GB |
| [meta-llama-3-8b-instruct.f16.gguf](https://huggingface.co/SanctumAI/Meta-Llama-3-8B-Instruct-GGUF/blob/main/meta-llama-3-8b-instruct.f16.gguf) | f16 | 16.07 GB | 19.21 GB |
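One way to use the table is to pick the largest file whose estimated memory requirement fits a given RAM/vRAM budget. The sketch below does that. The sizes are copied from the table. The `pick_quant` and `file_name` helpers and the "largest file that fits" rule are illustrative assumptions and are not part of the Sanctum application. Real memory usage may differ from these estimates, for example with longer contexts.

```python
from typing import List, NamedTuple, Optional


class QuantFile(NamedTuple):
    method: str       # quantization method, e.g. "Q4_K_M"
    size_gb: float    # file size on disk (GB)
    memory_gb: float  # estimated RAM/vRAM required (GB)


# Values copied from the Hardware Requirements Estimate table.
QUANT_FILES: List[QuantFile] = [
    QuantFile("Q2_K", 3.18, 7.20),
    QuantFile("Q3_K_S", 3.67, 7.65),
    QuantFile("Q3_K_M", 4.02, 7.98),
    QuantFile("Q3_K_L", 4.32, 8.27),
    QuantFile("Q4_0", 4.66, 8.58),
    QuantFile("Q4_K_S", 4.69, 8.61),
    QuantFile("Q4_K_M", 4.92, 8.82),
    QuantFile("Q4_K", 4.92, 8.82),
    QuantFile("Q4_1", 5.13, 9.02),
    QuantFile("Q5_0", 5.60, 9.46),
    QuantFile("Q5_K_S", 5.60, 9.46),
    QuantFile("Q5_K_M", 5.73, 9.58),
    QuantFile("Q5_K", 5.73, 9.58),
    QuantFile("Q5_1", 6.07, 9.89),
    QuantFile("Q6_K", 6.60, 10.38),
    QuantFile("Q8_0", 8.54, 12.19),
    QuantFile("f16", 16.07, 19.21),
]


def pick_quant(budget_gb: float, files: List[QuantFile] = QUANT_FILES) -> Optional[QuantFile]:
    """Return the largest file whose estimated memory fits within budget_gb, or None."""
    fitting = [f for f in files if f.memory_gb <= budget_gb]
    if not fitting:
        return None
    # On ties (same file size), max() keeps the first entry in table order.
    return max(fitting, key=lambda f: f.size_gb)


def file_name(q: QuantFile) -> str:
    """Build the file name using the naming pattern shown in the table."""
    return f"meta-llama-3-8b-instruct.{q.method}.gguf"


if __name__ == "__main__":
    choice = pick_quant(8.0)
    print(file_name(choice) if choice else "no file fits")
```

With an 8 GB budget, this selects the Q3_K_M file (7.98 GB estimated). A budget below 7.20 GB returns `None`.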

## Disclaimer

Sanctum is not the creator, originator, or owner of any Model featured in the Models section of the Sanctum application. Each Model is created and provided by third parties. Sanctum does not endorse, support, represent or guarantee the completeness, truthfulness, accuracy, or reliability of any Model listed there. You understand that supported Models can produce content that might be offensive, harmful, inaccurate or otherwise inappropriate, or deceptive. Each Model is the sole responsibility of the person or entity who originated such Model. Sanctum may not monitor or control the Models supported and cannot, and does not, take responsibility for any such Model. Sanctum disclaims all warranties or guarantees about the accuracy, reliability or benefits of the Models. Sanctum further disclaims any warranty that the Model will meet your requirements, be secure, uninterrupted or available at any time or location, or error-free, virus-free, or that any errors will be corrected, or otherwise. You will be solely responsible for any damage resulting from your use of or access to the Models, your downloading of any Model, or use of any other Model provided by or through Sanctum.

|

microsoft/speecht5_hifigan | microsoft | "2023-02-02T13:08:06Z" | 271,265 | 14 | transformers | [

"transformers",

"pytorch",

"hifigan",

"audio",

"license:mit",

"endpoints_compatible",

"region:us"

] | null | "2023-02-02T13:06:10Z" | ---

license: mit

tags:

- audio

---

# SpeechT5 HiFi-GAN Vocoder

This is the HiFi-GAN vocoder for use with the SpeechT5 text-to-speech and voice conversion models.

SpeechT5 was first released in [this repository](https://github.com/microsoft/SpeechT5/), with the [original weights](https://huggingface.co/mechanicalsea/speecht5-tts) hosted separately. It is released under the [MIT](https://github.com/microsoft/SpeechT5/blob/main/LICENSE) license.

Disclaimer: The team releasing SpeechT5 did not write a model card for this model so this model card has been written by the Hugging Face team.

## Citation

**BibTeX:**

```bibtex

@inproceedings{ao-etal-2022-speecht5,

title = {{S}peech{T}5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing},

author = {Ao, Junyi and Wang, Rui and Zhou, Long and Wang, Chengyi and Ren, Shuo and Wu, Yu and Liu, Shujie and Ko, Tom and Li, Qing and Zhang, Yu and Wei, Zhihua and Qian, Yao and Li, Jinyu and Wei, Furu},

booktitle = {Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)},

month = {May},

year = {2022},

pages={5723--5738},

}

```

|

google/gemma-7b-it | google | "2024-06-27T14:09:41Z" | 270,986 | 1,101 | transformers | [

"transformers",

"safetensors",

"gguf",

"gemma",

"text-generation",

"conversational",

"arxiv:2312.11805",

"arxiv:2009.03300",

"arxiv:1905.07830",

"arxiv:1911.11641",

"arxiv:1904.09728",

"arxiv:1905.10044",

"arxiv:1907.10641",

"arxiv:1811.00937",

"arxiv:1809.02789",

"arxiv:1911.01547",

"arxiv:1705.03551",

"arxiv:2107.03374",

"arxiv:2108.07732",

"arxiv:2110.14168",

"arxiv:2304.06364",

"arxiv:2206.04615",

"arxiv:1804.06876",

"arxiv:2110.08193",

"arxiv:2009.11462",

"arxiv:2101.11718",

"arxiv:1804.09301",

"arxiv:2109.07958",

"arxiv:2203.09509",

"license:gemma",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | "2024-02-13T01:07:30Z" | ---

library_name: transformers
license: gemma
tags: []
widget:
- messages:
  - role: user
    content: How does the brain work?
inference:
  parameters:
    max_new_tokens: 200
extra_gated_heading: Access Gemma on Hugging Face
extra_gated_prompt: To access Gemma on Hugging Face, you’re required to review and
  agree to Google’s usage license. To do this, please ensure you’re logged-in to Hugging
  Face and click below. Requests are processed immediately.
extra_gated_button_content: Acknowledge license

---

# Gemma Model Card

**Model Page**: [Gemma](https://ai.google.dev/gemma/docs)

This model card corresponds to the 7B instruct version of the Gemma model. You can also visit the model card of the [2B base model](https://huggingface.co/google/gemma-2b), [7B base model](https://huggingface.co/google/gemma-7b), and [2B instruct model](https://huggingface.co/google/gemma-2b-it).

**Resources and Technical Documentation**:

* [Responsible Generative AI Toolkit](https://ai.google.dev/responsible)

* [Gemma on Kaggle](https://www.kaggle.com/models/google/gemma)

* [Gemma on Vertex Model Garden](https://console.cloud.google.com/vertex-ai/publishers/google/model-garden/335?version=gemma-7b-it-gg-hf)

**Terms of Use**: [Terms](https://www.kaggle.com/models/google/gemma/license/consent/verify/huggingface?returnModelRepoId=google/gemma-7b-it)

**Authors**: Google

## Model Information

Summary description and brief definition of inputs and outputs.

### Description

Gemma is a family of lightweight, state-of-the-art open models from Google, built from the same research and technology used to create the Gemini models. They are text-to-text, decoder-only large language models, available in English, with open weights, pre-trained variants, and instruction-tuned variants. Gemma models are well-suited for a variety of text generation tasks, including question answering, summarization, and reasoning. Their relatively small size makes it possible to deploy them in environments with limited resources such as a laptop, desktop or your own cloud infrastructure, democratizing access to state-of-the-art AI models and helping foster innovation for everyone.

### Usage

Below are some code snippets to help you get started quickly with running the model. First, make sure to `pip install -U transformers`, then copy the snippet from the section that is relevant for your use case.

#### Fine-tuning the model

You can find fine-tuning scripts and notebooks under the [`examples/` directory](https://huggingface.co/google/gemma-7b/tree/main/examples) of the [`google/gemma-7b`](https://huggingface.co/google/gemma-7b) repository. To adapt them to this model, simply change the model ID to `google/gemma-7b-it`.

In that repository, we provide:

* A script to perform Supervised Fine-Tuning (SFT) on the UltraChat dataset using QLoRA

* A script to perform SFT using FSDP on TPU devices

* A notebook that you can run on a free-tier Google Colab instance to perform SFT on an English quotes dataset

#### Running the model on a CPU

As explained below, we recommend `torch.bfloat16` as the default dtype. You can use [a different precision](#precisions) if necessary.

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-7b-it",

torch_dtype=torch.bfloat16

)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt")

outputs = model.generate(**input_ids)

print(tokenizer.decode(outputs[0]))

```

#### Running the model on a single GPU or multiple GPUs

```python

# pip install accelerate

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-7b-it",

device_map="auto",

torch_dtype=torch.bfloat16

)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

print(tokenizer.decode(outputs[0]))

```

<a name="precisions"></a>

#### Running the model on a GPU using different precisions

The native weights of this model were exported in `bfloat16` precision. You can use `float16`, which may be faster on certain hardware, by passing the corresponding `torch_dtype` when loading the model. For convenience, the `float16` revision of the repo contains a copy of the weights already converted to that precision.

You can also use `float32` if you omit the dtype, but this does not increase precision (the model weights are simply upcast to `float32`). See the examples below.

* _Using `torch.float16`_

```python

# pip install accelerate

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-7b-it",

device_map="auto",

torch_dtype=torch.float16,

revision="float16",

)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

print(tokenizer.decode(outputs[0]))

```

* _Using `torch.bfloat16`_

```python

# pip install accelerate

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")

model = AutoModelForCausalLM.from_pretrained("google/gemma-7b-it", device_map="auto", torch_dtype=torch.bfloat16)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

print(tokenizer.decode(outputs[0]))

```

* _Upcasting to `torch.float32`_

```python

# pip install accelerate

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-7b-it",

device_map="auto"

)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

print(tokenizer.decode(outputs[0]))

```
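Upcasting `bfloat16` weights to `float32` cannot add precision because a `bfloat16` value is the upper 16 bits of a `float32`: it has the same sign bit and 8-bit exponent, but a shorter mantissa. The pure-Python sketch below illustrates this using only the standard `struct` module. For simplicity it converts to `bfloat16` by truncation (real converters usually round to nearest). It then upcasts by padding the low 16 bits with zeros. The upcast value is exact, but the bits dropped by the original conversion are not recovered.

```python
import struct


def float32_bits(x: float) -> int:
    """Return the IEEE-754 float32 bit pattern of x as an unsigned int."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    return bits


def to_bfloat16_bits(x: float) -> int:
    """Keep the top 16 bits of the float32 pattern (truncation, for simplicity)."""
    return float32_bits(x) >> 16


def bfloat16_to_float32(bits16: int) -> float:
    """Upcast: put the bfloat16 bits in the high half and zero the low half."""
    (value,) = struct.unpack("<f", struct.pack("<I", bits16 << 16))
    return value


w_bf16 = to_bfloat16_bits(0.1)        # a weight "stored" in bfloat16
w_fp32 = bfloat16_to_float32(w_bf16)  # the same weight after upcasting to float32

print(hex(w_bf16), w_fp32)  # 0x3dcc 0.099609375
```

After upcasting, the weight is `0.099609375` rather than `0.1`. Its low 16 float32 bits are all zero, so `float32` storage holds exactly the same information as the `bfloat16` original.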

#### Quantized Versions through `bitsandbytes`

* _Using 8-bit precision (int8)_

```python

# pip install bitsandbytes accelerate

from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

quantization_config = BitsAndBytesConfig(load_in_8bit=True)

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")

model = AutoModelForCausalLM.from_pretrained("google/gemma-7b-it", quantization_config=quantization_config)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

print(tokenizer.decode(outputs[0]))

```

* _Using 4-bit precision_

```python

# pip install bitsandbytes accelerate

from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

quantization_config = BitsAndBytesConfig(load_in_4bit=True)

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it")

model = AutoModelForCausalLM.from_pretrained("google/gemma-7b-it", quantization_config=quantization_config)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

print(tokenizer.decode(outputs[0]))

```
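A rough rule of thumb explains why 8-bit and 4-bit loading help: weight memory scales with the number of bits stored per parameter. The sketch below counts weights only. It ignores activations, the KV cache, quantization metadata, and any layers that stay in higher precision. The `weight_memory_gb` helper is hypothetical, and the 7-billion parameter count in the example is a round illustrative number, not this model's exact size.

```python
# Approximate bits stored per parameter for each loading option shown in this card.
BITS_PER_PARAM = {
    "float32": 32,
    "bfloat16": 16,
    "float16": 16,
    "int8": 8,  # BitsAndBytesConfig(load_in_8bit=True)
    "4bit": 4,  # BitsAndBytesConfig(load_in_4bit=True)
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Estimate weight storage in GB (1 GB = 1e9 bytes); weights only."""
    bytes_per_param = BITS_PER_PARAM[dtype] / 8
    return num_params * bytes_per_param / 1e9


# Hypothetical round parameter count, for illustration only.
for dtype in BITS_PER_PARAM:
    print(f"{dtype:>8}: {weight_memory_gb(7e9, dtype):.1f} GB")
```

For 7 billion parameters, this gives 28.0 GB in `float32`, 14.0 GB in `bfloat16`/`float16`, 7.0 GB in 8-bit and 3.5 GB in 4-bit.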

#### Other optimizations

* _Flash Attention 2_

First, make sure `flash-attn` is installed in your environment (`pip install flash-attn`). Then pass `attn_implementation="flash_attention_2"` when loading the model:

```diff

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.float16,

+ attn_implementation="flash_attention_2"

).to(0)

```

### Chat Template

The instruction-tuned models use a chat template that must be adhered to for conversational use.

The easiest way to apply it is using the tokenizer's built-in chat template, as shown in the following snippet.

Let's load the model and apply the chat template to a conversation. In this example, we'll start with a single user interaction:

```py

from transformers import AutoTokenizer, AutoModelForCausalLM


import torch

model_id = "google/gemma-7b-it"

dtype = torch.bfloat16

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

device_map="cuda",

torch_dtype=dtype,

)

chat = [

{ "role": "user", "content": "Write a hello world program" },

]

prompt = tokenizer.apply_chat_template(chat, tokenize=False, add_generation_prompt=True)

```

At this point, the prompt contains the following text:

```
<bos><start_of_turn>user
Write a hello world program<end_of_turn>
<start_of_turn>model
```

As you can see, each turn is preceded by a `<start_of_turn>` delimiter and then the role of the entity (either `user`, for content supplied by the user, or `model` for LLM responses). Turns finish with the `<end_of_turn>` token.

You can follow this format to build the prompt manually if you need to do it without the tokenizer's chat template.
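The sketch below builds the prompt by hand. The `build_prompt` helper is hypothetical and is not part of `transformers`. It accepts only the `user` and `model` roles described above, and it reproduces the single-turn prompt shown earlier. Prefer `tokenizer.apply_chat_template` whenever the tokenizer is available. The string already starts with `<bos>`, so encode it with `add_special_tokens=False`, as in the generation snippet.

```python
from typing import Dict, List


def build_prompt(turns: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Format a conversation with the <start_of_turn>/<end_of_turn> delimiters."""
    parts = ["<bos>"]
    for turn in turns:
        role = turn["role"]
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{turn['content']}<end_of_turn>\n")
    if add_generation_prompt:
        # Open a model turn so that generation continues as the model's reply.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


chat = [{"role": "user", "content": "Write a hello world program"}]
print(build_prompt(chat))
```

For multi-turn conversations, append earlier model replies as `{"role": "model", "content": ...}` entries between the user turns.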

After the prompt is ready, generation can be performed like this:

```py

inputs = tokenizer.encode(prompt, add_special_tokens=False, return_tensors="pt")

outputs = model.generate(input_ids=inputs.to(model.device), max_new_tokens=150)

print(tokenizer.decode(outputs[0]))

```

### Inputs and outputs

* **Input:** Text string, such as a question, a prompt, or a document to be summarized.
* **Output:** Generated English-language text in response to the input, such as an answer to a question, or a summary of a document.

## Model Data

Data used for model training and how the data was processed.

### Training Dataset

These models were trained on a dataset of text data that includes a wide variety of sources, totaling 6 trillion tokens. Here are the key components:

* Web Documents: A diverse collection of web text ensures the model is exposed to a broad range of linguistic styles, topics, and vocabulary. Primarily English-language content.
* Code: Exposing the model to code helps it to learn the syntax and patterns of programming languages, which improves its ability to generate code or understand code-related questions.
* Mathematics: Training on mathematical text helps the model learn logical reasoning, symbolic representation, and to address mathematical queries.

The combination of these diverse data sources is crucial for training a powerful language model that can handle a wide variety of tasks and text formats.

### Data Preprocessing

Here are the key data cleaning and filtering methods applied to the training data:

* CSAM Filtering: Rigorous CSAM (Child Sexual Abuse Material) filtering was applied at multiple stages in the data preparation process to ensure the exclusion of harmful and illegal content.
* Sensitive Data Filtering: As part of making Gemma pre-trained models safe and reliable, automated techniques were used to filter out certain personal information and other sensitive data from training sets.
* Additional methods: Filtering based on content quality and safety in line with [our policies](https://storage.googleapis.com/gweb-uniblog-publish-prod/documents/2023_Google_AI_Principles_Progress_Update.pdf#page=11).

## Implementation Information

Details about the model internals.

### Hardware

Gemma was trained using the latest generation of [Tensor Processing Unit (TPU)](https://cloud.google.com/tpu/docs/intro-to-tpu) hardware (TPUv5e).

Training large language models requires significant computational power. TPUs, designed specifically for matrix operations common in machine learning, offer several advantages in this domain:

* Performance: TPUs are specifically designed to handle the massive computations involved in training LLMs. They can speed up training considerably compared to CPUs.
* Memory: TPUs often come with large amounts of high-bandwidth memory, allowing for the handling of large models and batch sizes during training. This can lead to better model quality.
* Scalability: TPU Pods (large clusters of TPUs) provide a scalable solution for handling the growing complexity of large foundation models. You can distribute training across multiple TPU devices for faster and more efficient processing.
* Cost-effectiveness: In many scenarios, TPUs can provide a more cost-effective solution for training large models compared to CPU-based infrastructure, especially when considering the time and resources saved due to faster training.

These advantages are aligned with [Google's commitments to operate sustainably](https://sustainability.google/operating-sustainably/).

### Software

Training was done using [JAX](https://github.com/google/jax) and [ML Pathways](https://blog.google/technology/ai/introducing-pathways-next-generation-ai-architecture).

JAX allows researchers to take advantage of the latest generation of hardware, including TPUs, for faster and more efficient training of large models.

ML Pathways is Google's latest effort to build artificially intelligent systems capable of generalizing across multiple tasks. This is especially suitable for [foundation models](https://ai.google/discover/foundation-models/), including large language models like these.

Together, JAX and ML Pathways are used as described in the [paper about the Gemini family of models](https://arxiv.org/abs/2312.11805): "the 'single controller' programming model of Jax and Pathways allows a single Python process to orchestrate the entire training run, dramatically simplifying the development workflow."

## Evaluation

Model evaluation metrics and results.

### Benchmark Results

These models were evaluated against a large collection of different datasets and metrics to cover different aspects of text generation:

| Benchmark | Metric | 2B Params | 7B Params |
| ------------------------------ | ------------- | ----------- | --------- |
| [MMLU](https://arxiv.org/abs/2009.03300) | 5-shot, top-1 | 42.3 | 64.3 |
| [HellaSwag](https://arxiv.org/abs/1905.07830) | 0-shot | 71.4 | 81.2 |
| [PIQA](https://arxiv.org/abs/1911.11641) | 0-shot | 77.3 | 81.2 |
| [SocialIQA](https://arxiv.org/abs/1904.09728) | 0-shot | 49.7 | 51.8 |
| [BoolQ](https://arxiv.org/abs/1905.10044) | 0-shot | 69.4 | 83.2 |
| [WinoGrande](https://arxiv.org/abs/1907.10641) | partial score | 65.4 | 72.3 |
| [CommonsenseQA](https://arxiv.org/abs/1811.00937) | 7-shot | 65.3 | 71.3 |
| [OpenBookQA](https://arxiv.org/abs/1809.02789) | | 47.8 | 52.8 |
| [ARC-e](https://arxiv.org/abs/1911.01547) | | 73.2 | 81.5 |
| [ARC-c](https://arxiv.org/abs/1911.01547) | | 42.1 | 53.2 |
| [TriviaQA](https://arxiv.org/abs/1705.03551) | 5-shot | 53.2 | 63.4 |
| [Natural Questions](https://github.com/google-research-datasets/natural-questions) | 5-shot | 12.5 | 23 |
| [HumanEval](https://arxiv.org/abs/2107.03374) | pass@1 | 22.0 | 32.3 |
| [MBPP](https://arxiv.org/abs/2108.07732) | 3-shot | 29.2 | 44.4 |
| [GSM8K](https://arxiv.org/abs/2110.14168) | maj@1 | 17.7 | 46.4 |
| [MATH](https://arxiv.org/abs/2103.03874) | 4-shot | 11.8 | 24.3 |
| [AGIEval](https://arxiv.org/abs/2304.06364) | | 24.2 | 41.7 |
| [BIG-Bench](https://arxiv.org/abs/2206.04615) | | 35.2 | 55.1 |
| **Average** | | **45.0** | **56.9** |
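The **Average** row matches the unweighted arithmetic mean of the 18 benchmark scores in each column. The sketch below recomputes it from the table values and reproduces 45.0 and 56.9 after rounding to one decimal place.

```python
from statistics import mean

# (2B, 7B) scores copied from the benchmark table above.
SCORES = {
    "MMLU": (42.3, 64.3),
    "HellaSwag": (71.4, 81.2),
    "PIQA": (77.3, 81.2),
    "SocialIQA": (49.7, 51.8),
    "BoolQ": (69.4, 83.2),
    "WinoGrande": (65.4, 72.3),
    "CommonsenseQA": (65.3, 71.3),
    "OpenBookQA": (47.8, 52.8),
    "ARC-e": (73.2, 81.5),
    "ARC-c": (42.1, 53.2),
    "TriviaQA": (53.2, 63.4),
    "Natural Questions": (12.5, 23.0),
    "HumanEval": (22.0, 32.3),
    "MBPP": (29.2, 44.4),
    "GSM8K": (17.7, 46.4),
    "MATH": (11.8, 24.3),
    "AGIEval": (24.2, 41.7),
    "BIG-Bench": (35.2, 55.1),
}

# Unweighted mean over all benchmarks, regardless of metric or shot count.
avg_2b = mean(s[0] for s in SCORES.values())
avg_7b = mean(s[1] for s in SCORES.values())
print(f"2B: {avg_2b:.1f}, 7B: {avg_7b:.1f}")  # 2B: 45.0, 7B: 56.9
```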

## Ethics and Safety

Ethics and safety evaluation approach and results.

### Evaluation Approach

Our evaluation methods include structured evaluations and internal red-teaming testing of relevant content policies. Red-teaming was conducted by a number of different teams, each with different goals and human evaluation metrics. These models were evaluated against a number of different categories relevant to ethics and safety, including:

* Text-to-Text Content Safety: Human evaluation on prompts covering safety policies including child sexual abuse and exploitation, harassment, violence and gore, and hate speech.
* Text-to-Text Representational Harms: Benchmark against relevant academic datasets such as [WinoBias](https://arxiv.org/abs/1804.06876) and [BBQ Dataset](https://arxiv.org/abs/2110.08193v2).
* Memorization: Automated evaluation of memorization of training data, including the risk of personally identifiable information exposure.
* Large-scale harm: Tests for "dangerous capabilities," such as chemical, biological, radiological, and nuclear (CBRN) risks.

### Evaluation Results

The results of ethics and safety evaluations are within acceptable thresholds for meeting [internal policies](https://storage.googleapis.com/gweb-uniblog-publish-prod/documents/2023_Google_AI_Principles_Progress_Update.pdf#page=11) for categories such as child safety, content safety, representational harms, memorization, and large-scale harms. On top of robust internal evaluations, the results of well-known safety benchmarks like BBQ, BOLD, Winogender, Winobias, RealToxicity, and TruthfulQA are shown here.

| Benchmark | Metric | 2B Params | 7B Params |
| ------------------------------ | ------------- | ----------- | --------- |
| [RealToxicity](https://arxiv.org/abs/2009.11462) | average | 6.86 | 7.90 |
| [BOLD](https://arxiv.org/abs/2101.11718) | | 45.57 | 49.08 |
| [CrowS-Pairs](https://aclanthology.org/2020.emnlp-main.154/) | top-1 | 45.82 | 51.33 |
| [BBQ Ambig](https://arxiv.org/abs/2110.08193v2) | 1-shot, top-1 | 62.58 | 92.54 |
| [BBQ Disambig](https://arxiv.org/abs/2110.08193v2) | top-1 | 54.62 | 71.99 |
| [Winogender](https://arxiv.org/abs/1804.09301) | top-1 | 51.25 | 54.17 |
| [TruthfulQA](https://arxiv.org/abs/2109.07958) | | 44.84 | 31.81 |
| [Winobias 1_2](https://arxiv.org/abs/1804.06876) | | 56.12 | 59.09 |
| [Winobias 2_2](https://arxiv.org/abs/1804.06876) | | 91.10 | 92.23 |
| [Toxigen](https://arxiv.org/abs/2203.09509) | | 29.77 | 39.59 |

## Usage and Limitations

These models have certain limitations that users should be aware of.

### Intended Usage

Open Large Language Models (LLMs) have a wide range of applications across various industries and domains. The following list of potential uses is not comprehensive. The purpose of this list is to provide contextual information about the possible use-cases that the model creators considered as part of model training and development.

* Content Creation and Communication
    * Text Generation: These models can be used to generate creative text formats such as poems, scripts, code, marketing copy, and email drafts.
    * Chatbots and Conversational AI: Power conversational interfaces for customer service, virtual assistants, or interactive applications.
    * Text Summarization: Generate concise summaries of a text corpus, research papers, or reports.
* Research and Education
    * Natural Language Processing (NLP) Research: These models can serve as a foundation for researchers to experiment with NLP techniques, develop algorithms, and contribute to the advancement of the field.
    * Language Learning Tools: Support interactive language learning experiences, aiding in grammar correction or providing writing practice.
    * Knowledge Exploration: Assist researchers in exploring large bodies of text by generating summaries or answering questions about specific topics.

### Limitations

* Training Data
    * The quality and diversity of the training data significantly influence the model's capabilities. Biases or gaps in the training data can lead to limitations in the model's responses.
    * The scope of the training dataset determines the subject areas the model can handle effectively.
* Context and Task Complexity
    * LLMs are better at tasks that can be framed with clear prompts and instructions. Open-ended or highly complex tasks might be challenging.
    * A model's performance can be influenced by the amount of context provided (longer context generally leads to better outputs, up to a certain point).
* Language Ambiguity and Nuance
    * Natural language is inherently complex. LLMs might struggle to grasp subtle nuances, sarcasm, or figurative language.
* Factual Accuracy
    * LLMs generate responses based on information they learned from their training datasets, but they are not knowledge bases. They may generate incorrect or outdated factual statements.
* Common Sense
    * LLMs rely on statistical patterns in language. They might lack the ability to apply common sense reasoning in certain situations.

### Ethical Considerations and Risks

The development of large language models (LLMs) raises several ethical concerns. In creating an open model, we have carefully considered the following:

* Bias and Fairness
    * LLMs trained on large-scale, real-world text data can reflect socio-cultural biases embedded in the training material. These models underwent careful scrutiny; the input data pre-processing and the posterior evaluations are described in this card.
* Misinformation and Misuse
    * LLMs can be misused to generate text that is false, misleading, or harmful.
    * Guidelines for responsible use of the model are provided in the [Responsible Generative AI Toolkit](http://ai.google.dev/gemma/responsible).
* Transparency and Accountability
    * This model card summarizes details on the models' architecture, capabilities, limitations, and evaluation processes.
    * A responsibly developed open model offers the opportunity to share innovation by making LLM technology accessible to developers and researchers across the AI ecosystem.

Risks identified and mitigations:

* Perpetuation of biases: It's encouraged to perform continuous monitoring (using evaluation metrics, human review) and the exploration of de-biasing techniques during model training, fine-tuning, and other use cases.
* Generation of harmful content: Mechanisms and guidelines for content safety are essential. Developers are encouraged to exercise caution and implement appropriate content safety safeguards based on their specific product policies and application use cases.
* Misuse for malicious purposes: Technical limitations and developer and end-user education can help mitigate against malicious applications of LLMs. Educational resources and reporting mechanisms for users to flag misuse are provided. Prohibited uses of Gemma models are outlined in the [Gemma Prohibited Use Policy](https://ai.google.dev/gemma/prohibited_use_policy).
* Privacy violations: Models were trained on data filtered for removal of PII (Personally Identifiable Information). Developers are encouraged to adhere to privacy regulations with privacy-preserving techniques.

### Benefits

At the time of release, this family of models provides high-performance open large language model implementations designed from the ground up for Responsible AI development compared to similarly sized models.

Using the benchmark evaluation metrics described in this document, these models have been shown to provide superior performance to other, comparably-sized open model alternatives.

|

Helsinki-NLP/opus-mt-mul-en | Helsinki-NLP | "2023-08-16T12:01:25Z" | 269,809 | 57 | transformers | [

"transformers",

"pytorch",

"tf",

"marian",

"text2text-generation",

"translation",

"ca",

"es",

"os",

"eo",

"ro",

"fy",

"cy",

"is",

"lb",

"su",

"an",

"sq",

"fr",

"ht",

"rm",

"cv",

"ig",

"am",

"eu",

"tr",

"ps",

"af",

"ny",

"ch",

"uk",

"sl",

"lt",

"tk",

"sg",

"ar",

"lg",

"bg",

"be",

"ka",

"gd",

"ja",

"si",

"br",

"mh",

"km",

"th",

"ty",

"rw",

"te",

"mk",

"or",

"wo",

"kl",

"mr",

"ru",

"yo",

"hu",

"fo",

"zh",

"ti",

"co",

"ee",

"oc",

"sn",

"mt",

"ts",

"pl",

"gl",

"nb",

"bn",

"tt",

"bo",

"lo",

"id",

"gn",

"nv",

"hy",

"kn",

"to",

"io",

"so",

"vi",

"da",

"fj",

"gv",

"sm",

"nl",

"mi",

"pt",

"hi",

"se",

"as",

"ta",

"et",

"kw",

"ga",

"sv",

"ln",

"na",

"mn",

"gu",

"wa",

"lv",

"jv",

"el",

"my",

"ba",

"it",

"hr",

"ur",

"ce",

"nn",

"fi",

"mg",

"rn",

"xh",

"ab",

"de",

"cs",

"he",

"zu",

"yi",

"ml",

"mul",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | translation | "2022-03-02T23:29:04Z" | ---

language:

- ca

- es

- os

- eo

- ro

- fy

- cy

- is

- lb

- su

- an

- sq

- fr

- ht

- rm

- cv

- ig

- am

- eu

- tr

- ps

- af

- ny

- ch

- uk

- sl

- lt

- tk

- sg

- ar

- lg

- bg

- be

- ka

- gd

- ja

- si

- br

- mh

- km

- th

- ty

- rw

- te

- mk

- or

- wo

- kl

- mr

- ru

- yo

- hu

- fo

- zh

- ti

- co

- ee

- oc

- sn

- mt

- ts

- pl

- gl

- nb

- bn

- tt

- bo

- lo

- id

- gn

- nv

- hy

- kn

- to

- io

- so

- vi

- da

- fj

- gv

- sm

- nl

- mi

- pt

- hi

- se

- as

- ta

- et

- kw

- ga

- sv

- ln

- na

- mn

- gu

- wa

- lv

- jv

- el

- my

- ba

- it

- hr

- ur

- ce

- nn

- fi

- mg

- rn

- xh

- ab

- de

- cs

- he

- zu

- yi

- ml

- mul

- en

tags:

- translation

license: apache-2.0

---

### mul-eng

* source group: Multiple languages

* target group: English

* OPUS readme: [mul-eng](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/mul-eng/README.md)

* model: transformer

* source language(s): abk acm ady afb afh_Latn afr akl_Latn aln amh ang_Latn apc ara arg arq ary arz asm ast avk_Latn awa aze_Latn bak bam_Latn bel bel_Latn ben bho bod bos_Latn bre brx brx_Latn bul bul_Latn cat ceb ces cha che chr chv cjy_Hans cjy_Hant cmn cmn_Hans cmn_Hant cor cos crh crh_Latn csb_Latn cym dan deu dsb dtp dws_Latn egl ell enm_Latn epo est eus ewe ext fao fij fin fkv_Latn fra frm_Latn frr fry fuc fuv gan gcf_Latn gil gla gle glg glv gom gos got_Goth grc_Grek grn gsw guj hat hau_Latn haw heb hif_Latn hil hin hnj_Latn hoc hoc_Latn hrv hsb hun hye iba ibo ido ido_Latn ike_Latn ile_Latn ilo ina_Latn ind isl ita izh jav jav_Java jbo jbo_Cyrl jbo_Latn jdt_Cyrl jpn kab kal kan kat kaz_Cyrl kaz_Latn kek_Latn kha khm khm_Latn kin kir_Cyrl kjh kpv krl ksh kum kur_Arab kur_Latn lad lad_Latn lao lat_Latn lav ldn_Latn lfn_Cyrl lfn_Latn lij lin lit liv_Latn lkt lld_Latn lmo ltg ltz lug lzh lzh_Hans mad mah mai mal mar max_Latn mdf mfe mhr mic min mkd mlg mlt mnw moh mon mri mwl mww mya myv nan nau nav nds niu nld nno nob nob_Hebr nog non_Latn nov_Latn npi nya oci ori orv_Cyrl oss ota_Arab ota_Latn pag pan_Guru pap pau pdc pes pes_Latn pes_Thaa pms pnb pol por ppl_Latn prg_Latn pus quc qya qya_Latn rap rif_Latn roh rom ron rue run rus sag sah san_Deva scn sco sgs shs_Latn shy_Latn sin sjn_Latn slv sma sme smo sna snd_Arab som spa sqi srp_Cyrl srp_Latn stq sun swe swg swh tah tam tat tat_Arab tat_Latn tel tet tgk_Cyrl tha tir tlh_Latn tly_Latn tmw_Latn toi_Latn ton tpw_Latn tso tuk tuk_Latn tur tvl tyv tzl tzl_Latn udm uig_Arab uig_Cyrl ukr umb urd uzb_Cyrl uzb_Latn vec vie vie_Hani vol_Latn vro war wln wol wuu xal xho yid yor yue yue_Hans yue_Hant zho zho_Hans zho_Hant zlm_Latn zsm_Latn zul zza

* target language(s): eng


* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus2m-2020-08-01.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/mul-eng/opus2m-2020-08-01.zip)

* test set translations: [opus2m-2020-08-01.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/mul-eng/opus2m-2020-08-01.test.txt)

* test set scores: [opus2m-2020-08-01.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/mul-eng/opus2m-2020-08-01.eval.txt)
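Each entry in the source-language list is an ISO 639-3 code. Some entries also carry an ISO 15924 script suffix (e.g. `kaz_Cyrl` vs. `kaz_Latn`, `cmn_Hans` vs. `cmn_Hant`), so one language can appear several times. The helper below is a minimal, hypothetical sketch (not part of the OPUS-MT tooling) that groups the labels by base language. It assumes that a bare code such as `bel` stands for the language's default script, which the card does not state.

```python
from collections import defaultdict

# Abbreviated copy of the "source language(s)" field above; paste the full
# string to process every label.
SOURCE_LANGS = "bel bel_Latn cmn cmn_Hans cmn_Hant kaz_Cyrl kaz_Latn srp_Cyrl srp_Latn zul"


def group_by_language(field: str) -> dict[str, list[str]]:
    """Map each ISO 639-3 code to the script variants listed for it.

    A bare code (no "_Xxxx" suffix) is recorded as "default"; this is an
    assumption, since the card does not say which script a bare code implies.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for label in field.split():
        # "kaz_Cyrl" -> ("kaz", "_", "Cyrl"); "zul" -> ("zul", "", "")
        lang, _, script = label.partition("_")
        groups[lang].append(script or "default")
    return dict(groups)


if __name__ == "__main__":
    for lang, scripts in group_by_language(SOURCE_LANGS).items():
        print(lang, scripts)
```

Running it on the full field shows how many distinct base languages lie behind the longer list of labels.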

## Benchmarks

| testset | BLEU | chr-F |
|-----------------------|-------|-------|
| newsdev2014-hineng.hin.eng | 8.5 | 0.341 |
| newsdev2015-enfi-fineng.fin.eng | 16.8 | 0.441 |
| newsdev2016-enro-roneng.ron.eng | 31.3 | 0.580 |
| newsdev2016-entr-tureng.tur.eng | 16.4 | 0.422 |
| newsdev2017-enlv-laveng.lav.eng | 21.3 | 0.502 |
| newsdev2017-enzh-zhoeng.zho.eng | 12.7 | 0.409 |
| newsdev2018-enet-esteng.est.eng | 19.8 | 0.467 |
| newsdev2019-engu-gujeng.guj.eng | 13.3 | 0.385 |
| newsdev2019-enlt-liteng.lit.eng | 19.9 | 0.482 |
| newsdiscussdev2015-enfr-fraeng.fra.eng | 26.7 | 0.520 |
| newsdiscusstest2015-enfr-fraeng.fra.eng | 29.8 | 0.541 |
| newssyscomb2009-ceseng.ces.eng | 21.1 | 0.487 |
| newssyscomb2009-deueng.deu.eng | 22.6 | 0.499 |
| newssyscomb2009-fraeng.fra.eng | 25.8 | 0.530 |
| newssyscomb2009-huneng.hun.eng | 15.1 | 0.430 |
| newssyscomb2009-itaeng.ita.eng | 29.4 | 0.555 |
| newssyscomb2009-spaeng.spa.eng | 26.1 | 0.534 |
| news-test2008-deueng.deu.eng | 21.6 | 0.491 |
| news-test2008-fraeng.fra.eng | 22.3 | 0.502 |
| news-test2008-spaeng.spa.eng | 23.6 | 0.514 |
| newstest2009-ceseng.ces.eng | 19.8 | 0.480 |
| newstest2009-deueng.deu.eng | 20.9 | 0.487 |
| newstest2009-fraeng.fra.eng | 25.0 | 0.523 |
| newstest2009-huneng.hun.eng | 14.7 | 0.425 |
| newstest2009-itaeng.ita.eng | 27.6 | 0.542 |
| newstest2009-spaeng.spa.eng | 25.7 | 0.530 |
| newstest2010-ceseng.ces.eng | 20.6 | 0.491 |
| newstest2010-deueng.deu.eng | 23.4 | 0.517 |
| newstest2010-fraeng.fra.eng | 26.1 | 0.537 |
| newstest2010-spaeng.spa.eng | 29.1 | 0.561 |
| newstest2011-ceseng.ces.eng | 21.0 | 0.489 |
| newstest2011-deueng.deu.eng | 21.3 | 0.494 |
| newstest2011-fraeng.fra.eng | 26.8 | 0.546 |
| newstest2011-spaeng.spa.eng | 28.2 | 0.549 |
| newstest2012-ceseng.ces.eng | 20.5 | 0.485 |
| newstest2012-deueng.deu.eng | 22.3 | 0.503 |
| newstest2012-fraeng.fra.eng | 27.5 | 0.545 |
| newstest2012-ruseng.rus.eng | 26.6 | 0.532 |
| newstest2012-spaeng.spa.eng | 30.3 | 0.567 |
| newstest2013-ceseng.ces.eng | 22.5 | 0.498 |
| newstest2013-deueng.deu.eng | 25.0 | 0.518 |
| newstest2013-fraeng.fra.eng | 27.4 | 0.537 |
| newstest2013-ruseng.rus.eng | 21.6 | 0.484 |
| newstest2013-spaeng.spa.eng | 28.4 | 0.555 |
| newstest2014-csen-ceseng.ces.eng | 24.0 | 0.517 |
| newstest2014-deen-deueng.deu.eng | 24.1 | 0.511 |
| newstest2014-fren-fraeng.fra.eng | 29.1 | 0.563 |
| newstest2014-hien-hineng.hin.eng | 14.0 | 0.414 |
| newstest2014-ruen-ruseng.rus.eng | 24.0 | 0.521 |
| newstest2015-encs-ceseng.ces.eng | 21.9 | 0.481 |
| newstest2015-ende-deueng.deu.eng | 25.5 | 0.519 |
| newstest2015-enfi-fineng.fin.eng | 17.4 | 0.441 |
| newstest2015-enru-ruseng.rus.eng | 22.4 | 0.494 |
| newstest2016-encs-ceseng.ces.eng | 23.0 | 0.500 |
| newstest2016-ende-deueng.deu.eng | 30.1 | 0.560 |
| newstest2016-enfi-fineng.fin.eng | 18.5 | 0.461 |
| newstest2016-enro-roneng.ron.eng | 29.6 | 0.562 |
| newstest2016-enru-ruseng.rus.eng | 22.0 | 0.495 |
| newstest2016-entr-tureng.tur.eng | 14.8 | 0.415 |
| newstest2017-encs-ceseng.ces.eng | 20.2 | 0.475 |
| newstest2017-ende-deueng.deu.eng | 26.0 | 0.523 |
| newstest2017-enfi-fineng.fin.eng | 19.6 | 0.465 |
| newstest2017-enlv-laveng.lav.eng | 16.2 | 0.454 |
| newstest2017-enru-ruseng.rus.eng | 24.2 | 0.510 |
| newstest2017-entr-tureng.tur.eng | 15.0 | 0.412 |
| newstest2017-enzh-zhoeng.zho.eng | 13.7 | 0.412 |
| newstest2018-encs-ceseng.ces.eng | 21.2 | 0.486 |
| newstest2018-ende-deueng.deu.eng | 31.5 | 0.564 |
| newstest2018-enet-esteng.est.eng | 19.7 | 0.473 |
| newstest2018-enfi-fineng.fin.eng | 15.1 | 0.418 |
| newstest2018-enru-ruseng.rus.eng | 21.3 | 0.490 |
| newstest2018-entr-tureng.tur.eng | 15.4 | 0.421 |
| newstest2018-enzh-zhoeng.zho.eng | 12.9 | 0.408 |
| newstest2019-deen-deueng.deu.eng | 27.0 | 0.529 |
| newstest2019-fien-fineng.fin.eng | 17.2 | 0.438 |
| newstest2019-guen-gujeng.guj.eng | 9.0 | 0.342 |
| newstest2019-lten-liteng.lit.eng | 22.6 | 0.512 |
| newstest2019-ruen-ruseng.rus.eng | 24.1 | 0.503 |
| newstest2019-zhen-zhoeng.zho.eng | 13.9 | 0.427 |
| newstestB2016-enfi-fineng.fin.eng | 15.2 | 0.428 |
| newstestB2017-enfi-fineng.fin.eng | 16.8 | 0.442 |
| newstestB2017-fien-fineng.fin.eng | 16.8 | 0.442 |
| Tatoeba-test.abk-eng.abk.eng | 2.4 | 0.190 |
| Tatoeba-test.ady-eng.ady.eng | 1.1 | 0.111 |
| Tatoeba-test.afh-eng.afh.eng | 1.7 | 0.108 |
| Tatoeba-test.afr-eng.afr.eng | 53.0 | 0.672 |
| Tatoeba-test.akl-eng.akl.eng | 5.9 | 0.239 |
| Tatoeba-test.amh-eng.amh.eng | 25.6 | 0.464 |
| Tatoeba-test.ang-eng.ang.eng | 11.7 | 0.289 |
| Tatoeba-test.ara-eng.ara.eng | 26.4 | 0.443 |
| Tatoeba-test.arg-eng.arg.eng | 35.9 | 0.473 |
| Tatoeba-test.asm-eng.asm.eng | 19.8 | 0.365 |
| Tatoeba-test.ast-eng.ast.eng | 31.8 | 0.467 |
| Tatoeba-test.avk-eng.avk.eng | 0.4 | 0.119 |
| Tatoeba-test.awa-eng.awa.eng | 9.7 | 0.271 |
| Tatoeba-test.aze-eng.aze.eng | 37.0 | 0.542 |
| Tatoeba-test.bak-eng.bak.eng | 13.9 | 0.395 |
| Tatoeba-test.bam-eng.bam.eng | 2.2 | 0.094 |
| Tatoeba-test.bel-eng.bel.eng | 36.8 | 0.549 |
| Tatoeba-test.ben-eng.ben.eng | 39.7 | 0.546 |
| Tatoeba-test.bho-eng.bho.eng | 33.6 | 0.540 |
| Tatoeba-test.bod-eng.bod.eng | 1.1 | 0.147 |
| Tatoeba-test.bre-eng.bre.eng | 14.2 | 0.303 |
| Tatoeba-test.brx-eng.brx.eng | 1.7 | 0.130 |
| Tatoeba-test.bul-eng.bul.eng | 46.0 | 0.621 |
| Tatoeba-test.cat-eng.cat.eng | 46.6 | 0.636 |
| Tatoeba-test.ceb-eng.ceb.eng | 17.4 | 0.347 |
| Tatoeba-test.ces-eng.ces.eng | 41.3 | 0.586 |
| Tatoeba-test.cha-eng.cha.eng | 7.9 | 0.232 |
| Tatoeba-test.che-eng.che.eng | 0.7 | 0.104 |
| Tatoeba-test.chm-eng.chm.eng | 7.3 | 0.261 |
| Tatoeba-test.chr-eng.chr.eng | 8.8 | 0.244 |
| Tatoeba-test.chv-eng.chv.eng | 11.0 | 0.319 |
| Tatoeba-test.cor-eng.cor.eng | 5.4 | 0.204 |
| Tatoeba-test.cos-eng.cos.eng | 58.2 | 0.643 |
| Tatoeba-test.crh-eng.crh.eng | 26.3 | 0.399 |
| Tatoeba-test.csb-eng.csb.eng | 18.8 | 0.389 |
| Tatoeba-test.cym-eng.cym.eng | 23.4 | 0.407 |
| Tatoeba-test.dan-eng.dan.eng | 50.5 | 0.659 |
| Tatoeba-test.deu-eng.deu.eng | 39.6 | 0.579 |
| Tatoeba-test.dsb-eng.dsb.eng | 24.3 | 0.449 |
| Tatoeba-test.dtp-eng.dtp.eng | 1.0 | 0.149 |
| Tatoeba-test.dws-eng.dws.eng | 1.6 | 0.061 |
| Tatoeba-test.egl-eng.egl.eng | 7.6 | 0.236 |
| Tatoeba-test.ell-eng.ell.eng | 55.4 | 0.682 |
| Tatoeba-test.enm-eng.enm.eng | 28.0 | 0.489 |
| Tatoeba-test.epo-eng.epo.eng | 41.8 | 0.591 |
| Tatoeba-test.est-eng.est.eng | 41.5 | 0.581 |
| Tatoeba-test.eus-eng.eus.eng | 37.8 | 0.557 |
| Tatoeba-test.ewe-eng.ewe.eng | 10.7 | 0.262 |
| Tatoeba-test.ext-eng.ext.eng | 25.5 | 0.405 |
| Tatoeba-test.fao-eng.fao.eng | 28.7 | 0.469 |
| Tatoeba-test.fas-eng.fas.eng | 7.5 | 0.281 |