Mistral-7B-dbnl-v0.1

This model is a fine-tuned version of mistralai/Mistral-7B-v0.1 on the DBNL Public Domain dataset, which contains public-domain Dutch literature, specifically historical texts that are at least 140 years old.

Model description

Mistral-7B-dbnl-v0.1 is intended for generating and modeling historical Dutch literary text, and was trained on a wide array of historical Dutch texts. It was fine-tuned with LoRA (Low-Rank Adaptation), which trains low-rank update matrices on top of the frozen base weights instead of updating every parameter of the base model.

Intended uses & limitations

I created this mostly for fun, for cultural learning, and to share with others.

This model can be used by researchers, historians, and natural language processing practitioners interested in Dutch literature and historical text analysis, for tasks such as text generation and language modeling.
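A minimal sketch of how text generation with this model might look, assuming the repository `jvdgoltz/Mistral-7B-dbnl-v0.1` hosts LoRA adapter weights that are loaded on top of the base model with PEFT (the card lists PEFT among the framework versions but does not say this explicitly). The function names `load_model` and `generate`, the dtype, and the sampling settings are illustrative choices, not taken from the card; `device_map="auto"` additionally assumes the `accelerate` package is installed.

```python
BASE_MODEL_ID = "mistralai/Mistral-7B-v0.1"
# Assumption: this repository contains a LoRA adapter, not merged weights.
ADAPTER_ID = "jvdgoltz/Mistral-7B-dbnl-v0.1"


def load_model(base_id=BASE_MODEL_ID, adapter_id=ADAPTER_ID):
    """Load the base model and attach the adapter (hypothetical helper)."""
    # Heavy dependencies are imported lazily so defining the helper is cheap.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(base_id)
    base = AutoModelForCausalLM.from_pretrained(
        base_id,
        torch_dtype=torch.bfloat16,  # illustrative choice to reduce memory use
        device_map="auto",           # requires the accelerate package
    )
    model = PeftModel.from_pretrained(base, adapter_id)
    model.eval()
    return tokenizer, model


def generate(prompt, tokenizer, model, max_new_tokens=100):
    """Sample a continuation of `prompt` (hypothetical helper)."""
    import torch

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=True,
            temperature=0.8,  # illustrative sampling temperature
        )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `tokenizer, model = load_model()` and then `generate("Er was eens", tokenizer, model)` would download the weights and sample a continuation. Because the base model is a plain causal language model without instruction tuning, prompts work best as the opening of a passage to be continued.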

Limitations

- The model is trained on historical texts, which may contain biases and outdated language that do not reflect current norms or values.

- The model's performance and relevance may be limited to the context of Dutch literature and historical texts.

Training and evaluation data

The model was trained on the DBNL Public Domain dataset, which includes a variety of texts such as books, poems, songs, and other documentation, ensuring a rich source of linguistic and cultural heritage.

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 2

- gradient_accumulation_steps: 8

- total_train_batch_size: 16

- total_eval_batch_size: 16

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 2000

- num_epochs: 3.0
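The totals in the list follow from the per-device values, and the schedule is a linear warmup followed by cosine decay. The sketch below reproduces the batch-size arithmetic and the shape of such a schedule in plain Python. It assumes the common formulation (linear ramp from zero, then half-cosine decay to zero); the total number of optimizer steps is not stated in the card, so `TOTAL_STEPS` is a hypothetical value used only for illustration.

```python
import math

# Values copied from the hyperparameter list above.
PER_DEVICE_TRAIN_BATCH = 1
PER_DEVICE_EVAL_BATCH = 8
NUM_DEVICES = 2
GRAD_ACCUM_STEPS = 8
BASE_LR = 5e-5
WARMUP_STEPS = 2000

# Hypothetical: the card does not report the total number of optimizer steps.
TOTAL_STEPS = 20_000


def effective_train_batch(per_device, devices, accum_steps):
    """Sequences contributing to one optimizer update."""
    return per_device * devices * accum_steps


def effective_eval_batch(per_device, devices):
    """Sequences evaluated per step across all devices."""
    return per_device * devices


def cosine_lr(step, total_steps=TOTAL_STEPS, base_lr=BASE_LR,
              warmup_steps=WARMUP_STEPS):
    """Learning rate at `step`: linear warmup, then cosine decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


train_total = effective_train_batch(
    PER_DEVICE_TRAIN_BATCH, NUM_DEVICES, GRAD_ACCUM_STEPS)  # 1 * 2 * 8
eval_total = effective_eval_batch(PER_DEVICE_EVAL_BATCH, NUM_DEVICES)  # 8 * 2
print(train_total, eval_total)
```

With these definitions the learning rate reaches its peak of 5e-05 at step 2000 and decays from there; where the decay ends depends on the real step count, which the card does not give.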

Adapter configuration

The model uses LoRA with the following configuration:

- lora_alpha: 2048

- r: 1024

- lora_dropout: 0.0

- inference_mode: true

- init_lora_weights: true

- peft_type: "LORA"

- target_modules: ["q_proj", "v_proj", "up_proj", "o_proj", "k_proj", "gate_proj"]

- task_type: "CAUSAL_LM"

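This configuration applies LoRA to all four attention projections (q_proj, k_proj, v_proj, o_proj) and to the gate_proj and up_proj layers of the MLP; down_proj is not targeted. The adapters specialize the pre-trained layers for causal language modeling while the base weights stay frozen, and with lora_alpha at twice the rank the standard LoRA scaling factor (lora_alpha / r) is 2.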
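To give a sense of scale, the following sketch estimates how many trainable parameters this adapter configuration implies. The layer dimensions are assumptions taken from the published Mistral-7B-v0.1 configuration (hidden size 4096, MLP intermediate size 14336, 32 layers, 8 key/value heads of dimension 128), not from this card. The count uses the usual LoRA parameterization, where each adapted linear layer of shape (in, out) adds two matrices with r * (in + out) entries in total.

```python
# Assumed Mistral-7B-v0.1 dimensions (not stated in this card).
HIDDEN = 4096
INTERMEDIATE = 14336
NUM_LAYERS = 32
KV_DIM = 8 * 128  # 8 key/value heads with head dimension 128

# Values from the adapter configuration above.
R = 1024
LORA_ALPHA = 2048

# (in_features, out_features) for each targeted module in one layer.
TARGET_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
}


def lora_param_count(in_features, out_features, r):
    """Parameters in the A (r x in) and B (out x r) matrices of one adapter."""
    return r * (in_features + out_features)


per_layer = sum(lora_param_count(i, o, R) for i, o in TARGET_SHAPES.values())
total = per_layer * NUM_LAYERS
scaling = LORA_ALPHA / R  # standard LoRA scaling factor

print(f"adapter parameters per layer: {per_layer:,}")
print(f"adapter parameters in total:  {total:,}")
print(f"scaling factor alpha / r:     {scaling}")
```

Under these assumptions the adapter holds roughly 2.08 billion parameters, which is large for a LoRA adapter on a model of about 7 billion parameters. The rank of 1024 therefore trades much of LoRA's usual parameter savings for additional capacity.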

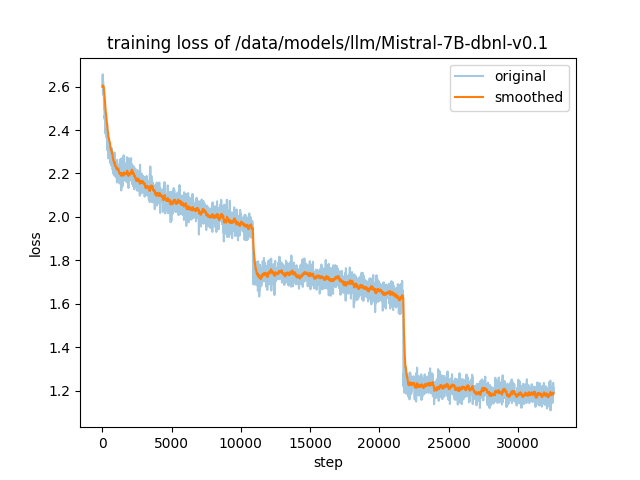

Training results

Framework versions

- PEFT 0.7.1

- Transformers 4.37.1

- Pytorch 2.1.1+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

The model applies LoRA fine-tuning to historical texts and is offered as a resource for exploring Dutch literature and linguistics.


Model repository: jvdgoltz/Mistral-7B-dbnl-v0.1 (base model: mistralai/Mistral-7B-v0.1)