Update README.md

README.md

|

@@ -19,7 +19,8 @@ language:

|

|

| 19 |

|

| 20 |

Lamarck-14B version 0.3 is strongly based on [arcee-ai/Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small) as a diffuse influence for prose and reasoning. Arcee's pioneering use of distillation and innovative merge techniques create a diverse knowledge pool for its models.

|

| 21 |

|

| 22 |

-

###

|

|

|

|

| 23 |

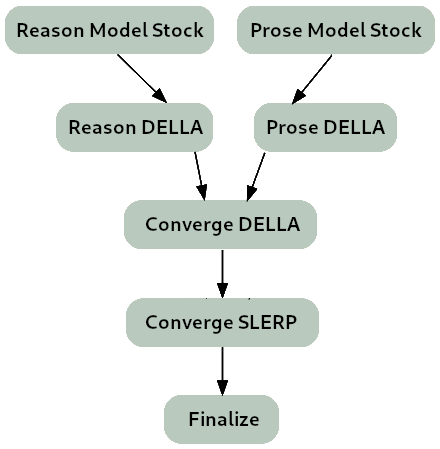

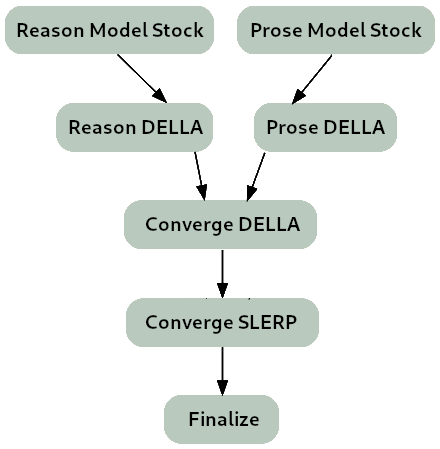

- Two model_stocks used to begin specialized branches for reasoning and prose quality.

|

| 24 |

- For refinement on Virtuoso as a base model, DELLA and SLERP include the model_stocks while re-emphasizing selected ancestors.

|

| 25 |

- For integration, a SLERP merge of Virtuoso with the converged branches.

|

|

@@ -36,3 +37,157 @@ Lamarck-14B version 0.3 is strongly based on [arcee-ai/Virtuoso-Small](https://h

|

|

| 36 |

- **[CultriX/Qwen2.5-14B-Wernicke](http://huggingface.co/CultriX/Qwen2.5-14B-Wernicke)** - A top performer for Arc and GPQA, Wernicke is re-emphasized in small but highly-ranked portions of the model.

|

| 37 |

|

| 38 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 19 |

|

| 20 |

Lamarck-14B version 0.3 is strongly based on [arcee-ai/Virtuoso-Small](https://huggingface.co/arcee-ai/Virtuoso-Small) as a diffuse influence for prose and reasoning. Arcee's pioneering use of distillation and innovative merge techniques create a diverse knowledge pool for its models.

|

| 21 |

|

| 22 |

+

### Overview:

|

| 23 |

+

|

| 24 |

- Two model_stocks used to begin specialized branches for reasoning and prose quality.

|

| 25 |

- For refinement on Virtuoso as a base model, DELLA and SLERP include the model_stocks while re-emphasizing selected ancestors.

|

| 26 |

- For integration, a SLERP merge of Virtuoso with the converged branches.

|

|

|

|

| 37 |

- **[CultriX/Qwen2.5-14B-Wernicke](http://huggingface.co/CultriX/Qwen2.5-14B-Wernicke)** - A top performer for ARC and GPQA, Wernicke is re-emphasized in small but highly ranked portions of the model.
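In the `lamarck-14b-reason-della` merge below, Wernicke enters with `density: 0.20` and `weight: 0.30`, so only a small share of its parameter deltas is kept. DELLA's drop step decides which deltas survive by sampling, with keep probabilities that grow with magnitude rank. The sketch below is a simplified illustration of that step only, not mergekit's code. It makes the following assumptions:

- The task vector is a flat list of floats and is ranked as a whole.
- Keep probabilities are spread linearly from `density - epsilon` (smallest magnitude) to `density + epsilon` (largest).
- Survivors are optionally rescaled by `1 / p_keep`.

The function name `magprune` and its `rescale` flag are hypothetical. The configs below set `rescale: false`, which presumably disables that rescaling; passing `rescale=False` here mimics that assumption.

```python
import random
from typing import List, Optional


def magprune(
    delta: List[float],
    density: float,
    epsilon: float,
    rescale: bool = True,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Simplified DELLA-style drop step for one task vector (model minus base).

    Entries with larger magnitude get a higher keep probability, spread linearly
    over [density - epsilon, density + epsilon] by magnitude rank.
    """
    if not (0.0 < density - epsilon and density + epsilon <= 1.0):
        raise ValueError("density +/- epsilon must lie in (0, 1]")
    if rng is None:
        rng = random.Random(0)  # fixed seed so the sketch is reproducible
    n = len(delta)
    # Rank entries by absolute value: rank 0 is the smallest magnitude.
    order = sorted(range(n), key=lambda i: abs(delta[i]))
    rank = [0] * n
    for r, i in enumerate(order):
        rank[i] = r
    pruned = []
    for i, d in enumerate(delta):
        # Fraction along the rank axis; a lone entry sits in the middle (p_keep = density).
        frac = rank[i] / (n - 1) if n > 1 else 0.5
        p_keep = (density - epsilon) + 2.0 * epsilon * frac
        if rng.random() < p_keep:
            # Optionally divide by p_keep so each entry's expected value is unchanged.
            pruned.append(d / p_keep if rescale else d)
        else:
            pruned.append(0.0)
    return pruned
```

With Wernicke's `density: 0.20` and the shared `epsilon: 0.08`, keep probabilities in this sketch range from 0.12 to 0.28. On average, about a fifth of its deltas would survive.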

### Merge Strategy:

```yaml
name: lamarck-14b-reason-della      # This contributes the knowledge and reasoning pool, later to be merged
merge_method: della                 # with the dominant instruction-following model
base_model: arcee-ai/Virtuoso-Small
tokenizer_source: arcee-ai/Virtuoso-Small
parameters:
  int8_mask: false
  normalize: true
  rescale: false
  density: 0.30
  weight: 0.50
  epsilon: 0.08
  lambda: 1.00
models:
  - model: CultriX/SeQwence-14B-EvolMerge
    parameters:
      density: 0.70
      weight: 0.90
  - model: sometimesanotion/lamarck-14b-reason-model_stock
    parameters:
      density: 0.90
      weight: 0.60
  - model: CultriX/Qwen2.5-14B-Wernicke
    parameters:
      density: 0.20
      weight: 0.30
dtype: bfloat16
out_dtype: bfloat16
---
name: lamarck-14b-prose-della       # This contributes the prose, later to be merged
merge_method: della                 # with the dominant instruction-following model
base_model: arcee-ai/Virtuoso-Small
tokenizer_source: arcee-ai/Virtuoso-Small
parameters:
  int8_mask: false
  normalize: true
  rescale: false
  density: 0.30
  weight: 0.50
  epsilon: 0.08
  lambda: 0.95
models:
  - model: sthenno-com/miscii-14b-1028
    parameters:
      density: 0.40
      weight: 0.90
  - model: sometimesanotion/lamarck-14b-prose-model_stock
    parameters:
      density: 0.60
      weight: 0.70
  - model: underwoods/medius-erebus-magnum-14b
dtype: bfloat16
out_dtype: bfloat16
---
name: lamarck-14b-converge-della    # This is the strongest control point to quickly
merge_method: della                 # re-balance reasoning vs. prose
base_model: arcee-ai/Virtuoso-Small
tokenizer_source: arcee-ai/Virtuoso-Small
parameters:
  int8_mask: false
  normalize: true
  rescale: false
  density: 0.30
  weight: 0.50
  epsilon: 0.08
  lambda: 1.00
models:
  - model: sometimesanotion/lamarck-14b-reason-della
    parameters:
      density: 0.80
      weight: 1.00
  - model: arcee-ai/Virtuoso-Small
    parameters:
      density: 0.40
      weight: 0.50
  - model: sometimesanotion/lamarck-14b-prose-della
    parameters:
      density: 0.10
      weight: 0.40
dtype: bfloat16
out_dtype: bfloat16
---
name: lamarck-14b-converge           # Virtuoso has good capabilities all-around; it is 100% of the first
merge_method: slerp                  # two layers, and blends into the reasoning+prose convergence
base_model: arcee-ai/Virtuoso-Small  # for some interesting boosts
tokenizer_source: base
parameters:
  t: [ 0.00, 0.60, 0.80, 0.80, 0.80, 0.70, 0.40 ]
slices:
  - sources:
      - layer_range: [ 0, 2 ]
        model: arcee-ai/Virtuoso-Small
      - layer_range: [ 0, 2 ]
        model: merges/lamarck-14b-converge-della
    t: [ 0.00, 0.00 ]
  - sources:
      - layer_range: [ 2, 8 ]
        model: arcee-ai/Virtuoso-Small
      - layer_range: [ 2, 8 ]
        model: merges/lamarck-14b-converge-della
    t: [ 0.00, 0.60 ]
  - sources:
      - layer_range: [ 8, 16 ]
        model: arcee-ai/Virtuoso-Small
      - layer_range: [ 8, 16 ]
        model: merges/lamarck-14b-converge-della
    t: [ 0.60, 0.70 ]
  - sources:
      - layer_range: [ 16, 24 ]
        model: arcee-ai/Virtuoso-Small
      - layer_range: [ 16, 24 ]
        model: merges/lamarck-14b-converge-della
    t: [ 0.70, 0.70 ]
  - sources:
      - layer_range: [ 24, 32 ]
        model: arcee-ai/Virtuoso-Small
      - layer_range: [ 24, 32 ]
        model: merges/lamarck-14b-converge-della
    t: [ 0.70, 0.70 ]
  - sources:
      - layer_range: [ 32, 40 ]
        model: arcee-ai/Virtuoso-Small
      - layer_range: [ 32, 40 ]
        model: merges/lamarck-14b-converge-della
    t: [ 0.70, 0.60 ]
  - sources:
      - layer_range: [ 40, 48 ]
        model: arcee-ai/Virtuoso-Small
      - layer_range: [ 40, 48 ]
        model: merges/lamarck-14b-converge-della
    t: [ 0.60, 0.40 ]
dtype: bfloat16
out_dtype: bfloat16
---
name: lamarck-14b-finalize
merge_method: ties
base_model: Qwen/Qwen2.5-14B
tokenizer_source: Qwen/Qwen2.5-14B-Instruct
parameters:
  int8_mask: false
  normalize: true
  rescale: false
  density: 1.00
  weight: 1.00
models:
  - model: merges/lamarck-14b-converge
dtype: bfloat16
out_dtype: bfloat16
---
```
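The `lamarck-14b-converge` step blends Virtuoso with `merges/lamarck-14b-converge-della` by SLERP. Its `t` values are given as lists, both globally and per slice. The rough sketch below makes three assumptions:

1. A `t` list is a gradient whose anchors are spread evenly from the first to the last layer and linearly interpolated in between.
2. `t = 0` keeps the base model (Virtuoso) and `t = 1` keeps the other model.
3. Each layer's weights can be treated as a flat list of floats.

Under those assumptions, the code expands a gradient to one value per layer and applies textbook SLERP layer by layer. `expand_gradient`, `slerp`, and `slerp_layers` are hypothetical helpers, and mergekit's own gradient handling and SLERP may differ in detail.

```python
import math
from typing import List


def expand_gradient(anchors: List[float], num_layers: int) -> List[float]:
    """Linearly interpolate evenly spaced anchor values into one value per layer."""
    if not anchors:
        raise ValueError("need at least one anchor value")
    if len(anchors) == 1 or num_layers <= 1:
        return [anchors[0]] * max(num_layers, 0)
    segments = len(anchors) - 1
    values = []
    for layer in range(num_layers):
        pos = layer / (num_layers - 1) * segments  # position on the anchor axis, 0..segments
        i = min(int(pos), segments - 1)
        frac = pos - i
        values.append(anchors[i] + (anchors[i + 1] - anchors[i]) * frac)
    return values


def slerp(t: float, a: List[float], b: List[float]) -> List[float]:
    """Spherical linear interpolation: t = 0 returns a, t = 1 returns b."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Direction is undefined for a zero vector; fall back to linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    # Cosine of the angle between the two directions, clamped against rounding error.
    cos_theta = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    cos_theta = max(-1.0, min(1.0, cos_theta))
    if abs(cos_theta) > 1.0 - 1e-6:
        # Nearly (anti)parallel: the SLERP weights are unstable, so use linear interpolation.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    theta = math.acos(cos_theta)
    w_a = math.sin((1 - t) * theta) / math.sin(theta)
    w_b = math.sin(t * theta) / math.sin(theta)
    return [w_a * x + w_b * y for x, y in zip(a, b)]


def slerp_layers(
    base: List[List[float]], other: List[List[float]], anchors: List[float]
) -> List[List[float]]:
    """Blend two models layer by layer, using a per-layer t expanded from `anchors`."""
    if len(base) != len(other):
        raise ValueError("models must have the same number of layers")
    ts = expand_gradient(anchors, len(base))
    return [slerp(t, a, b) for t, a, b in zip(ts, base, other)]
```

In this sketch, spreading the global anchors over 48 layers (the last slice ends at layer 48) gives the first layer `t = 0.00` (pure Virtuoso) and the last layer about `0.40`. A per-slice pair such as `[ 0.60, 0.70 ]` for layers 8 to 16 could be expanded the same way over that slice's 8 layers. For orthogonal inputs, `slerp(0.5, [1.0, 0.0], [0.0, 1.0])` lands on the arc at roughly `[0.7071, 0.7071]`, whereas a plain average would give `[0.5, 0.5]` and shrink the norm.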

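The `lamarck-14b-finalize` step runs `ties` against `Qwen/Qwen2.5-14B` with a single model, `density: 1.00`, `weight: 1.00` and `normalize: true`. The simplified TIES sketch below works in three stages:

1. Trim each task vector to its top-`density` magnitudes.
2. Elect a sign per parameter from the weighted sum.
3. Average only the contributions that agree with that sign.

It operates on flat lists of floats. `trim` and `ties_merge` are hypothetical names, and this is an illustration rather than mergekit's implementation. Under these assumptions, a single model at full density and weight contributes its whole delta unchanged. The arithmetic therefore reduces to `base + (model - base)`, which is the input model's own weights up to floating-point rounding.

```python
from typing import List


def trim(delta: List[float], density: float) -> List[float]:
    """Keep the top `density` fraction of entries by magnitude and zero the rest."""
    k = int(round(density * len(delta)))
    if k >= len(delta):
        return list(delta)
    top = sorted(range(len(delta)), key=lambda i: abs(delta[i]), reverse=True)[:k]
    keep = set(top)
    return [d if i in keep else 0.0 for i, d in enumerate(delta)]


def ties_merge(
    base: List[float],
    models: List[List[float]],
    weights: List[float],
    density: float = 1.0,
    normalize: bool = True,
) -> List[float]:
    """Simplified TIES merge of flattened weight vectors onto `base`."""
    # Task vectors: each model's difference from the base, trimmed by magnitude.
    deltas = [trim([m - b for m, b in zip(model, base)], density) for model in models]
    merged = []
    for j, b in enumerate(base):
        contribs = [w * d[j] for w, d in zip(weights, deltas)]
        # Elect the sign of the weighted sum; drop contributions that disagree with it.
        sign = 1.0 if sum(contribs) >= 0 else -1.0
        kept = [(c, w) for c, w in zip(contribs, weights) if c * sign > 0]
        mixed = sum(c for c, _ in kept)
        if normalize:
            # Divide by the total weight of the agreeing models (a weighted mean).
            total_w = sum(w for _, w in kept)
            mixed = mixed / total_w if total_w > 0 else 0.0
        merged.append(b + mixed)
    return merged
```

For instance, `ties_merge([1.0, 2.0, 3.0], [[1.5, 1.0, 3.0]], [1.0])` returns `[1.5, 1.0, 3.0]`, which is the single input model itself.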