title: alps

app_file: app.py

sdk: gradio

sdk_version: 4.44.0

Alps

Pipeline for OCRing PDFs and tables

This repository contains several OCR methods built on different libraries and models (Ragflow, DocTR, Unitable, YOLO).

Running Gradio:

Run python app.py in a terminal.

Installation:

Build the Docker image and run the container.

Clone this repository and install the required dependencies:

pip install -r requirements.txt --extra-index-url https://download.pytorch.org/whl/cu117

apt install weasyprint

Note: You need a GPU to run this code.
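Because the pipelines need a GPU, it can help to check that one is visible before starting a long run. The sketch below assumes the pipeline runs on PyTorch, which the cu117 PyTorch wheel index in the install command suggests but does not confirm. The helper name gpu_available is hypothetical and not part of this repository.

```python
import importlib.util


def gpu_available() -> bool:
    """Hypothetical helper: True if PyTorch is installed and sees a CUDA device."""
    # Assumption: the OCR/table models run on PyTorch (suggested by the cu117 index URL).
    if importlib.util.find_spec("torch") is None:
        return False  # PyTorch is not installed, so no CUDA check is possible
    import torch

    return torch.cuda.is_available()


if __name__ == "__main__":
    if gpu_available():
        import torch

        print("CUDA device:", torch.cuda.get_device_name(0))
    else:
        print("No CUDA GPU detected; the pipelines are expected to need one.")
```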

Example Usage

Run python main.py from inside the repository directory. Provide the path to the test file (the file must be placed inside the repository, and its path must be relative to the repository root (alps)). Next, provide a folder for intermediate outputs of the run (for example, cell bounding boxes drawn on the table and table detection results drawn on the PDF), and specify which component to run.

Outputs are printed to the terminal.

usage: main.py [-h] [--test_file TEST_FILE] [--debug_folder DEBUG_FOLDER] [--englishFlag ENGLISHFLAG] [--denoise DENOISE] ocr
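The usage line above corresponds to an argparse parser roughly like the one below. This is a hypothetical reconstruction, not the repository's main.py: the help texts, defaults and the str_to_bool helper are assumptions. The helper is used because a plain type=bool would turn any non-empty string, including "False", into True.

```python
import argparse

# Component names taken from the descriptions in this README.
COMPONENTS = ["ocr1", "table1", "table2", "pdftable1", "pdftable2", "pdftable3", "pdftable4"]


def str_to_bool(value: str) -> bool:
    """Hypothetical helper: parse 'True'/'False'-style strings."""
    lowered = value.strip().lower()
    if lowered in {"true", "1", "yes"}:
        return True
    if lowered in {"false", "0", "no"}:
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="main.py")
    parser.add_argument("--test_file", help="Input file, relative to the repository root")
    parser.add_argument("--debug_folder", help="Folder for intermediate outputs")
    # Assumed default: non-English (e.g. German) documents unless the flag is set.
    parser.add_argument("--englishFlag", type=str_to_bool, default=False)
    # Assumed meaning: whether to denoise the input before OCR.
    parser.add_argument("--denoise", type=str_to_bool, default=False)
    # Positional component name, shown as 'ocr' in the usage line.
    parser.add_argument("ocr", help="Component to run: " + ", ".join(COMPONENTS))
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(
        ["ocr1", "--test_file", "TestingFiles/OCRTest3English.pdf",
         "--debug_folder", "./res/ocrdebug1/", "--englishFlag", "True"]
    )
    print(args.ocr, args.test_file, args.englishFlag)
```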

Description of the components:

ocr1

Input: path to a PDF file. Output: a dictionary mapping each page to a list of LineAnnotations. Each LineAnnotation contains the bbox of one text line and a list of its child WordAnnotations; each WordAnnotation contains a bbox and the text inside it. What it does: runs the Ragflow text-line detector, then OCR with DocTR.

Example:

python main.py ocr1 --test_file TestingFiles/OCRTest1German.pdf --debug_folder ./res/ocrdebug1/

python main.py ocr1 --test_file TestingFiles/OCRTest3English.pdf --debug_folder ./res/ocrdebug1/ --englishFlag True
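The nested ocr1 output can be turned back into plain text by walking pages, then lines, then words. The sketch below models that shape with hypothetical dataclasses: the class names follow the description above, but the field names (bbox, children, text) and the integer page keys are assumptions, not the repository's actual types. Words are sorted by xmin in case a line's children are not already in left-to-right order.

```python
from dataclasses import dataclass, field
from typing import Dict, List

BBox = List[float]  # [xmin, ymin, xmax, ymax]


@dataclass
class WordAnnotation:  # hypothetical stand-in for the repository's type
    bbox: BBox
    text: str


@dataclass
class LineAnnotation:  # hypothetical stand-in for the repository's type
    bbox: BBox
    children: List[WordAnnotation] = field(default_factory=list)


def page_text(lines: List[LineAnnotation]) -> str:
    """Join words left to right into lines, and lines into one page string."""
    return "\n".join(
        " ".join(w.text for w in sorted(line.children, key=lambda w: w.bbox[0]))
        for line in lines
    )


def document_text(result: Dict[int, List[LineAnnotation]]) -> Dict[int, str]:
    """Map each page key to its reconstructed text."""
    return {page: page_text(lines) for page, lines in sorted(result.items())}


if __name__ == "__main__":
    result = {
        0: [
            LineAnnotation(
                bbox=[10, 10, 200, 30],
                children=[WordAnnotation([10, 10, 60, 30], "Hello"),
                          WordAnnotation([70, 10, 200, 30], "world")],
            )
        ]
    }
    print(document_text(result))  # {0: 'Hello world'}
```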

table1

Input: file path to an image of a cropped table. Output: parsed table in HTML form. What it does: uses Unitable + DocTR.

python main.py table1 --test_file cropped_table.png --debug_folder ./res/table1/

table2

Input: file path to an image of a cropped table. Output: parsed table in HTML form. What it does: uses Unitable.

python main.py table2 --test_file cropped_table.png --debug_folder ./res/table2/
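The table components return the parsed table as HTML. If the cells are needed as plain rows (for example, to write a CSV), Python's standard html.parser is enough for simple tables. This is a minimal sketch that assumes well-formed tr/td/th tags with explicit closing tags; rowspan and colspan are ignored, so merged cells are not expanded. The class and function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import List


class TableToRows(HTMLParser):
    """Collect the text of each <td>/<th> cell, grouped by <tr> row."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: List[List[str]] = []
        self._row: List[str] = []
        self._cell: List[str] = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th"):
            self._in_cell = True
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._in_cell:
            # Text from nested tags (e.g. <b>) is concatenated into one cell.
            self._row.append("".join(self._cell).strip())
            self._in_cell = False
        elif tag == "tr":
            self.rows.append(self._row)
            self._row = []

    def handle_data(self, data):
        if self._in_cell:
            self._cell.append(data)


def html_table_to_rows(html: str) -> List[List[str]]:
    parser = TableToRows()
    parser.feed(html)
    parser.close()
    return parser.rows


if __name__ == "__main__":
    html = "<table><tr><th>Name</th><th>Age</th></tr><tr><td>Anna</td><td>42</td></tr></table>"
    print(html_table_to_rows(html))  # [['Name', 'Age'], ['Anna', '42']]
```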

pdftable1

Input: PDF file path. Output: parsed table in HTML form. What it does: uses Unitable + DocTR.

python main.py pdftable1 --test_file TestingFiles/OCRTest5English.pdf --debug_folder ./res/table_debug1/

pdftable2

Input: PDF file path. Output: parsed table in HTML form. What it does: detects tables and parses them; runs full Unitable table detection.

python main.py pdftable2 --test_file TestingFiles/OCRTest5English.pdf --debug_folder ./res/table_debug2/

pdftable3

Input: PDF file path. Output: parsed table in HTML form. What it does: detects tables with YOLO, then parses them with Unitable + DocTR.

python main.py pdftable3 --test_file TestingFiles/TableOCRTestEnglish.pdf --debug_folder ./res/poor_relief2

pdftable4

Input: PDF file path. Output: parsed table in HTML form. What it does: detects tables with YOLO, then runs full DocTR table detection.

python main.py pdftable4 --test_file TestingFiles/TableOCRTestEasier.pdf --debug_folder ./res/table_debug3/
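To compare the four PDF table pipelines on the same document, they can be run one after another with the same test file. The sketch below checks that the file lies inside the repository (main.py expects a repository-relative path) and then calls main.py once per component. The function names, the ./res/compare_<component>/ debug folders, and creating those folders up front are all assumptions for illustration, not behavior of this repository.

```python
import subprocess
import sys
from pathlib import Path
from typing import List

PDF_COMPONENTS = ["pdftable1", "pdftable2", "pdftable3", "pdftable4"]


def repo_relative(path: str, repo_root: str) -> str:
    """Return `path` relative to `repo_root`; raise ValueError if it lies outside."""
    root = Path(repo_root).resolve()
    target = (root / path).resolve()  # relative inputs are interpreted from the repo root
    return target.relative_to(root).as_posix()


def build_command(component: str, test_file: str, debug_folder: str) -> List[str]:
    """Assemble one main.py invocation, mirroring the examples in this README."""
    return [sys.executable, "main.py", component,
            "--test_file", test_file, "--debug_folder", debug_folder]


def run_all(test_file: str, repo_root: str = ".") -> None:
    """Hypothetical helper: run every pdftable component on the same PDF."""
    rel = repo_relative(test_file, repo_root)
    for component in PDF_COMPONENTS:
        debug_folder = f"./res/compare_{component}/"  # assumed naming scheme
        # Assumption: main.py may not create the debug folder itself.
        Path(repo_root, debug_folder).mkdir(parents=True, exist_ok=True)
        cmd = build_command(component, rel, debug_folder)
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, cwd=repo_root, check=True)
```

For example, calling run_all("TestingFiles/OCRTest5English.pdf") from the repository root runs pdftable1 through pdftable4 in turn and stops at the first component that exits with an error.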

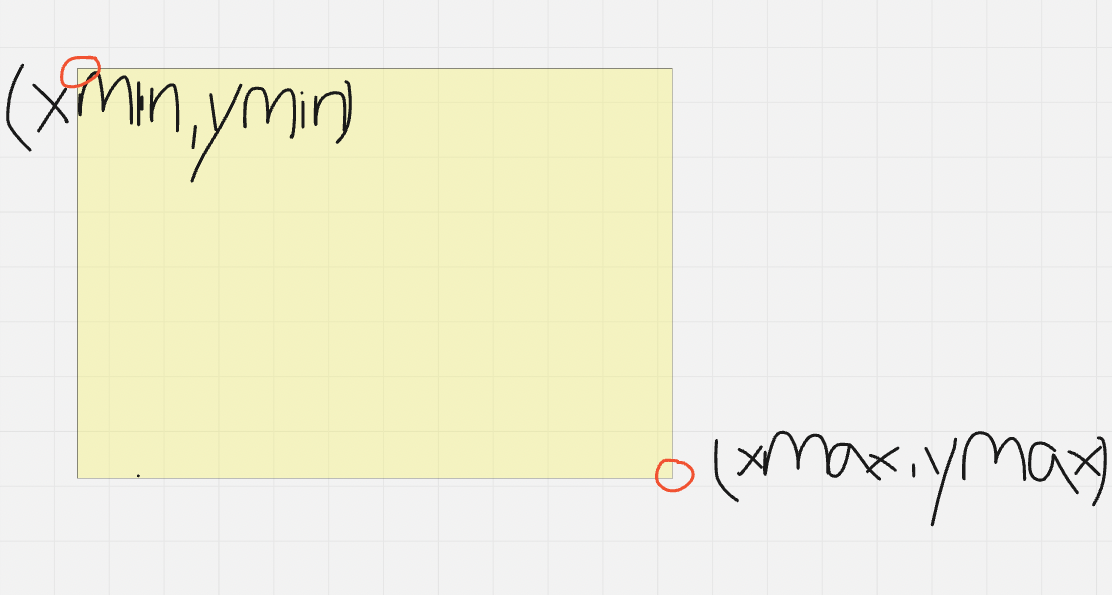

bbox

Bounding boxes are ordered as [xmin, ymin, xmax, ymax]. Coordinates start at (0, 0), the upper-left corner of the image, so x grows to the right and y grows downwards.

xmin, ymin: upper-left corner of the box. xmax, ymax: lower-right corner of the box.
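With the origin at the top-left and y growing downwards, a smaller ymin means a box sits higher on the page. The helpers below (hypothetical names) compute box sizes and a naive reading order: top to bottom, then left to right. The sort is only a sketch: words on the same visual line whose ymin values differ by a few pixels will not be grouped together. The same [xmin, ymin, xmax, ymax] order also matches the (left, upper, right, lower) box taken by Pillow's Image.crop, so, assuming the coordinates are pixels of that image, a bbox can be passed to it directly to cut out a region.

```python
from typing import List, Sequence

# [xmin, ymin, xmax, ymax]; origin (0, 0) is the image's upper-left corner.
BBox = Sequence[float]


def width(box: BBox) -> float:
    return box[2] - box[0]


def height(box: BBox) -> float:
    # y grows downwards, so the bottom edge ymax is >= the top edge ymin.
    return box[3] - box[1]


def is_valid(box: BBox) -> bool:
    """A well-formed box has its upper-left corner above and left of its lower-right corner."""
    return box[0] <= box[2] and box[1] <= box[3]


def reading_order(boxes: List[BBox]) -> List[BBox]:
    """Naive reading order: sort by ymin (top first), then by xmin (left first)."""
    return sorted(boxes, key=lambda b: (b[1], b[0]))


if __name__ == "__main__":
    boxes = [[50, 10, 90, 20], [0, 40, 30, 50], [0, 10, 40, 20]]
    print(reading_order(boxes))  # [[0, 10, 40, 20], [50, 10, 90, 20], [0, 40, 30, 50]]
```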