Flow Matching for Conditional Text Generation in a Few Sampling Steps (EACL2024)

This is the official checkpoint for the paper "Flow Matching for Conditional Text Generation in a Few Sampling Steps" (EACL 2024).

Vincent Tao Hu, Di Wu, Yuki M Asano, Pascal Mettes, Basura Fernando, Björn Ommer, Cees G.M. Snoek

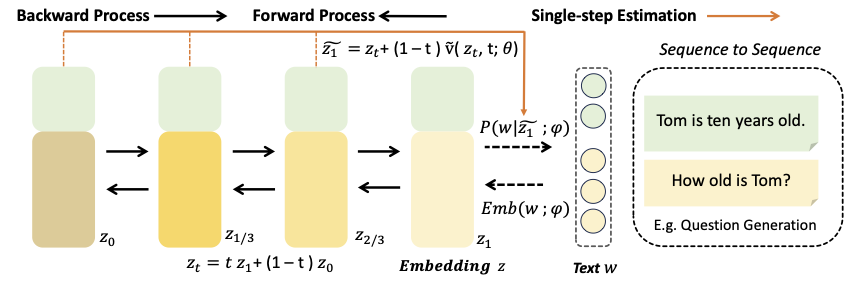

Diffusion models are a promising tool for high-quality text generation. However, current models face multiple drawbacks, including slow sampling, noise schedule sensitivity, and misalignment between the training and sampling stages. In this paper, we introduce FlowSeq, which bypasses all of these drawbacks by leveraging flow matching for conditional text generation. FlowSeq can generate text in a few steps by training with a novel anchor loss, removing the need for the expensive hyperparameter optimization of the noise schedule that is prevalent in diffusion models. We extensively evaluate our proposed method and show competitive performance on tasks such as question generation, open-domain dialogue, and paraphrasing.
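To make the "few sampling steps" idea concrete, here is a minimal NumPy sketch of generic flow matching on continuous vectors (assumed here to stand in for something like token embeddings). It uses a straight-line path between a noise sample `x0` and a data sample `x1`, regresses a velocity model onto the constant velocity `x1 - x0`, and then samples by integrating that velocity with a small, fixed number of Euler steps. All function names are hypothetical, the conditioning on the source sentence is omitted, and FlowSeq's anchor loss is not shown; this is an illustration of the underlying technique, not the paper's implementation.

```python
import numpy as np


def interpolate(x0, x1, t):
    """Point at time t in [0, 1] on the straight path from noise x0 to data x1."""
    return (1.0 - t) * x0 + t * x1


def target_velocity(x0, x1):
    """Velocity of the straight path; it is constant in t."""
    return x1 - x0


def flow_matching_loss(velocity_fn, x0, x1, t):
    """Mean squared error between the predicted velocity at x_t and the target velocity.

    velocity_fn(x, t) is a hypothetical stand-in for a learned network.
    """
    xt = interpolate(x0, x1, t)
    pred = velocity_fn(xt, t)
    return float(np.mean((pred - target_velocity(x0, x1)) ** 2))


def euler_sample(velocity_fn, x0, num_steps):
    """Integrate dx/dt = v(x, t) from t = 0 to t = 1 using num_steps Euler steps."""
    x = np.array(x0, dtype=float)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        x = x + dt * velocity_fn(x, t)
    return x


if __name__ == "__main__":
    x0 = np.array([0.0, 1.0])   # toy "noise" sample
    x1 = np.array([2.0, -1.0])  # toy "data" sample

    # A perfectly trained model on this single pair predicts the constant velocity.
    ideal = lambda x, t: x1 - x0
    print(flow_matching_loss(ideal, x0, x1, t=0.3))
    print(euler_sample(ideal, x0, num_steps=1))
```

When the learned velocity field is (close to) constant along straight paths, even a single Euler step lands on the target, which is why straight flows lend themselves to sampling in only a few steps. Real models only approximate this, so in practice a handful of steps is used rather than one.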

🎓 Citation

@inproceedings{HuEACL2024,
  title = {Flow Matching for Conditional Text Generation in a Few Sampling Steps},
  author = {Vincent Tao Hu and Di Wu and Yuki M Asano and Pascal Mettes and Basura Fernando and Björn Ommer and Cees G M Snoek},
  year = {2024},
  date = {2024-03-27},
  booktitle = {EACL},
  tppubtype = {inproceedings}
}

🎫 License

This work is licensed under the Apache License, Version 2.0 (see the LICENSE file).

By downloading and using the code and model, you agree to the terms in the LICENSE.