---
library_name: transformers
tags:
- mergekit
- merge
license: apache-2.0
base_model:
- arcee-ai/Virtuoso-Small
- CultriX/SeQwence-14B-EvolMerge
- CultriX/Qwen2.5-14B-Wernicke
- sometimesanotion/lamarck-14b-prose-model_stock
- sometimesanotion/lamarck-14b-if-model_stock
- sometimesanotion/lamarck-14b-reason-model_stock
language:
- en
---

Lamarck-14B version 0.3 is strongly based on arcee-ai/Virtuoso-Small, which serves as a diffuse influence on prose and reasoning. Arcee's pioneering use of distillation and innovative merge techniques creates a diverse knowledge pool for its models.

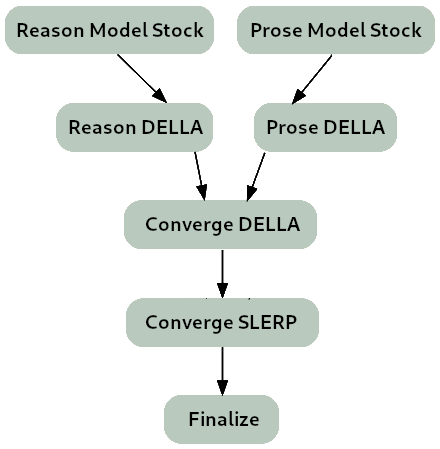

Merge Strategy:

- Two model_stocks used to begin specialized branches for reasoning and prose quality.

- For refinement, with Virtuoso as the base model, DELLA and SLERP merges include the model_stocks while re-emphasizing selected ancestors.

- For integration, a SLERP merge of Virtuoso with the converged branches.

- For finalization, a TIES merge.
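For readers unfamiliar with the merge methods named above, here is a minimal pure-Python sketch of two of them, SLERP and TIES, applied to one flattened weight tensor stored as a list of floats. It follows the commonly published formulations rather than mergekit's own code. The function names, the `density` default, and the omission of TIES's per-model weights and scaling factor are simplifying assumptions; the parameters actually used for Lamarck are not given in this card, so none are assumed.

```python
import math
from typing import List

Vector = List[float]


def slerp(t: float, v0: Vector, v1: Vector, eps: float = 1e-8) -> Vector:
    """Spherical linear interpolation: t=0 returns v0, t=1 returns v1."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the two weight directions, clamped for acos.
    dot = sum(a * b for a, b in zip(v0, v1)) / max(norm0 * norm1, eps)
    dot = max(-1.0, min(1.0, dot))
    if abs(dot) > 0.9995:
        # Nearly (anti)parallel: sin(theta) is ~0, so fall back to linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    theta = math.acos(dot)
    s0 = math.sin((1 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


def ties_merge(base: Vector, models: List[Vector], density: float = 0.5) -> Vector:
    """Simplified TIES: trim, elect sign, disjoint mean (no weights or scaling)."""
    # 1. Task vectors: each model's difference from the shared base.
    deltas = [[m - b for m, b in zip(model, base)] for model in models]
    # 2. Trim: keep the top `density` fraction of entries by magnitude
    #    (entries tied with the threshold are also kept).
    trimmed = []
    for d in deltas:
        k = max(1, int(round(density * len(d))))
        threshold = sorted((abs(x) for x in d), reverse=True)[k - 1]
        trimmed.append([x if abs(x) >= threshold else 0.0 for x in d])
    merged = []
    for i, b in enumerate(base):
        column = [tv[i] for tv in trimmed]
        # 3. Elect sign: the sign of the summed trimmed values at this position.
        total = sum(column)
        sign = 1.0 if total > 0 else -1.0 if total < 0 else 0.0
        # 4. Disjoint mean: average only the values agreeing with the elected sign.
        agreeing = [x for x in column if x * sign > 0]
        delta = sum(agreeing) / len(agreeing) if agreeing else 0.0
        merged.append(b + delta)
    return merged
```

SLERP blends two models along the arc between their weight directions rather than the straight line, while TIES discards small and sign-conflicting changes before averaging; in the second position of a merge like `ties_merge([0.0] * 4, [[1.0, -2.0, 0.1, 0.0], [3.0, 1.0, -0.1, 0.0]])`, the minority `+1.0` is dropped in favor of the dominant negative change. A real merge tool applies operations like these tensor by tensor across the whole model.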

Ancestor Models:

Top influences: These ancestors are base models and are present in the model_stocks, but they are heavily re-emphasized in the DELLA and SLERP merges.

arcee-ai/Virtuoso-Small - A brand-new model from Arcee, refined from the notable cross-architecture Llama-to-Qwen distillation arcee-ai/SuperNova-Medius. It has proven to be a well-rounded performer and contributes a noticeable boost to the model's prose quality. The first two layers of Lamarck are nearly exclusively from Virtuoso.
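One way a merge can keep its first layers nearly pure Virtuoso is with a per-layer interpolation factor. The sketch below is a hypothetical helper, not this model's actual configuration: `layer_weights` spreads a few anchor values linearly across the layers, and `t = 0.0` is assumed to mean "all Virtuoso" (Virtuoso as the first model of a SLERP). The anchor values and the 8-layer count are illustrative only.

```python
from typing import List


def layer_weights(anchors: List[float], num_layers: int) -> List[float]:
    """Linearly spread a few anchor values across num_layers layers.

    Hypothetical helper: anchors[0] applies to the first layer and
    anchors[-1] to the last; layers in between are interpolated.
    """
    if num_layers == 1:
        return [anchors[0]]
    weights = []
    for layer in range(num_layers):
        # Position of this layer along the anchor list, from 0 to len(anchors) - 1.
        pos = layer / (num_layers - 1) * (len(anchors) - 1)
        lo = int(pos)
        hi = min(lo + 1, len(anchors) - 1)
        frac = pos - lo
        weights.append(anchors[lo] * (1 - frac) + anchors[hi] * frac)
    return weights


# Hypothetical schedule: t = 0.0 keeps Virtuoso, larger t mixes in the other model.
t = layer_weights([0.0, 0.5, 0.5], num_layers=8)
t[0] = t[1] = 0.0  # pin the first two layers to (nearly) pure Virtuoso
print([round(x, 3) for x in t])
```

Pinning individual layers after interpolation keeps the rest of the schedule smooth while guaranteeing that the earliest layers come almost entirely from one parent.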

CultriX/SeQwence-14B-EvolMerge - A well-rounded model with interesting gains in instruction following, while remaining strong in reasoning.

CultriX/Qwen2.5-14B-Wernicke - A top performer on ARC and GPQA, Wernicke is re-emphasized in small but highly ranked portions of the model.