
Tokenizer {#tokenizer}

We trained our tokenizer with SentencePiece's Unigram model and then loaded it as `MT5TokenizerFast`.
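SentencePiece's Unigram model gives each vocabulary piece a log-probability and segments text by choosing the split with the highest total score, which it finds with the Viterbi algorithm. The stdlib sketch below illustrates only that segmentation step. The toy vocabulary and its scores (`VOCAB`) are invented, whereas a real model learns them during training. The helper names are hypothetical, and this is not the actual SentencePiece or `MT5TokenizerFast` code. It follows SentencePiece's convention of marking spaces with `▁` (U+2581) and prepending one to the input.

```python
import math

# Toy unigram vocabulary: piece -> log-probability.
# These scores are invented for illustration; a real SentencePiece
# Unigram model learns them from the training corpus.
VOCAB = {
    "▁def": -3.0, "▁foo": -4.0, "▁de": -4.5, "▁f": -4.0, "oo": -4.0,
    "▁": -2.0, "d": -3.5, "e": -3.5, "f": -3.5, "o": -3.5,
}


def to_sp_text(text: str) -> str:
    """Mark spaces with '▁' and add a leading '▁', as SentencePiece does by default."""
    return "▁" + text.replace(" ", "▁")


def viterbi_segment(text: str, vocab: dict[str, float]) -> list[str]:
    """Return the segmentation of `text` with the highest total log-probability."""
    n = len(text)
    max_len = max(len(piece) for piece in vocab)
    # best[i] = (best score for text[:i], start index of the last piece used)
    best = [(-math.inf, -1)] * (n + 1)
    best[0] = (0.0, -1)
    for end in range(1, n + 1):
        for start in range(max(0, end - max_len), end):
            piece = text[start:end]
            if piece not in vocab or best[start][0] == -math.inf:
                continue
            score = best[start][0] + vocab[piece]
            if score > best[end][0]:
                best[end] = (score, start)
    if best[n][0] == -math.inf:
        raise ValueError(f"cannot segment {text!r} with this vocabulary")
    # Follow the stored start indices backwards to recover the pieces.
    pieces = []
    end = n
    while end > 0:
        start = best[end][1]
        pieces.append(text[start:end])
        end = start
    return pieces[::-1]


print(viterbi_segment(to_sp_text("def foo"), VOCAB))  # prints ['▁def', '▁foo']
```

With these scores, keeping whole words (`▁def`, `▁foo`) beats splitting them into lower-probability fragments such as `▁de` + `f`.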

Model {#model}

We used the mT5-base model.

Datasets {#datasets}

We trained the model on the CodeSearchNet dataset and on additional data scraped from the internet. We kept these as a list of datasets, each containing code in a single programming language.
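A list of single-language datasets can be built by grouping records on their language field. The sketch below is a minimal stdlib version; the record layout (`language` and `code` keys) and the `split_by_language` helper are hypothetical, not the preprocessing code actually used for this model.

```python
from collections import defaultdict


def split_by_language(records: list[dict], lang_key: str = "language") -> list[list[dict]]:
    """Group code records into one list per programming language.

    `lang_key` names the field holding the language; "language" is an assumption.
    Languages appear in the order they are first seen, and each group keeps
    the original relative order of its records.
    """
    groups = defaultdict(list)  # language -> records in that language
    for record in records:
        groups[record[lang_key]].append(record)
    return list(groups.values())


records = [
    {"language": "python", "code": "def add(a, b): return a + b"},
    {"language": "java", "code": "int add(int a, int b) { return a + b; }"},
    {"language": "python", "code": "x = [i * i for i in range(3)]"},
]
datasets = split_by_language(records)
print([[r["language"] for r in ds] for ds in datasets])  # [['python', 'python'], ['java']]
```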

Plots {#plots}

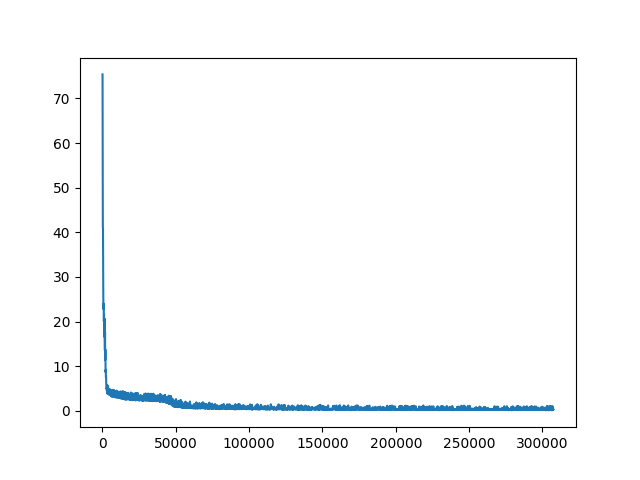

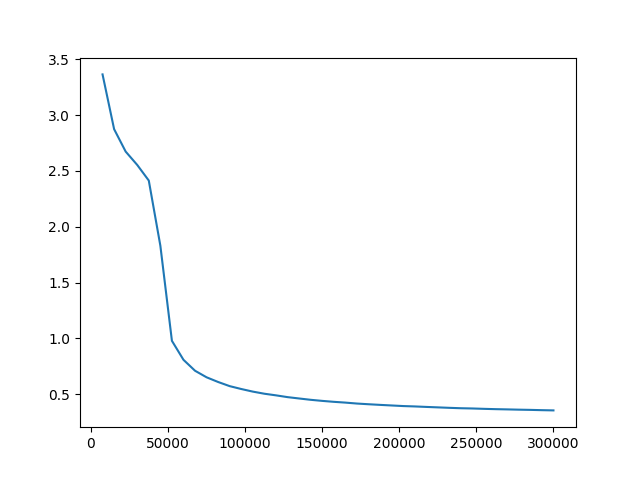

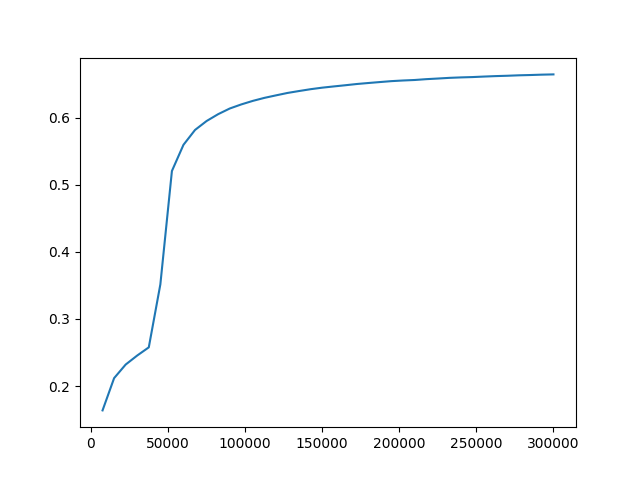

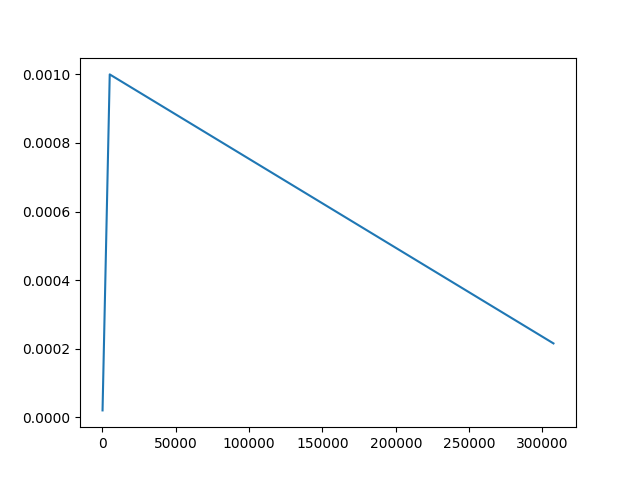

The plots above show the train loss, evaluation loss, evaluation accuracy, and learning rate.

Fine-tuning {#fine-tuning}

We fine-tuned the model on the CodeXGLUE code-to-code-trans dataset and on scraped data.
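CodeXGLUE's code-to-code-trans task consists of parallel Java and C# functions. For text-to-text fine-tuning, each pair is usually turned into an input string and a target string. The sketch below shows one way to do that under stated assumptions: the `java`/`cs` field names, the `translate X to Y: ` task prefix, training in both directions, and the `to_seq2seq_pairs` helper are all illustrative, not the exact format used for this model. The scraped data would need its own conversion into the same shape.

```python
# Display names for the two code fields; the "java"/"cs" keys are assumed.
LANG_NAMES = {"java": "Java", "cs": "C#"}


def to_seq2seq_pairs(example: dict) -> list[dict]:
    """Turn one parallel Java/C# example into text-to-text pairs for both directions.

    The "translate X to Y: " prefix is a hypothetical task prefix, not
    necessarily the format used to fine-tune this model.
    """
    pairs = []
    for src, tgt in (("java", "cs"), ("cs", "java")):
        prefix = f"translate {LANG_NAMES[src]} to {LANG_NAMES[tgt]}: "
        pairs.append({"input_text": prefix + example[src], "target_text": example[tgt]})
    return pairs


example = {"id": "0", "java": "System.out.println(x);", "cs": "Console.WriteLine(x);"}
for pair in to_seq2seq_pairs(example):
    print(pair["input_text"], "->", pair["target_text"])
```

The resulting strings would still need to be tokenized, for example with the `MT5TokenizerFast` tokenizer described above, before training.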